Security leaders described a familiar scene: noisy dashboards, overlapping detections, and urgent tickets zigzagging between teams, while attackers moved at machine speed through cloud, identity, and endpoint gaps that refused to align under pressure. Several CISOs framed tool sprawl as more than an inconvenience; it had become a structural drag on risk reduction, with stacks of 30-plus tools working in parallel but rarely in concert. In their view, the AI surge amplified this divide, accelerating both detection and deception while leaving remediation workflows stuck in manual mode.

Practitioners from large enterprises and midmarket firms converged on the same pain point: inconsistent data. Different formats, naming conventions, and severity scales forced analysts to translate rather than triage. Some SOC leaders argued that consolidation alone doesn’t fix this, since even a single-vendor stack (“one throat to choke”) still produces multiple feeds and multiple teams. Others countered that consolidation helps only when paired with open integration, so that exposure findings can flow end to end, from discovery to patch, config change, or code fix, without spreadsheet choreography.

The mechanics of unification: where standards, platforms, and teams converge

Platform architects and product heads emphasized that unification is an operating model, not a procurement slogan. The strongest outcomes surfaced when APIs, shared schemas, and role-aware playbooks sat under the same roof. Identity, endpoint, cloud, and network tools didn’t need to be from one vendor; they needed to speak a common language and pass decisions to the systems where people already work—ITSM for ops, repos and pipelines for developers, and SOAR for runbooks.

There was also agreement that business context had to be a first-class input. Asset criticality, data sensitivity, and exploit signals establish what matters now, not just what exists. Some leaders warned that “context sprawl” can mirror tool sprawl if it isn’t standardized, turning prioritization into a debate rather than a decision. The shared remedy: normalize the data, codify the rules, and automate the handoff.
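One way to picture "codify the rules" is a small scoring function that blends a finding's base severity with the three context inputs named above. The sketch below is illustrative only: the scales, weights, and field names are assumptions made for this example and do not come from any participant or product.

```python
from dataclasses import dataclass

# Hypothetical scales, chosen only for illustration.
CRITICALITY = {"low": 1, "medium": 2, "high": 3, "crown_jewel": 4}
SENSITIVITY = {"public": 1, "internal": 2, "confidential": 3, "regulated": 4}


@dataclass(frozen=True)
class Exposure:
    finding_id: str
    base_severity: float      # 0-10, for example a CVSS-style base score
    asset_criticality: str    # key into CRITICALITY
    data_sensitivity: str     # key into SENSITIVITY
    exploit_observed: bool    # True if exploit intel reports active use


def priority_score(e: Exposure) -> float:
    """Scale base severity by business context, capped at 10."""
    # Context ranges from 1/16 (low value, public data) to 1.0 (crown jewel, regulated).
    context = CRITICALITY[e.asset_criticality] * SENSITIVITY[e.data_sensitivity] / 16
    # Assumed multiplier for exposures with a known exploit.
    exploit_factor = 1.5 if e.exploit_observed else 1.0
    score = e.base_severity * (0.5 + 0.5 * context) * exploit_factor
    return round(min(10.0, score), 2)


def rank(exposures: list[Exposure]) -> list[Exposure]:
    """Return exposures ordered from most to least urgent."""
    return sorted(exposures, key=priority_score, reverse=True)
```

With rules like these written down, a moderately severe finding on a crown-jewel asset with a known exploit can outrank a nominally "critical" finding on a low-value host, and the ordering is reproducible rather than debated.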

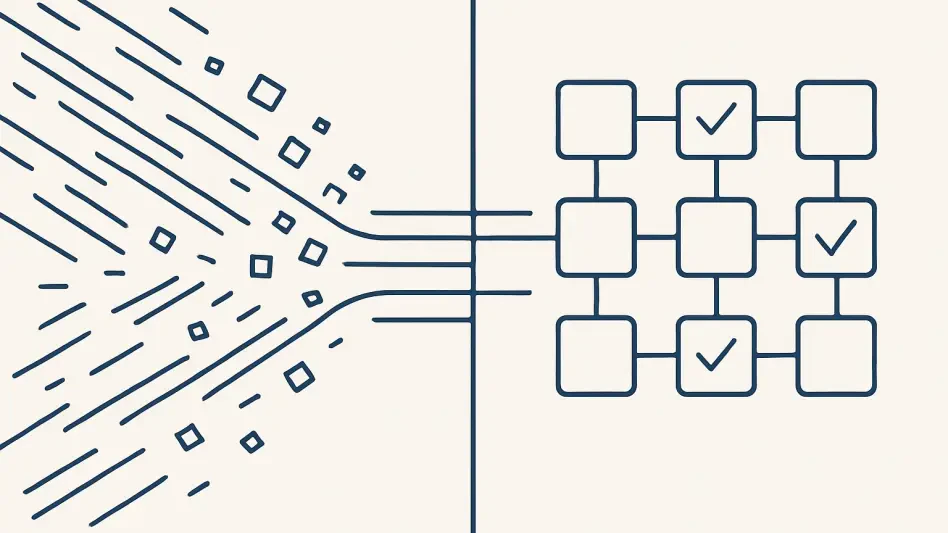

Normalizing the noise: OCSF and the emergence of a common cyber data language

Across interviews, OCSF surfaced as the pragmatic path to reduce normalization toil. Vendors described faster integrations and fewer edge cases when logs, alerts, and asset attributes mapped to a common schema. Security teams echoed this, noting that once OCSF aligned data fields, correlation made more sense and dashboards stopped arguing with each other. Rather than forcing every tool to perform custom translations, the schema absorbed the heavy lifting.
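As a rough illustration of what mapping to a common schema involves, the sketch below converts two invented vendor alert formats into one shared record shape. The output is a simplified, OCSF-style subset: the field names (`class_uid`, `severity_id`, `time`, `finding_info`, `metadata`) and the severity values are modeled on the OCSF schema but should be checked against the published version in use. Both vendor formats and every function name here are hypothetical.

```python
from datetime import datetime

# Severity ids modeled on OCSF's severity_id enum; verify against the schema version in use.
SEVERITY_ID = {"informational": 1, "low": 2, "medium": 3, "high": 4, "critical": 5}

# Assumed class id for a detection finding; confirm against the schema before relying on it.
DETECTION_FINDING_CLASS_UID = 2004


def _finding(uid: str, title: str, severity_id: int, time_ms: int, vendor: str) -> dict:
    """Build the shared record shape used by every source."""
    return {
        "class_uid": DETECTION_FINDING_CLASS_UID,
        "time": time_ms,  # milliseconds since the Unix epoch
        "severity_id": severity_id,
        "finding_info": {"uid": uid, "title": title},
        "metadata": {"product": {"vendor_name": vendor}},
    }


def _risk_to_severity_id(risk: int) -> int:
    """Map a hypothetical 0-100 risk score onto the shared severity scale."""
    if risk >= 90:
        return SEVERITY_ID["critical"]
    if risk >= 70:
        return SEVERITY_ID["high"]
    if risk >= 40:
        return SEVERITY_ID["medium"]
    if risk >= 10:
        return SEVERITY_ID["low"]
    return SEVERITY_ID["informational"]


def normalize_vendor_a(raw: dict) -> dict:
    """Vendor A (hypothetical): text severity, ISO 8601 timestamp with a UTC offset."""
    ts = datetime.fromisoformat(raw["ts"])
    return _finding(
        uid=str(raw["alert_id"]),
        title=raw["name"],
        severity_id=SEVERITY_ID[raw["sev"].lower()],
        time_ms=int(ts.timestamp() * 1000),
        vendor="vendor_a",
    )


def normalize_vendor_b(raw: dict) -> dict:
    """Vendor B (hypothetical): numeric risk score, epoch seconds."""
    return _finding(
        uid=str(raw["id"]),
        title=raw["title"],
        severity_id=_risk_to_severity_id(raw["risk"]),
        time_ms=int(raw["epoch"]) * 1000,
        vendor="vendor_b",
    )
```

Once both feeds produce the same keys, the same severity scale, and the same time unit, a correlation rule can be written once instead of once per vendor.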

Skeptics did not dismiss OCSF; they questioned partial adoption. Some warned that “OCSF-washed” connectors can still smuggle in proprietary fields, reintroducing one-off rules. Others pointed to identity, cloud posture, and vulnerability domains that require careful extensions rather than maximalist mappings. Yet even critics agreed that embracing a shared baseline reclaims analyst time and clarifies cross-tool analytics.

Closing the loop: converting exposure signals into coordinated, automated fixes

Practitioners who reported the biggest gains focused on closure, not just detection. They described pipelines where a high-risk exposure combined exploit intel, asset value, and ownership, then auto-created a change in ITSM, kicked off patching, or opened a pull request with a pre-approved fix. Verification, they stressed, was equally automated—evidence of a successful patch, a suppressed alert, or a guardrail added to the pipeline.

Others highlighted the subtleties of change control. Blind automation can break workloads or violate maintenance windows. The consensus solution was guarded automation: workflows that require approvals at risk thresholds, respect SLAs by tier, and revert safely if checks fail. With these guardrails, time-to-remediate fell meaningfully, and “ticket ping-pong” gave way to an auditable trail from alert to action.
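The guarded pattern described here can be sketched as a small wrapper around any remediation step. This is a minimal sketch under stated assumptions: the approval threshold, the SLA hours per tier, and the `apply`, `verify`, and `revert` callables are placeholders for whatever an ITSM or patching system actually exposes.

```python
from dataclasses import dataclass
from typing import Callable

APPROVAL_THRESHOLD = 7.0                     # hypothetical: riskier changes need a human
SLA_HOURS_BY_TIER = {1: 24, 2: 72, 3: 168}   # hypothetical SLA per asset tier


@dataclass
class Change:
    change_id: str
    risk_score: float   # assumed 0-10 estimate of how risky the change is
    asset_tier: int     # 1 = most critical
    approved: bool = False


@dataclass
class Outcome:
    status: str         # deferred | awaiting_approval | verified | reverted
    sla_hours: int


def run_guarded(
    change: Change,
    apply: Callable[[], None],
    verify: Callable[[], bool],
    revert: Callable[[], None],
    in_window: bool,
) -> Outcome:
    """Apply a change only when the guardrails allow it, and roll back on failed checks."""
    sla = SLA_HOURS_BY_TIER[change.asset_tier]
    # Respect maintenance windows before anything else.
    if not in_window:
        return Outcome("deferred", sla)
    # Require a human approval at or above the risk threshold.
    if change.risk_score >= APPROVAL_THRESHOLD and not change.approved:
        return Outcome("awaiting_approval", sla)
    apply()
    # Verification is part of the workflow, not a follow-up task.
    if verify():
        return Outcome("verified", sla)
    revert()
    return Outcome("reverted", sla)
```

Each returned status is a natural point to write an audit record, which is what turns the workflow into a trail from alert to action.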

Platforms beat silos: real-world proof that open ecosystems outpace walled gardens

Platform teams compared outcomes across open and closed approaches. In open environments, EDR, CNAPP, SIEM, PAM, SOAR, and patching systems exchanged signals without translation debt, letting analysts pivot from an exposure to endpoint containment, cloud guardrails, and identity hardening in one motion. The same leaders reported cleaner handoffs to IT and dev because the action lived inside their native tools.

Supporters of closed suites argued for single-vendor simplicity. Yet customers recounted a cost: rigid roadmaps and integrations that favored the suite over the stack. In contrast, API-first platforms that validated connectors publicly delivered faster triage and fewer failed remediations. The message from the field: integration maturity consistently outweighed feature novelty when measured against actual risk reduction.

Beyond integration: cross-functional playbooks, ownership, and business context at work

Operations leaders underscored that workflows succeed when people agree on “who does what, when.” Playbooks that tied exposure severity to asset tiers, owners, SLAs, and approvals helped reduce friction at every handoff. Dev teams warmed to security tickets when they arrived with code context, reproducible steps, and deployment-safe windows. IT ops responded faster when tasks aligned with existing change models and maintenance rhythms.
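A playbook of this kind can be as plain as a lookup table that answers "who does what, when" for each combination of severity and asset tier. The table below is a hypothetical sketch: the team names, SLA hours, and approval flags are invented for illustration.

```python
from typing import NamedTuple


class Route(NamedTuple):
    owner: str            # team that receives the task
    sla_hours: int        # time allowed to close it
    needs_approval: bool  # whether a change approval is required first


# Hypothetical playbook keyed by (severity, asset tier); tier 1 is most critical.
PLAYBOOK = {
    ("critical", 1): Route("platform-oncall", 24, True),
    ("critical", 2): Route("it-ops", 72, False),
    ("high", 1): Route("it-ops", 72, True),
    ("high", 2): Route("it-ops", 168, False),
}

# Anything not covered by a rule falls back to security triage.
DEFAULT_ROUTE = Route("security-triage", 336, False)


def route(severity: str, asset_tier: int) -> Route:
    """Look up the owner, SLA, and approval requirement for an exposure."""
    return PLAYBOOK.get((severity.lower(), asset_tier), DEFAULT_ROUTE)
```

Keeping the table in version control gives every team one place to review and change the agreement, instead of renegotiating it ticket by ticket.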

Compliance and risk officers added that accountability mattered as much as speed. They favored dashboards that tracked closure rates by business service, not just by tool, and required evidence that fixes stuck. This blend of ownership and verification turned collaboration from a promise into a habit, with fewer escalations and clearer lines of responsibility.

Turning insight into outcomes: practical guidance for vendors and security leaders

Vendor leaders urged buyers to interrogate ecosystem commitment up front. They recommended asking for integration catalogs, OCSF mappings, and real proof that connectors trigger workflows in SIEM/SOAR, ITSM, identity, endpoint, and cloud. Several advised judging tools by how quickly they deliver a working use case—say, auto-prioritizing exploitable vulns on crown-jewel assets and closing them through patching with verification—rather than by demo breadth.

Enterprise teams emphasized measuring outcomes over activity. They cited metrics such as mean time to remediate by asset class, percentage of exposures resolved through automation, and reduction in duplicate alerts. Many cautioned against all-or-nothing automation; instead, they backed phased rollouts that start with high-confidence patterns and expand as playbooks mature. This approach built trust while surfacing edge cases early.
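The three metrics named above are simple to compute once remediation records share a common shape. The sketch below assumes each record is a dictionary with `asset_class`, `opened`, `closed` (or `None` if still open), and `automated` keys; that record layout is an assumption for this example, not a format described by the participants.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def mttr_hours_by_class(records: list[dict]) -> dict[str, float]:
    """Mean time to remediate, in hours, grouped by asset class (closed items only)."""
    buckets: dict[str, list[float]] = defaultdict(list)
    for r in records:
        if r["closed"] is None:
            continue  # still open, so it has no remediation time yet
        hours = (r["closed"] - r["opened"]).total_seconds() / 3600
        buckets[r["asset_class"]].append(hours)
    return {cls: mean(vals) for cls, vals in buckets.items()}


def pct_automated(records: list[dict]) -> float:
    """Percentage of closed exposures that were resolved through automation."""
    closed = [r for r in records if r["closed"] is not None]
    if not closed:
        return 0.0
    return 100 * sum(1 for r in closed if r["automated"]) / len(closed)


def duplicate_reduction_pct(before: int, after: int) -> float:
    """Percentage drop in duplicate alerts between two comparable periods."""
    if before == 0:
        return 0.0
    return 100 * (before - after) / before
```

Grouping by asset class rather than by tool keeps the numbers tied to what was fixed, not to which product raised the alert.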

The road ahead: open standards as the operating system for exposure management

Participants agreed that market momentum raised the stakes. With cybersecurity spending projected at $213 billion in 2025 and $240 billion in 2026, inefficiency had become too expensive to tolerate. Leaders concluded that a slightly less feature-rich tool with excellent integrations delivered more risk reduction than a standout product that stood alone. The AI wave, coupled with cloud and IoT growth, had rewarded those who favored ecosystem fit and penalized those who clung to walled gardens.

The roundup closed on actionable next steps. Teams adopted OCSF to cut normalization time, demanded validated APIs across core categories, and encoded business context into playbooks that traveled with alerts. Vendors invested in joint roadmaps and co-engineered connectors rather than thin adapters. As a result, detection fed prioritization, prioritization powered coordinated changes, and changes were verified without manual chasing. Readers were encouraged to explore standards documentation, integration reference guides, and outcome-driven case studies to deepen implementation paths and benchmark progress.