As digital transformation accelerates, artificial intelligence (AI) agents have become integral parts of organizational workflows, and they have opened a new front in cybersecurity: securing their access. These non-human identities (NHIs) behave differently from human users, so security measures designed around people often fail to cover them, leaving vulnerabilities that attackers can exploit. As businesses rely more heavily on AI to automate processes and improve efficiency, the question of how to secure access for these entities grows more pressing. A recent survey indicates that 85% of organizations now view Identity and Access Management (IAM) as a fundamental pillar of their security strategy, a sign of growing awareness of the risks involved. The need to safeguard both human and non-human identities is the starting point for examining the complexities of managing AI-driven access in today’s interconnected landscape.

Rising Importance of Identity Management

The significance of IAM has grown as organizations grapple with a rapidly evolving technological environment. Most businesses now treat identity security as a core element of their cybersecurity framework, driven by the realization that unprotected access points can lead to devastating breaches. As AI agents increasingly handle sensitive tasks, integrating them into existing systems forces a reevaluation of how access is granted and monitored. These agents often authenticate through API tokens and cryptographic certificates, and they require dynamic provisioning and strict oversight to prevent unauthorized actions. The challenge lies in balancing operational efficiency with robust security controls, so that the benefits of automation do not come at the cost of new vulnerabilities. As the digital workforce expands, the emphasis on IAM reflects a broader understanding that identity is no longer only a human concern but a critical asset across all operational domains.

Compounding the problem, many organizations are unprepared for the specific risks that NHIs such as AI agents pose. Statistics paint a sobering picture: only a small fraction of companies have a mature strategy for managing these entities, and many fail to apply consistent governance standards across their digital and human workforces. This gap is especially concerning because NHI usage is projected to rise over the next 12 to 18 months, and a significant majority of organizations plan to increase investment in workforce identity security. Anxiety about the damage that mismanaged AI access could cause is widespread, and many executives point to the absence of clear accountability mechanisms. Without a cohesive approach, businesses risk exposing sensitive data to threats that are hard to trace or mitigate, which makes it urgent to develop IAM frameworks tailored to non-human actors.

Challenges in Controlling AI Agent Access

One of the most pressing hurdles in securing AI agents is controlling their access and permissions within organizational systems. Nearly 78% of surveyed businesses identify this as a primary concern, pointing to the difficulty of managing entities that lack the inherent accountability of human users. Unlike employees, AI agents often have short, dynamic lifespans, which calls for rapid provisioning and de-provisioning that can strain existing security processes. Their reliance on non-human authentication methods, such as API tokens and certificates, further complicates efforts to secure their interactions with critical systems. Agents that can reach privileged information without a clear owner add another layer of risk, and without consistent logging, post-breach audits become difficult. Together, these issues point to the need for granular, time-limited permissions that limit exposure and guard against misuse.
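To make granular, time-limited permissions concrete, here is a minimal Python sketch, assuming an in-house credential service rather than any particular IAM product. The names `AgentCredential`, `issue_credential`, `authorize`, and `revoke`, the 15-minute default lifetime, and the in-memory `AUDIT_LOG` are all hypothetical. Each credential names an accountable human owner, carries an explicit set of scopes, and expires on its own; every issuance, access decision, and revocation is logged so that a post-incident audit has a record to work from.

```python
import secrets
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical in-memory stores; a real deployment would use durable, append-only storage.
AUDIT_LOG: list[dict] = []
REVOKED_AGENTS: set[str] = set()


def _now(now: Optional[datetime]) -> datetime:
    """Use the supplied timestamp (handy for tests) or the current UTC time."""
    return now if now is not None else datetime.now(timezone.utc)


@dataclass(frozen=True)
class AgentCredential:
    """A short-lived, narrowly scoped credential for a single AI agent."""
    agent_id: str
    owner: str                  # human accountable for this agent
    scopes: frozenset[str]      # explicit permissions; nothing is implied
    expires_at: datetime
    token: str = field(default_factory=lambda: secrets.token_urlsafe(32), repr=False)


def issue_credential(agent_id: str, owner: str, scopes: set[str],
                     ttl: timedelta = timedelta(minutes=15),
                     now: Optional[datetime] = None) -> AgentCredential:
    """Provision a credential that expires on its own after `ttl`."""
    issued_at = _now(now)
    cred = AgentCredential(agent_id, owner, frozenset(scopes), issued_at + ttl)
    AUDIT_LOG.append({"event": "issue", "agent": agent_id, "owner": owner,
                      "scopes": sorted(scopes), "at": issued_at.isoformat()})
    return cred


def revoke(agent_id: str, now: Optional[datetime] = None) -> None:
    """De-provision an agent immediately, regardless of credential expiry."""
    REVOKED_AGENTS.add(agent_id)
    AUDIT_LOG.append({"event": "revoke", "agent": agent_id, "at": _now(now).isoformat()})


def authorize(cred: AgentCredential, scope: str, now: Optional[datetime] = None) -> bool:
    """Allow only unrevoked, unexpired credentials that explicitly hold `scope`; log every decision."""
    at = _now(now)
    allowed = (cred.agent_id not in REVOKED_AGENTS
               and at < cred.expires_at
               and scope in cred.scopes)
    AUDIT_LOG.append({"event": "access", "agent": cred.agent_id, "owner": cred.owner,
                      "scope": scope, "allowed": allowed, "at": at.isoformat()})
    return allowed
```

For example, a credential issued with the scope `crm:read` authorizes `crm:read` until it expires, refuses `crm:write` at any time, and refuses everything once `revoke` has been called for that agent. The token is excluded from the dataclass `repr` so it does not leak into logs by accident.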

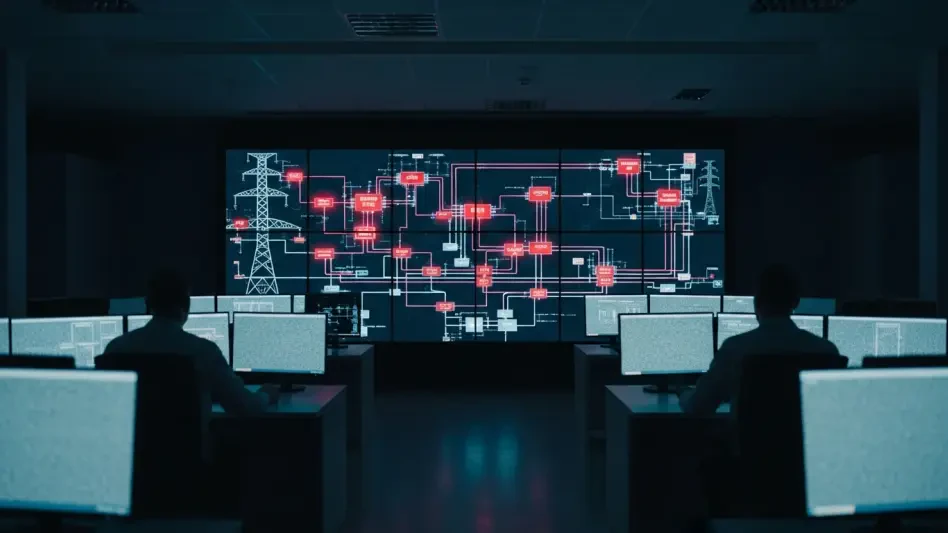

Beyond access control, organizations struggle to achieve visibility into AI agents and to manage their lifecycle. A significant portion of companies report poor visibility into NHI activity, which makes it difficult to detect anomalies or unauthorized actions in real time. Lifecycle management poses its own problems: because AI agents are transient, their access policies need continuous updates that many systems are ill-equipped to handle. Remediating risky NHI accounts is harder still, with over half of businesses struggling to address these vulnerabilities promptly. Together, these challenges increase the likelihood that AI-driven systems become attack vectors, especially where oversight remains fragmented. Closing these gaps requires solutions that prioritize real-time monitoring and adaptive governance to keep pace with the evolving role of AI in the enterprise.
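A periodic inventory sweep is one way to turn poor visibility and slow remediation into a concrete work queue. The Python sketch below assumes each NHI account record carries an owner, an enabled flag, an expiry, a last-used timestamp, and a scope set; the `NHIAccount` fields, the `BROAD_SCOPES` markers, and the 30-day idle threshold are illustrative assumptions, not any product's schema. It flags accounts with no accountable owner, credentials with no expiry, credentials that have expired but remain enabled, long-idle accounts, and over-broad permissions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical markers of over-privileged scopes; adapt to your own permission model.
BROAD_SCOPES = {"*", "admin"}


@dataclass
class NHIAccount:
    """Minimal, assumed inventory record for one non-human identity."""
    account_id: str
    owner: Optional[str]            # accountable human, or None if unknown
    enabled: bool
    expires_at: Optional[datetime]  # None means the credential never expires
    last_used: Optional[datetime]   # None means no recorded use
    scopes: set[str] = field(default_factory=set)


def find_risky_accounts(accounts: list[NHIAccount], now: datetime,
                        max_idle: timedelta = timedelta(days=30)) -> dict[str, list[str]]:
    """Return account_id -> reasons for every account that needs remediation."""
    findings: dict[str, list[str]] = {}
    for acct in accounts:
        reasons = []
        if acct.owner is None:
            reasons.append("no accountable owner")
        if acct.expires_at is None:
            # Transient agents should not hold credentials that never expire.
            reasons.append("no expiry set")
        elif acct.enabled and acct.expires_at <= now:
            reasons.append("expired but still enabled")
        if acct.enabled and (acct.last_used is None or now - acct.last_used > max_idle):
            reasons.append("idle beyond threshold")
        if acct.scopes & BROAD_SCOPES:
            reasons.append("over-broad scopes")
        if reasons:
            findings[acct.account_id] = reasons
    return findings
```

Running a sweep like this on a schedule, and routing each finding to the account's owner (or to a security team when no owner exists), keeps remediation from depending on someone noticing the problem by chance.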

Strategies for Securing Non-Human Identities

To tackle the mounting risks associated with AI agents, organizations need to apply IAM controls to non-human identities that are as rigorous as those applied to their human workforce. Experts advocate a unified security policy that also covers contractors, vendors, and partners, so that no entity operates outside the protective framework. This approach brings key stakeholders, such as data officers and business leaders, into governance models so that access needs are understood holistically. Embedding security through enhanced visibility, lifecycle access controls, and threat detection can significantly reduce exposure. A secure-by-design approach is also recommended, with robust user authentication and API controls protecting workflows that involve AI agents. Together, these measures aim to build resilient infrastructure that can adapt to the unique demands of non-human access.

Another critical strategy is to treat AI agents as their own security category, since they fit neatly into neither human nor machine classifications. Tying each agent to a human overseer establishes clearer lines of accountability, while policies that prevent and detect improper activity add a vital layer of protection. Solutions that enable real-time threat detection and response are essential because they match the dynamic nature of AI operations and contain potential damage before it escalates. The projected increase in identity security investment signals a willingness to prioritize these efforts, but success depends on overcoming internal disarray, such as siloed teams and inconsistent adoption strategies. By fostering collaboration across departments and aligning security practices with organizational goals, businesses can build a foundation that supports both innovation and protection in an AI-driven landscape.
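Tying each agent to a human overseer and enforcing policies that prevent and detect improper activity can be combined in one checkpoint in front of the agent's actions. The Python sketch below assumes a hypothetical `AgentGuard` that every agent request passes through; the `AgentPolicy` fields, the per-minute rate threshold, and the `security-team` fallback recipient are illustrative assumptions. It prevents improper activity with a deny-by-default allow-list, and it detects unusual activity by flagging bursts above the agent's expected rate, routing every alert to the accountable human.

```python
from collections import defaultdict, deque
from dataclasses import dataclass

# Hypothetical fallback recipient for alerts about agents with no registered owner.
FALLBACK_REVIEWER = "security-team"


@dataclass(frozen=True)
class AgentPolicy:
    owner: str                    # human overseer accountable for the agent
    allowed_actions: frozenset    # explicit allow-list; anything else is denied
    max_actions_per_minute: int   # assumed baseline rate used for detection


class AgentGuard:
    """Single checkpoint that every agent action passes through (illustrative only)."""

    def __init__(self, policies: dict[str, AgentPolicy]):
        self.policies = policies
        self.recent: dict[str, deque] = defaultdict(deque)  # agent_id -> timestamps of allowed actions
        self.alerts: list[tuple[str, str, str]] = []        # (recipient, agent_id, reason)

    def check(self, agent_id: str, action: str, ts: float) -> bool:
        """Return True if the action may proceed; record alerts for humans to review."""
        policy = self.policies.get(agent_id)
        if policy is None:
            # Prevent: an agent with no accountable human is never allowed to act.
            self.alerts.append((FALLBACK_REVIEWER, agent_id, "unregistered agent"))
            return False
        if action not in policy.allowed_actions:
            # Prevent: deny by default and tell the owner what was attempted.
            self.alerts.append((policy.owner, agent_id, f"denied action {action!r}"))
            return False
        # Detect: keep a 60-second sliding window of this agent's allowed actions.
        window = self.recent[agent_id]
        while window and ts - window[0] >= 60:
            window.popleft()
        window.append(ts)
        if len(window) > policy.max_actions_per_minute:
            # Flag, but do not block, bursts above the expected rate.
            self.alerts.append((policy.owner, agent_id, "unusual activity rate"))
        return True
```

Whether a rate spike should block the agent or only alert its owner is a policy decision; this sketch chooses to alert, so legitimate bursts are not interrupted while a human reviews them.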

Building a Future-Ready Security Framework

Taken together, these findings show that organizations have long recognized the pivotal role of IAM in safeguarding their digital ecosystems against evolving threats. Heightened awareness of the risks tied to AI agents and other NHIs has prompted a wave of concern, and many organizations acknowledge gaps in strategic readiness despite their intentions to increase investment. The discussion around managing access for these entities reveals a shared urgency to establish stricter guardrails and cohesive governance models.

Looking ahead, the path forward requires actionable steps that go beyond recognizing the problem. Businesses should prioritize centralized governance frameworks that build in security from the ground up, so that AI agents operate within defined boundaries. Working with stakeholders to embed visibility and threat detection into every layer of operations is a key focus, alongside the adoption of secure-by-design principles. As the landscape continues to evolve, staying proactive through adaptive policies and cross-functional alignment offers the best chance to harness AI’s potential while protecting critical systems against emerging risks.