In an era where digital infrastructure serves as the backbone of global commerce and communication, a single glitch can ripple across the internet with devastating consequences, as seen last week with Cloudflare Inc. The San Francisco-based cybersecurity giant, known for safeguarding millions of websites against distributed denial-of-service (DDoS) attacks, found itself at the center of a massive disruption. Ironically, the outage that affected countless online services worldwide wasn’t the result of an external threat but a self-inflicted wound caused by an internal software bug. The incident, which halted operations for numerous businesses and frustrated users globally, exposed hidden vulnerabilities in even the most robust systems. It serves as a stark reminder that as technology advances, seamless operations become harder to maintain, leaving room for unexpected failures. The event has sparked intense discussion in the cybersecurity community about the balance between innovation and reliability in protecting the digital world.

Unraveling the Software Glitch

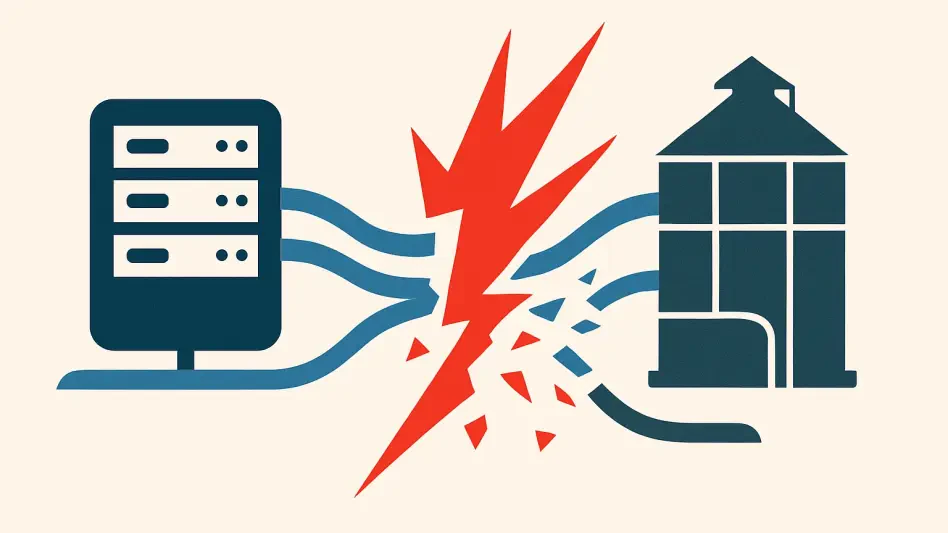

The root of the global outage traced back to a seemingly innocuous update in Cloudflare’s API layer, intended to optimize traffic management for its vast network of clients. However, hidden within this routine enhancement was a critical logic error that unleashed chaos across the company’s infrastructure. When deployed, the flawed code triggered a series of recursive calls within the API gateway, generating an uncontrollable flood of internal requests. Within minutes, millions of queries bombarded Cloudflare’s servers, mimicking the characteristics of a traditional DDoS attack but originating from within. This self-inflicted surge overwhelmed system resources, leading to significant latency spikes and connection failures that persisted for nearly two hours. The incident disrupted services for a staggering number of websites and enterprise networks, highlighting how a minor oversight in code can escalate into a major operational crisis. Customer frustration quickly surfaced across social platforms, with many questioning how a leader in cybersecurity could falter so dramatically.
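The amplification described above can be illustrated with a toy model. The sketch below is not Cloudflare's code: the `handle` function, the `fan_out` of two internal calls per request, and the `max_hops` limit are all hypothetical. It shows only the general idea that a request which re-enters the same handler multiplies at each level, and that a hop counter (similar in spirit to a TTL) puts a ceiling on that growth.

```python
from dataclasses import dataclass


@dataclass
class Stats:
    handled: int = 0   # requests that did real work
    rejected: int = 0  # requests dropped by the hop limit


def handle(hops: int, stats: Stats, max_hops: int = 3, fan_out: int = 2) -> None:
    """Hypothetical gateway handler that calls itself `fan_out` times.

    Without the hop check, every level multiplies the request count by
    `fan_out` and the recursion never terminates on its own.
    """
    if hops >= max_hops:
        # Guard: refuse requests that have already re-entered too often.
        stats.rejected += 1
        return
    stats.handled += 1
    for _ in range(fan_out):
        # Each internal call carries an incremented hop count.
        handle(hops + 1, stats, max_hops, fan_out)


def simulate(max_hops: int = 3, fan_out: int = 2) -> Stats:
    """Send one external request and count what it turns into."""
    stats = Stats()
    handle(0, stats, max_hops, fan_out)
    return stats


if __name__ == "__main__":
    print(simulate())
```

With the default values, one incoming request becomes 7 handled requests and 8 rejected ones. Raising `max_hops` by one roughly doubles both numbers, which is why an unbounded loop of this shape can saturate a system within minutes.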

Compounding the issue was the failure of Cloudflare’s own protective mechanisms to identify the internal nature of the traffic flood. Designed to combat external threats, these safeguards were blindsided by the anomaly, unable to distinguish between legitimate operations and the destructive loop caused by the bug. Company representatives later described the situation as an unprecedented convergence of errors, in which multiple layers of defense were bypassed because the traffic originated inside the network. This oversight revealed a critical gap in internal monitoring, as the systems prioritized external threat detection over the possibility of self-inflicted overload. The outage not only disrupted service delivery but also exposed the intricate dependencies within modern cybersecurity frameworks, where a single point of failure can cascade into widespread impact. Industry observers noted that such incidents underscore the challenges of managing increasingly complex software environments, especially for firms handling global-scale traffic.
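One way to picture the monitoring gap is a rate check that treats every traffic source the same way, whether it is an outside client or an internal service. The following is a minimal sketch under stated assumptions: the `RateMonitor` class, the source label `"internal-api"`, and the thresholds are invented for illustration and do not describe Cloudflare's actual safeguards.

```python
from collections import defaultdict, deque


class RateMonitor:
    """Sliding-window request counter keyed by traffic source.

    The source label can be anything (an external client, an internal
    service name); the same limit applies regardless of origin.
    """

    def __init__(self, window_seconds: float, max_requests: int) -> None:
        self.window = window_seconds
        self.max_requests = max_requests
        self._events = defaultdict(deque)  # source -> recent timestamps

    def record(self, source: str, now: float) -> bool:
        """Record one request; return True if `source` is over the limit."""
        events = self._events[source]
        events.append(now)
        # Discard timestamps that have fallen out of the window.
        while events and events[0] <= now - self.window:
            events.popleft()
        return len(events) > self.max_requests


if __name__ == "__main__":
    monitor = RateMonitor(window_seconds=1.0, max_requests=3)
    for t in (0.0, 0.1, 0.2, 0.3, 2.0):
        print(t, monitor.record("internal-api", t))
```

Timestamps are passed in explicitly so the behavior is easy to test. In this run the fourth request inside one second is flagged, and the request at `2.0` is not, because the earlier entries have expired from the window.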

Contrasting Strengths and Vulnerabilities

Cloudflare’s reputation as a formidable defender against digital threats was recently bolstered by its success in mitigating a record-breaking DDoS attack of 11.5 terabits per second, neutralizing it in under a minute. This feat showcased the strength of its automated defenses and reinforced trust among clients who rely on the company to protect their online assets from sophisticated external assaults. The rapid response to such a massive threat demonstrated the effectiveness of Cloudflare’s infrastructure in handling crises orchestrated by malicious actors, often leveraging compromised IoT devices. Yet, the self-DDoS incident starkly contrasted this triumph, revealing a vulnerability not from outside forces but from within the company’s own code. It highlighted a sobering reality: even the most advanced systems are susceptible to human error, especially as software updates and integrations become more frequent and intricate in response to evolving threats.

This duality of strength and fragility has sparked broader conversations within the cybersecurity sector about the risks of rapid innovation. As companies like Cloudflare integrate cutting-edge technologies such as AI-driven threat detection, the potential for unintended consequences grows, particularly during accelerated development cycles. Analysts point out that while external attacks are often anticipated and countered with robust protocols, internal missteps can be just as disruptive, yet harder to predict. The outage served as a wake-up call, emphasizing that the pursuit of technological advancement must be matched with meticulous testing and oversight. For Cloudflare, which supports over 30 million internet properties, maintaining client confidence hinges on addressing these internal weaknesses. The incident illustrated that reliability isn’t solely about fending off attackers but also about safeguarding against self-inflicted disruptions in an era of complex digital dependencies.

Cloudflare’s Response and Industry Implications

In the aftermath of the outage, Cloudflare adopted a transparent approach by releasing a comprehensive postmortem on its engineering blog, detailing the root cause of the self-DDoS event. The company acknowledged the logic error in the API update and outlined immediate steps to prevent recurrence, including enhanced simulation testing for future deployments. Leadership also committed to integrating machine learning models to better distinguish between normal internal operations and anomalous patterns that could signal trouble. This proactive stance aimed to reassure clients and stakeholders of Cloudflare’s dedication to resilience, both against external threats and internal flaws. Despite the temporary dent in its reputation, with some competitors seizing the opportunity to highlight their own stability, the response was largely viewed as a step in the right direction by industry watchers who recognize the inevitability of such challenges in a fast-evolving field.

Beyond Cloudflare’s actions, the incident shed light on broader implications for the cybersecurity industry, where the balance between innovation and operational dependability remains delicate. The event underscored the necessity of rigorous auditing and internal traffic monitoring to prevent self-sabotage, especially as digital infrastructure becomes increasingly vital to global economies. Experts argue that redundancy and robust testing protocols are no longer optional but essential for firms managing critical online services. While Cloudflare remains a market leader, the outage momentarily shook trust among clients who expect uninterrupted uptime, prompting a reevaluation of risk management strategies across the sector. This situation serves as a cautionary tale, reminding companies that the greatest threats can sometimes emerge from within, hidden in lines of code rather than external malice, and must be addressed with equal vigilance.

Lessons Learned for Future Safeguards

In retrospect, the self-DDoS incident became a pivotal moment for Cloudflare to reassess and strengthen its internal safeguards. The mishap, though resolved in under two hours, left its mark on millions of users and businesses reliant on seamless connectivity. It exposed how even minor errors in routine updates could spiral into global outages if unchecked, driving home the importance of preemptive measures. The company’s subsequent focus on advanced testing and machine learning integration signaled a commitment to learning from past oversights. This approach not only aimed to rebuild trust but also positioned Cloudflare to potentially set new standards for internal resilience in the cybersecurity realm, where such incidents had previously been rare but impactful.

The broader industry also took note of the need for enhanced protocols to mitigate similar risks, recognizing that internal errors can pose as significant a threat as external attacks. The event prompted discussions on developing more sophisticated monitoring tools capable of detecting anomalies regardless of origin. For stakeholders, the takeaway was clear: balancing rapid technological advancement with stability requires investment in redundancy and foresight. As Cloudflare implemented these lessons, the focus shifted to actionable strategies like comprehensive code reviews and stress testing under simulated conditions. This forward-looking perspective aimed to ensure that future innovations would be fortified against self-inflicted disruptions, safeguarding the digital ecosystem for years to come.