A senior finance officer stared at a gallery of familiar faces on a live call and sent $25 million in minutes, yet not a single server, inbox, or network had been breached. The faces, voices, and mannerisms looked right; the cadence of the CFO’s instructions matched prior meetings. Later, investigators found that the video participants were synthetic, the audio was cloned, and the meeting had been engineered end to end to trigger a routine-looking approval for an outsized sum.

The incident mirrored a now-famous case at Arup in Hong Kong, where a videoconference paved the way for a massive transfer. It was the kind of attack that needed no zero-days or lateral movement, only a convincing performance and a workforce trained to trust what it sees and hears in fast-moving workflows.

Why this matters now

Deepfakes moved from curiosity to credible threat because the barrier to entry collapsed. Real-time face swaps now run on consumer GPUs, and a single social photo can seed a convincing overlay. Synthetic voices are even easier to spin up, turning a few seconds of audio into a serviceable clone that passes casual checks and rushed callbacks.

The trend line is clear. Industry trackers reported a deepfake attempt every five minutes last year, and 6.5% of fraud cases are now linked to synthetic media, an explosive rise for what was recently a fringe technique. Losses topped $200 million across 163 documented incidents in the first quarter alone, a sign that this is no longer an edge case but a mainstream fraud vector with board-level implications.

Trust itself has become the attack surface. Under deadline pressure, visual and audio cues short-circuit judgment, especially when communications arrive in familiar formats: a scheduled meeting, a polite help-desk request, an onboarding call. As one identity CTO put it, “Most people can’t reliably tell under normal work conditions,” which is why defenses must verify presence, not just appearance.

Inside the new playbook

Today’s deepfake kits aim for speed and plausibility. Off-the-shelf tools deliver live face overlays from a single enrollment photo, while low-compute pipelines keep latency under the threshold most humans notice. The goal is not cinematic perfection but social believability: the split second when a nod, blink, or laugh disarms doubt long enough to move money or grant access.

Attackers have widened the aperture beyond one-off wire fraud. Reports have tied North Korean operatives to deepfake-enabled remote hiring schemes that use blended identities and polished video interviews to win IT roles with access to internal networks. Once inside, these hires can pivot to vendor portals and shared credentials, extending exposure through the supply chain without tripping traditional intrusion alarms.

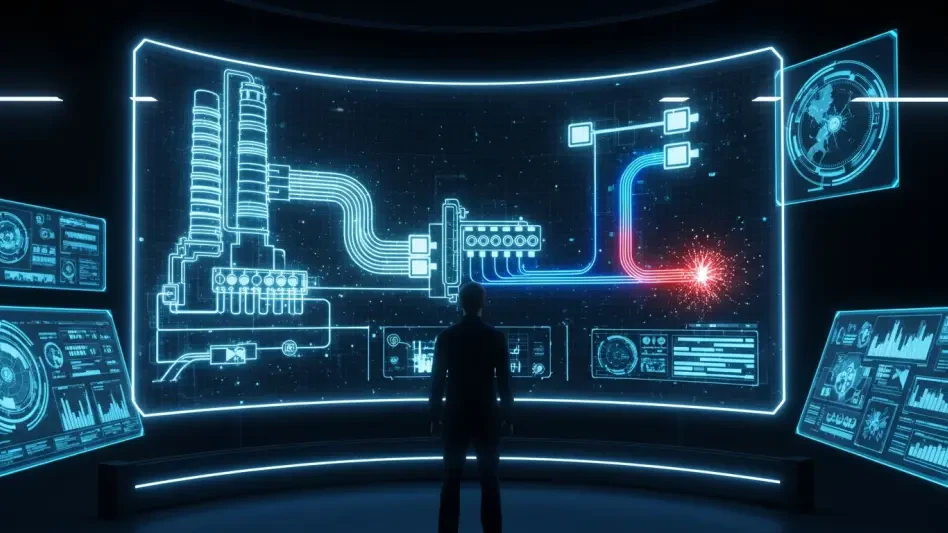

New tradecraft digs beneath the camera frame. Injection attacks feed synthetic streams straight into device inputs, bypassing the lens and defeating simple liveness checks. Jailbroken phones and sideloaded capture apps can route pre-rendered media as if it were live footage, which is why camera-path integrity and endpoint attestation have become critical layers in modern identity stacks.

Where the frontline breaks

The highest-risk environments are often the most ordinary. Contact centers process outsized volumes of approvals, password resets, and payment changes, leaving little time for skepticism. Help desks that “verify by voice” face a new reality: real-time clones now sail through callbacks if process controls rely on familiarity rather than cryptographic proof.

Shared terminals present a different challenge. In warehouses, clinics, and branch locations, employees rotate through kiosks with limited second factors and constrained peripherals. Here, even minor friction can stall operations, which tempts teams to relax controls. That gap is exactly where low-friction synthetic media thrives: short interactions, quick approvals, and minimal scrutiny.

Analysts describe a convergence in the threat picture. Gartner found that 62% of organizations had encountered deepfake-driven social engineering or automated exploitation, even as AI-powered application-layer attacks rose in parallel. The public sector frames it similarly: DHS guidance underscores that synthetic media need not be perfect to mislead; it only needs to be good enough to fit a familiar script under real-world conditions.

What must change next

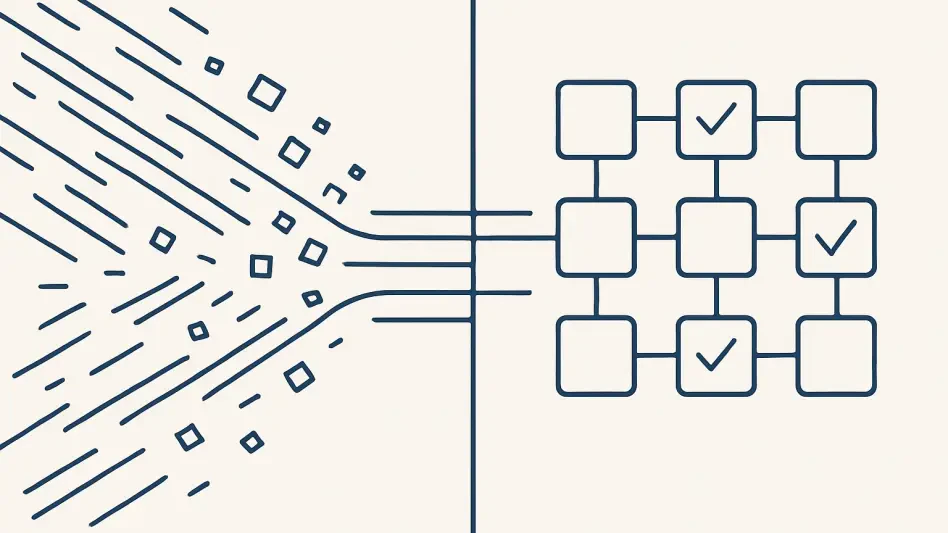

The verification goal must shift from “is it a face?” to “is it a live, present person controlling this session right now?” That means active liveness with challenge-response, controlled illumination, and integrity checks that confirm the camera feed has not been injected or replayed. Binding approvals to real-time presence, rather than static biometrics, neutralizes many of the easiest attacks.
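
To make challenge-response concrete, here is a minimal Python sketch under stated assumptions. The action vocabulary, the 10-second deadline, and the upstream detector that turns video into timestamped `(action, time)` events are all hypothetical. The server issues a random sequence of prompts tied to a session nonce. It accepts a response only if the prompts are performed in order, after the challenge was issued, and before the deadline. A pre-rendered clip cannot anticipate the sequence. A real-time face swap that can follow prompts is a different matter, which is why this check is usually paired with capture-path integrity.

```python
import secrets
import time
from dataclasses import dataclass

# Hypothetical prompt vocabulary for an active liveness check.
ACTIONS = ("blink", "turn_left", "turn_right", "smile", "nod")


@dataclass(frozen=True)
class Challenge:
    nonce: str           # binds the response to this session
    actions: tuple       # prompts the user must perform, in order
    issued_at: float     # time.monotonic() at issue
    ttl_s: float = 10.0  # assumed response deadline in seconds


def issue_challenge(n_actions: int = 3) -> Challenge:
    # A random sequence means a pre-rendered clip cannot anticipate the prompts.
    actions = tuple(secrets.choice(ACTIONS) for _ in range(n_actions))
    return Challenge(secrets.token_hex(16), actions, time.monotonic())


def verify_response(ch: Challenge, nonce: str, observed, now=None) -> bool:
    """observed: (action, timestamp) events from an assumed upstream detector,
    timestamped on the same monotonic clock as the challenge."""
    now = time.monotonic() if now is None else now
    if not secrets.compare_digest(nonce, ch.nonce):
        return False  # response belongs to a different session
    if now - ch.issued_at > ch.ttl_s:
        return False  # too slow: leaves time to render a fake
    # Only events after the challenge count, and they must match exactly, in order.
    performed = [a for a, t in observed if ch.issued_at <= t <= now]
    return performed == list(ch.actions)
```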

Security teams are also hardening the capture pipeline end to end. Device attestation, jailbreak detection, and driver-level protections reduce the chance that synthetic media can be piped into the stack. Where risk is highest, organizations are pairing software with secure cameras and liveness-capable sensors to raise the bar beyond what commodity tools can spoof in real time.
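
One way to picture capture-pipeline integrity is to authenticate every frame on the capture path before it leaves the device. In this sketch, a symmetric HMAC key stands in for a hardware-backed, attested key. That is an assumption: production systems typically rely on asymmetric keys and platform attestation. The names `sign_frame` and `FrameVerifier` are hypothetical. The server rejects frames with a bad tag (injected, altered, or signed for another session) and frames whose counter fails to increase (replayed or reordered).

```python
import hashlib
import hmac


def sign_frame(device_key: bytes, session_id: str, counter: int, frame: bytes) -> bytes:
    """Assumed to run inside the trusted capture path, before apps can touch the frame."""
    msg = (
        session_id.encode()
        + b"|" + counter.to_bytes(8, "big")
        + b"|" + hashlib.sha256(frame).digest()
    )
    return hmac.new(device_key, msg, hashlib.sha256).digest()


class FrameVerifier:
    """Server-side check that each frame came from the enrolled capture path, in order."""

    def __init__(self, device_key: bytes, session_id: str):
        self.device_key = device_key
        self.session_id = session_id
        self.last_counter = -1

    def accept(self, counter: int, frame: bytes, tag: bytes) -> bool:
        expected = sign_frame(self.device_key, self.session_id, counter, frame)
        if not hmac.compare_digest(expected, tag):
            return False  # injected, altered, or signed for another session
        if counter <= self.last_counter:
            return False  # replayed or reordered frame
        self.last_counter = counter
        return True
```

Binding the session ID into every tag means frames captured in one legitimate session cannot be spliced into another.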

Procurement is becoming a sorting mechanism. Buyers increasingly require alignment with the NIST Digital Identity Guidelines and independent presentation attack detection results from European labs such as CLR. Vendors that substantiate false accept and false reject rates against known attack kits earn trust faster because their claims map to recognized assurance baselines rather than marketing gloss.
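
False accept and false reject rates are simple ratios over labeled test trials, which makes them a useful procurement yardstick. The sketch below assumes each trial yields a score and a ground-truth label that says whether it came from a genuine user or an attack. The function name, score scale, and sample data are illustrative. Formal presentation attack detection testing under ISO/IEC 30107-3 reports the analogous APCER and BPCER.

```python
from typing import Iterable, Tuple


def error_rates(trials: Iterable[Tuple[float, bool]], threshold: float) -> Tuple[float, float]:
    """trials: (score, is_genuine) pairs; a score >= threshold counts as accepted.
    Returns (false_accept_rate, false_reject_rate)."""
    false_accepts = false_rejects = n_attacks = n_genuine = 0
    for score, is_genuine in trials:
        accepted = score >= threshold
        if is_genuine:
            n_genuine += 1
            if not accepted:
                false_rejects += 1  # a real user turned away
        else:
            n_attacks += 1
            if accepted:
                false_accepts += 1  # an attack let through
    far = false_accepts / n_attacks if n_attacks else 0.0
    frr = false_rejects / n_genuine if n_genuine else 0.0
    return far, frr


# Illustrative scores only: three genuine sessions and four attack attempts.
SAMPLE = [(0.9, True), (0.4, True), (0.95, True),
          (0.8, False), (0.1, False), (0.2, False), (0.3, False)]
```

Moving the threshold trades one error for the other. On `SAMPLE`, `error_rates(SAMPLE, 0.85)` returns `(0.0, 0.333...)`, and `error_rates(SAMPLE, 0.35)` returns `(0.25, 0.0)`. A vendor's figures therefore mean little without the operating threshold and the attack kits behind them.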

Privacy-preserving design is part of the answer. Zero-knowledge templates, on-device matching, and minimal retention reduce the blast radius if data leaks. In frontline contexts, friction-light biometric flows tuned for kiosks and contact centers help maintain throughput while proving presence. Meanwhile, instrumented telemetry, threat intel subscriptions, and quarterly red-team deepfake drills keep defenses current as attacker tooling evolves.

Leaders inside affected organizations are reframing the problem. Arup’s CIO characterized the videoconference theft as social engineering rather than a classic cyber breach, a distinction that pulls finance, HR, and operations into the solution space. The practical playbook has already expanded: out-of-band callbacks with cryptographic confirmation, just-in-time transaction holds based on contextual risk, and multi-person verification for high-value actions are becoming the standard rather than the exception.
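
These controls can be combined in a single approval rule. In this hypothetical sketch, each approver confirms the exact payment details from a separately registered device. Per-approver HMAC keys model that confirmation, which is an assumption: asymmetric signatures from a hardware-backed authenticator would be the more realistic choice. Payments at or above an illustrative $10,000 threshold need two distinct approvers. A payment to a beneficiary not seen before is held for review even when its approvals are valid. All names, thresholds, and keys are made up for illustration.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class Payment:
    payment_id: str
    amount: int       # minor units (cents)
    beneficiary: str


def payment_digest(p: Payment) -> bytes:
    # Canonical encoding so a confirmation covers exactly these details.
    return hashlib.sha256(json.dumps([p.payment_id, p.amount, p.beneficiary]).encode()).digest()


def confirm(approver_key: bytes, p: Payment) -> bytes:
    """Computed on the approver's own registered device, reached out of band."""
    return hmac.new(approver_key, payment_digest(p), hashlib.sha256).digest()


@dataclass
class ApprovalPolicy:
    approver_keys: dict                      # approver id -> key enrolled in advance
    known_beneficiaries: set
    dual_control_threshold: int = 1_000_000  # hypothetical: $10,000 in cents
    required_approvers: int = 2

    def decide(self, p: Payment, confirmations: dict) -> str:
        # Distinct approvers whose confirmation matches these exact payment details.
        valid = {
            who for who, tag in confirmations.items()
            if who in self.approver_keys
            and hmac.compare_digest(confirm(self.approver_keys[who], p), tag)
        }
        needed = self.required_approvers if p.amount >= self.dual_control_threshold else 1
        if len(valid) < needed:
            return "reject"
        if p.beneficiary not in self.known_beneficiaries:
            return "hold"  # contextual risk: a new payee triggers a just-in-time hold
        return "release"
```

Each confirmation covers the payment ID, amount, and beneficiary. An approver who was persuaded to confirm one payment therefore cannot have that confirmation reused for a redirected one.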

The defining feature of this moment was not that technology failed; it was that trust got gamed. As vendors rolled out stronger liveness and anti-injection controls and standards bodies tightened assurance expectations, the path forward took shape: prove presence, protect the pipeline, and train people to recognize when confidence feels a little too easy. Organizations that acted on those steps had already cut exposure, raised attacker costs, and moved the battleground back toward terrain they could defend.