The astonishing speed at which generative AI is transforming software development is a double-edged sword: it is both a powerful catalyst for innovation and a significant multiplier of existing security risks. While the industry focuses on defending against sophisticated external threats, a more insidious danger is growing from within. It originates not from malicious actors but from the very development processes designed to build and improve software. The core challenge is the sheer volume of AI-assisted code changes, which, combined with relentless pressure for rapid deployment, magnifies the potential for introducing security flaws with devastating consequences. The most common source of cybersecurity incidents is no longer a complex attack vector but the quiet accumulation of small, incremental code changes that developers make every day, and AI is now accelerating that accumulation to a critical new level. This shift demands a radical rethinking of how security is integrated into the software development lifecycle.

The Amplified Risk of Incremental Changes

Long before the widespread adoption of AI, the foundation of modern software security was already being tested by the relentless pace of development. The typical developer commits new code nearly three times per working day, a rate that translates into thousands of individual changes annually for even a moderately sized company. While each of these commits is essential for maintenance, feature enhancement, and innovation, every single alteration also represents a potential entry point for a new vulnerability. This constant stream of modifications creates a vast attack surface that traditional, end-of-cycle security validation processes struggle to cover adequately. The sheer volume of changes means that even with dedicated QA teams, subtle flaws can easily slip through the cracks, accumulating over time until they form a significant security debt that leaves the organization exposed to breaches originating from seemingly innocuous internal updates.
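The "thousands of changes" figure is easy to check with back-of-the-envelope arithmetic. The Python sketch below rounds "nearly three" commits per day up to three and assumes roughly 230 working days per year. Both numbers, and the function name `annual_commits`, are illustrative assumptions rather than figures from the underlying research.

```python
# Back-of-the-envelope estimate of yearly commit volume.
# ASSUMPTIONS (illustrative only): ~3 commits per developer per working day
# and ~230 working days per year. Adjust both to match your own organization.


def annual_commits(developers: int,
                   commits_per_day: float = 3.0,
                   working_days: int = 230) -> int:
    """Estimate the number of commits a team produces in one year."""
    if developers < 0 or commits_per_day < 0 or working_days < 0:
        raise ValueError("inputs must be non-negative")
    return round(developers * commits_per_day * working_days)


if __name__ == "__main__":
    for team_size in (10, 50, 200):
        print(f"{team_size:>4} developers -> {annual_commits(team_size):,} commits/year")
```

Under these assumptions, even a 10-developer team produces 6,900 commits a year and a 200-developer organization produces 138,000. Each one is a change that security review has to account for.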

This pre-existing challenge is now being supercharged by the integration of generative AI into developer workflows. Major technology companies report that 20% to 30% of their new code is now generated by AI assistants, a figure that signals a fundamental shift across the industry. This acceleration, however, comes with a critical security trade-off. Recent studies indicate that an alarming 45% of the AI-generated code they tested contains security weaknesses, including common and dangerous vulnerability classes cataloged in the OWASP Top 10. Worse, this rate has shown no sign of improving with newer, more advanced models. As development velocity climbs, the volume of potential security flaws injected into software ecosystems rises with it, and the speed of innovation is outpacing the capacity for effective security oversight.
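A rough worked estimate shows how the two figures compound. It assumes, purely for illustration, that the 45% rate measured in those studies applies uniformly to every AI-generated change; real codebases, review practices, and tooling will shift the actual number.

```latex
% p_AI   : fraction of new code generated by AI assistants (20 to 30 percent, from the text)
% p_flaw : fraction of AI-generated code with a security weakness (45 percent, from the text)
% p_weak : resulting fraction of ALL new code carrying an AI-introduced weakness
\[
  p_{\text{weak}} = p_{\text{AI}} \cdot p_{\text{flaw}}
\]
\[
  0.20 \cdot 0.45 = 0.09
  \qquad \text{to} \qquad
  0.30 \cdot 0.45 = 0.135
\]
```

In other words, roughly 9% to 13.5% of all new code could carry an AI-introduced weakness before any review. Because this is a fixed share, the absolute number of flaws grows in step with total code volume.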

When Speed Overrides Security

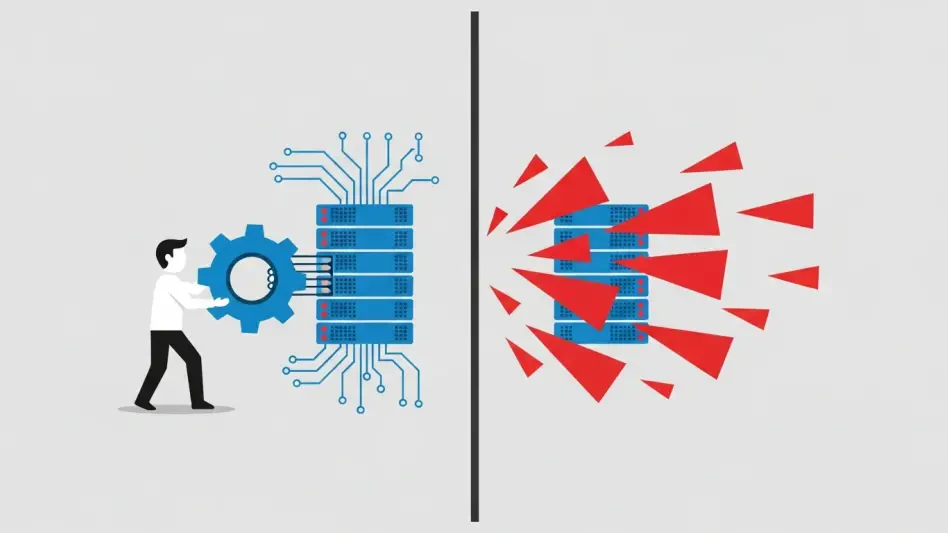

At the core of this escalating risk is the persistent and often unbalanced conflict between development speed and code quality. Recent industry research reveals a troubling trend: approximately 63% of organizations admit to knowingly releasing code to production without comprehensive testing. The main driver is intense, unrelenting pressure to deliver new features and updates faster than the competition. As a result, quality assurance is frequently truncated: teams verify basic functionality, while subtler but critical security tests are deferred or skipped entirely. These neglected checks include validations for data isolation failures, insecure direct object references, and server misconfigurations, vulnerabilities that are not immediately obvious but that attackers can exploit with severe consequences.
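To make one of these skipped checks concrete, the Python sketch below shows an insecure direct object reference (IDOR) and its fix. The `Invoice` record, the in-memory `INVOICES` store, and all function names are hypothetical stand-ins for a real data layer; the point is the missing ownership check, not the storage.

```python
from dataclasses import dataclass


class Forbidden(Exception):
    """Raised when a user requests a record they are not allowed to see."""


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    owner_id: int
    amount_cents: int


# Hypothetical in-memory store standing in for a database table.
INVOICES = {
    1: Invoice(invoice_id=1, owner_id=100, amount_cents=4_999),
    2: Invoice(invoice_id=2, owner_id=200, amount_cents=12_500),
}


def get_invoice_insecure(current_user_id: int, invoice_id: int) -> Invoice:
    # VULNERABLE (IDOR): trusts the client-supplied ID and never checks
    # ownership, so any logged-in user can read any invoice by changing the ID.
    return INVOICES[invoice_id]


def get_invoice(current_user_id: int, invoice_id: int) -> Invoice:
    invoice = INVOICES.get(invoice_id)
    # Fixed: enforce ownership. "Missing" and "not yours" raise the same
    # error so responses do not reveal which invoice IDs exist.
    if invoice is None or invoice.owner_id != current_user_id:
        raise Forbidden(f"invoice {invoice_id} is not accessible")
    return invoice


def test_user_cannot_read_another_users_invoice() -> None:
    # The kind of fast security test that gets skipped under deadline pressure.
    try:
        get_invoice(current_user_id=100, invoice_id=2)
    except Forbidden:
        return
    raise AssertionError("cross-tenant read was allowed")
```

Pointed at `get_invoice_insecure` instead, the same test would fail, which is exactly the signal a truncated QA cycle never sees.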

The tangible cost of prioritizing speed over security was starkly demonstrated by the data exposure incident at Vanta, a company specializing in compliance and security automation. The incident, which affected hundreds of its customers, was not the result of a sophisticated external hack; it stemmed from a single erroneous internal code change that was deployed to production. It illustrates the central argument: even at a company built on the premise of trust and security, a minor oversight in development and testing can lead to severe reputational damage and customer churn. Customer reactions documented across public forums underscored how high users' expectations for data security are, and how quickly financial and brand-related fallout follows when those expectations are not met. Internal code hygiene is as critical as external defense.

Actionable Strategies for a New Era of Development

To navigate this transformed landscape, security leaders must champion a paradigm shift that elevates software testing from a procedural quality assurance function to a non-negotiable, front-line security control. This begins with widespread adoption of a “shift-left” approach: integrating robust security controls and automated testing much earlier in the development lifecycle. In an era of AI-accelerated development, testing cannot remain a stage-gate. It must be a continuous, automated process that runs on every commit and pull request, so that vulnerabilities are caught and fixed when they are introduced rather than days or weeks later. Organizations must also realign their performance metrics so that speed and security are treated as complementary goals. Instead of measuring success solely by release frequency, teams should also be evaluated on KPIs such as declining vulnerability density and a low defect escape rate, which rewards shipping safe, secure releases.
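The two KPIs named above can be computed from data most teams already collect. The Python sketch below uses common definitions: vulnerability density as confirmed vulnerabilities per thousand lines of code (KLOC), and defect escape rate as the share of all known defects first found in production. Organizations define both slightly differently, and the `ReleaseStats` fields and the `is_improving` helper are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReleaseStats:
    """Per-release counts; field names are illustrative, not a standard schema."""
    lines_of_code: int          # size of the code base being measured
    vulnerabilities: int        # confirmed security findings
    defects_pre_release: int    # defects caught in CI, code review, or QA
    defects_in_production: int  # defects first reported after release


def vulnerability_density(stats: ReleaseStats) -> float:
    """Confirmed vulnerabilities per thousand lines of code (KLOC)."""
    if stats.lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return stats.vulnerabilities / (stats.lines_of_code / 1000)


def defect_escape_rate(stats: ReleaseStats) -> float:
    """Fraction of all known defects that escaped to production."""
    total = stats.defects_pre_release + stats.defects_in_production
    return stats.defects_in_production / total if total else 0.0


def is_improving(previous: ReleaseStats, current: ReleaseStats) -> bool:
    """True if density fell and the escape rate did not rise between releases."""
    return (vulnerability_density(current) < vulnerability_density(previous)
            and defect_escape_rate(current) <= defect_escape_rate(previous))


if __name__ == "__main__":
    before = ReleaseStats(250_000, 30, 90, 10)
    after = ReleaseStats(260_000, 26, 95, 5)
    print(f"density: {vulnerability_density(before):.2f} -> "
          f"{vulnerability_density(after):.2f} per KLOC")
    print(f"escape rate: {defect_escape_rate(before):.0%} -> "
          f"{defect_escape_rate(after):.0%}")
    print("improving:", is_improving(before, after))
```

Tracking both numbers together matters: a falling density with a rising escape rate would suggest that fewer issues are being found, not that fewer exist.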

Modernizing the underlying testing infrastructure and fostering a culture of shared responsibility are the final, crucial steps needed to keep pace. Traditional manual testing cannot keep up with the volume and velocity of AI-assisted code production, so organizations need to invest in automated, scalable testing frameworks. These tools provide the fast, comprehensive feedback a high-speed development environment requires without sacrificing resilience. Security must also become a collective responsibility, breaking down the operational silos between development, quality assurance, and security teams. Closer cross-functional collaboration keeps the testing regimen holistic, covering not only functionality and performance but also security-focused practices such as static and dynamic code scanning, threat modeling, and configuration validation. The result is a durable culture of “code hygiene,” in which prevention through rigorous, early testing is the most effective defense.
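Configuration validation is one of the easiest of these checks to automate on every commit. The Python sketch below flags a few common misconfigurations in a settings dictionary. The keys (`debug`, `tls_enabled`, `cors_allowed_origins`, `public_buckets`) and the rules are hypothetical examples, not the schema of any particular framework or cloud provider.

```python
from typing import Any, Mapping


def validate_config(config: Mapping[str, Any]) -> list[str]:
    """Return human-readable findings; an empty list means no issues found."""
    findings: list[str] = []
    # Debug modes often expose stack traces and internal state to users.
    if config.get("debug", False):
        findings.append("debug mode is enabled")
    # A missing TLS flag is treated as insecure rather than assumed safe.
    if not config.get("tls_enabled", False):
        findings.append("TLS is disabled or not configured")
    # A wildcard origin lets any website call the API from a browser.
    if "*" in config.get("cors_allowed_origins", []):
        findings.append("CORS allows any origin ('*')")
    # Publicly readable storage is a frequent cause of data exposure.
    if config.get("public_buckets", False):
        findings.append("storage buckets are publicly readable")
    return findings


def ci_exit_code(config: Mapping[str, Any]) -> int:
    """Print findings and return a non-zero code so a CI step fails the build."""
    findings = validate_config(config)
    for finding in findings:
        print(f"config check failed: {finding}")
    return 1 if findings else 0
```

A CI job could load the deployment settings and pass the result of `ci_exit_code` to `sys.exit`, so that a misconfiguration blocks the merge instead of reaching production.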