In an era where cyber threats evolve at an alarming rate, the promise of artificial intelligence (AI) as a shield against digital vulnerabilities has captured the attention of organizations worldwide. With high-profile breaches making headlines and the complexity of attacks increasing, many have turned to AI-driven tools hoping for a silver bullet that can detect and neutralize threats before they strike. Yet, amidst this enthusiasm, a critical question lingers: can AI truly be relied upon to provide comprehensive protection in the ever-shifting landscape of cybersecurity? This technology, while groundbreaking in its ability to identify software flaws at scale, comes with limitations that could leave systems exposed if not addressed. Drawing from expert insights, including those of Rob Joyce, a former senior cybersecurity official, this discussion delves into the dual nature of AI as both a powerful ally and a potential liability in safeguarding digital infrastructure against sophisticated threats.

The Power and Promise of AI in Vulnerability Detection

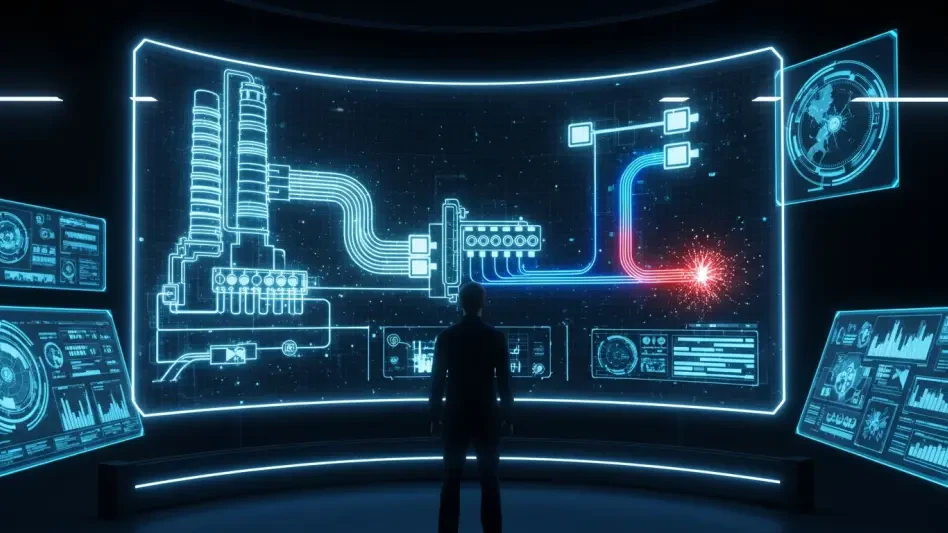

AI’s capacity to detect software vulnerabilities has redefined the boundaries of cybersecurity, surpassing human capabilities in both speed and scale. Tools powered by this technology, such as advanced AI agents, have demonstrated remarkable prowess by scanning networks tirelessly and identifying flaws that might elude even seasoned professionals. Their ability to process vast amounts of data in real time offers an unprecedented advantage, allowing organizations to uncover hidden weaknesses before they can be exploited. This transformative potential has positioned AI as a cornerstone of modern defense strategies, with many companies integrating these systems to bolster their security posture. However, while the detection capabilities are impressive, they represent only the first step in a much larger process. The true challenge lies in translating these findings into actionable protection, a hurdle that many organizations are unprepared to overcome given existing resource constraints and systemic inefficiencies.

Beyond detection, the sheer volume of vulnerabilities unearthed by AI can be overwhelming, often outpacing the ability to respond effectively. Large tech firms with dedicated teams may manage to keep up, but smaller entities or those reliant on outdated infrastructure frequently struggle to implement patches swiftly. This disparity creates a dangerous gap where identified flaws remain unaddressed, leaving systems vulnerable to exploitation by malicious actors who are quick to capitalize on such weaknesses. The rapid pace of AI-driven discovery, while a testament to its strength, also amplifies the risk of a crisis if remediation efforts lag behind. Experts caution that without a robust framework for addressing these issues, the very technology designed to protect could inadvertently heighten exposure to threats, underscoring the need for a balanced approach that prioritizes both identification and resolution in equal measure.

The Hidden Risks of AI Integration in Corporate Systems

While AI offers undeniable benefits in detecting vulnerabilities, its integration into corporate environments introduces new risks that cannot be ignored. When connected to sensitive systems like email platforms or internal knowledge bases, AI tools can become unintended gateways for cybercriminals seeking to infiltrate networks. Sophisticated attackers, including state-sponsored groups driven by financial gain, have already begun exploiting these technologies to access proprietary data, fueling schemes like ransomware and extortion. This emerging threat highlights a critical oversight in the rush to adopt AI: the potential for these tools to be weaponized against the very organizations they are meant to protect. As such, securing AI systems themselves must become a priority, ensuring they are not transformed into liabilities through inadequate safeguards or oversight.

Adding to this complexity is the reality that AI’s capabilities are a double-edged sword, often amplifying existing weaknesses within an organization’s infrastructure. For instance, legacy software or unsupported systems, common in many industries, exacerbate the challenges of timely remediation even as AI uncovers flaws at an accelerated rate. This mismatch between detection and response can create a perfect storm, where vulnerabilities pile up faster than they can be addressed, inviting catastrophic breaches. Experts warn of scenarios akin to devastating natural disasters, where systems could collapse under the weight of unmitigated risks before stronger defenses can be rebuilt. Addressing this requires not just technological investment, but a cultural shift toward proactive maintenance and vigilance, ensuring that the adoption of AI does not outstrip the capacity to manage its revelations or secure its presence within critical operations.

Striking a Balance for Future Security

The insights shared by cybersecurity veterans make it evident that AI has reshaped the battle against digital threats with its unparalleled ability to spot vulnerabilities. Yet the journey from detection to protection reveals significant gaps, as many organizations grapple with outdated systems and limited resources to patch flaws swiftly. The warnings about AI tools becoming entry points for cyberattacks serve as a stark reminder of the technology’s dual nature, demanding rigorous safeguards to prevent exploitation by malicious actors.

Looking ahead, the path to leveraging AI effectively in cybersecurity hinges on addressing these shortcomings with actionable strategies. Prioritizing rapid remediation processes must go hand in hand with AI deployment, ensuring that identified vulnerabilities are not left unresolved. Equally critical is the fortification of AI systems themselves, protecting them from becoming conduits for breaches. By fostering a culture of preparedness and investing in both technology and training, organizations can harness AI’s potential while mitigating its risks, paving the way for a more resilient digital future.