In cybersecurity, insider threats remain among the most elusive and damaging risks organizations face. They often go unnoticed because they are subtle and because the people involved have trusted access. These threats come from employees or contractors with legitimate system access, and they can cause serious breaches through either deliberate malice or unintentional error. A key obstacle for security teams is the scarcity of realistic data for training detection systems, which leaves many organizations exposed. Chimera, an AI-driven tool, targets this gap by using large language models (LLMs) to simulate both benign and malicious employee behavior in enterprise settings. By generating synthetic but realistic datasets, it offers a promising way to improve how insider threats are identified and mitigated. This article examines the tool’s capabilities, what it reveals about current detection systems, and how it could reshape cybersecurity defenses.

Unveiling Chimera’s Innovative Approach

Overcoming the Data Drought in Cybersecurity

Chimera addresses a critical shortage of usable data for insider threat detection, a problem that has long hindered the development of effective security measures. Public datasets such as CERT lack the depth and variety needed to reflect real employee behavior, while actual organizational logs are rarely shared because of privacy concerns. Chimera instead uses LLM-based multi-agent simulations to produce ChimeraLog, a dataset of approximately 25 billion log entries covering one simulated month. The logs span activities such as login events, email exchanges, and file operations, and they include 15 distinct insider attack scenarios, ranging from intellectual property theft to sabotage. Because organizations can tailor the simulation to their own infrastructure and hierarchy, the generated data reflects the environment in which a detection model will actually be deployed.
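To make the shape of such a dataset concrete, here is a minimal sketch of a unified log record covering the three activity types mentioned above, with a ground-truth label naming the attack scenario (or none for benign activity). The `LogEntry` class, its field names, and the `"ip_theft"` label are hypothetical illustrations, not ChimeraLog’s actual schema.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class LogEntry:
    """One event in a hypothetical unified activity log (not ChimeraLog's real schema)."""
    timestamp: datetime
    user: str
    event_type: str                              # assumed values: "login", "email", "file"
    details: dict = field(default_factory=dict)  # event-specific fields, e.g. a file path
    scenario: Optional[str] = None               # ground-truth attack label; None = benign


def event_counts(entries: list[LogEntry]) -> Counter:
    """Count how many entries of each event type the log contains."""
    return Counter(e.event_type for e in entries)


def malicious_fraction(entries: list[LogEntry]) -> float:
    """Share of entries labeled with an attack scenario (0.0 for an empty log)."""
    if not entries:
        return 0.0
    return sum(e.scenario is not None for e in entries) / len(entries)


log = [
    LogEntry(datetime(2024, 3, 4, 9, 2), "alice", "login", {"host": "ws-17"}),
    LogEntry(datetime(2024, 3, 4, 9, 15), "alice", "email", {"to": "bob"}),
    LogEntry(datetime(2024, 3, 4, 23, 40), "mallory", "file",
             {"path": "/designs/roadmap.pdf", "op": "copy"},
             scenario="ip_theft"),  # hypothetical label for an IP-theft scenario
]
print(event_counts(log))
print(malicious_fraction(log))
```

Keeping every event type in one record with a per-entry label is one convenient layout for supervised detectors; a real dataset may well store each log source separately.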

The value of Chimera’s approach lies less in sheer volume than in the realism it brings to training environments. Where older datasets oversimplify human actions, Chimera reproduces organizational dynamics in sectors such as technology, finance, and healthcare. Each virtual employee is assigned a role, responsibilities, and personality traits, and some are designated as insiders who carry out covert malicious activities alongside routine tasks. The result is a testing ground that mirrors real-world conditions more closely than earlier benchmarks do. Because simulations run locally without exposing sensitive information, organizations can build robust detection systems without risking data leaks. This balance of realism and privacy better prepares them for the unpredictable nature of insider threats.
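The persona idea can be sketched as a small data structure plus a daily planner in which insiders hide one covert action among routine work. Chimera drives its agents with LLMs; this sketch swaps that for seeded random sampling purely to illustrate the structure and the interleaving. The `Persona` class, `daily_plan` function, and task lists are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Persona:
    """Hypothetical virtual-employee profile; fields are illustrative only."""
    name: str
    role: str
    traits: tuple[str, ...]
    is_insider: bool = False


# Assumed task vocabularies; an LLM agent would generate these dynamically instead.
ROUTINE = ["check email", "edit report", "attend meeting", "review code"]
COVERT = ["copy design files to USB", "forward documents to personal email"]


def daily_plan(p: Persona, n_tasks: int, rng: random.Random) -> list[tuple[str, bool]]:
    """Return (task, is_malicious) pairs for one simulated workday."""
    plan = [(rng.choice(ROUTINE), False) for _ in range(n_tasks)]
    if p.is_insider:
        # Insert a single covert action at a random position so it blends into routine work.
        plan.insert(rng.randrange(len(plan) + 1), (rng.choice(COVERT), True))
    return plan


rng = random.Random(0)  # seeded so the simulated day is reproducible
alice = Persona("alice", "engineer", ("diligent",))
mallory = Persona("mallory", "analyst", ("disgruntled",), is_insider=True)
print(daily_plan(alice, 5, rng))
print(daily_plan(mallory, 5, rng))
```

Recording the `is_malicious` flag alongside each task is what later lets the generated logs carry ground-truth labels.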

Highlighting Gaps in Existing Detection Systems

When evaluated on ChimeraLog, many current detection models show clear shortcomings, reflecting the limits of training on less realistic datasets. Models that performed strongly on older benchmarks such as CERT suffered a notable drop, with F1-scores falling to around 0.83 on Chimera’s more intricate data. The decline is most pronounced in settings such as the finance scenario, where attack patterns are subtle and harder to distinguish from normal activity. These results show that detection systems need to move beyond outdated benchmarks and adapt to context-rich environments like those Chimera simulates, and that training methodologies should be rethought to better prepare for real-world insider threats.
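F1 is the harmonic mean of precision and recall, which is why it, rather than accuracy, is the usual yardstick here: malicious activity is rare, so a detector that flags nothing can still look highly accurate. The sketch below computes both from confusion counts. The counts are purely illustrative and are not taken from the Chimera evaluation.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Precision, recall, and F1 from confusion counts; 0.0 where a ratio is undefined."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    """Fraction of all decisions that were correct."""
    return (tp + tn) / (tp + fp + fn + tn)


# Illustrative counts: 1,000 user-days, 10 malicious, and a detector that flags nothing.
print(accuracy(tp=0, fp=0, fn=10, tn=990))     # 0.99
print(precision_recall_f1(tp=0, fp=0, fn=10))  # (0.0, 0.0, 0.0)

# A detector that catches 8 of 10 insiders but raises 2 false alarms.
print(precision_recall_f1(tp=8, fp=2, fn=2))
```

The first detector scores 99% accuracy yet an F1 of zero, so F1 exposes missed insiders that accuracy hides.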

Beyond identifying weaknesses, Chimera shows how detection systems can improve through exposure to diverse, realistic data. The performance gap suggests that many models are overfitted to simpler datasets and fail to generalize across varied scenarios. Models trained on ChimeraLog, by contrast, adapt better, particularly in recognizing high-level behavioral anomalies such as irregular working hours or unusual file access patterns. Comprehensive, scenario-specific training data therefore appears to improve a model’s ability to detect threats across different contexts. For security practitioners, this is an argument for using tools like Chimera to refine detection capabilities so that risks are caught before they escalate into full-blown incidents.
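As an illustration of the "irregular working hours" signal, here is a simple rule-based feature: each user’s share of events falling outside an assumed 08:00 to 18:00 weekday window, with users above a fixed threshold flagged for review. This is a hypothetical baseline for intuition only, not one of the models evaluated on ChimeraLog; the window, the 0.3 threshold, and the function names are assumptions. Unusual file access would need a separate feature.

```python
from collections import defaultdict
from datetime import datetime


def off_hours_fraction(events: list[tuple[str, datetime]],
                       start_hour: int = 8, end_hour: int = 18) -> dict[str, float]:
    """Per-user share of events on weekends or outside [start_hour, end_hour)."""
    total: dict[str, int] = defaultdict(int)
    off: dict[str, int] = defaultdict(int)
    for user, ts in events:
        total[user] += 1
        if ts.weekday() >= 5 or not (start_hour <= ts.hour < end_hour):
            off[user] += 1
    return {u: off[u] / total[u] for u in total}


def flag_users(fractions: dict[str, float], threshold: float = 0.3) -> list[str]:
    """Users whose off-hours share exceeds a fixed, untuned threshold."""
    return sorted(u for u, frac in fractions.items() if frac > threshold)


events = [
    ("alice", datetime(2024, 3, 4, 9, 5)),      # Monday morning
    ("alice", datetime(2024, 3, 4, 14, 30)),    # Monday afternoon
    ("mallory", datetime(2024, 3, 4, 10, 0)),   # Monday morning
    ("mallory", datetime(2024, 3, 4, 23, 45)),  # Monday, late night
    ("mallory", datetime(2024, 3, 9, 2, 10)),   # Saturday, early morning
]
fractions = off_hours_fraction(events)
print(flag_users(fractions))  # ['mallory']
```

A fixed window ignores legitimate shift work, which is exactly the kind of context-dependent nuance that realistic, sector-specific training data is meant to teach a learned model.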

Shaping the Future of Cybersecurity Defenses

Pioneering AI-Driven Solutions for Threat Detection

Chimera is part of a broader shift toward AI-driven simulation as a remedy for persistent data scarcity in cybersecurity. By automating the generation of scalable, realistic datasets, it supports detection systems that recognize complex behavioral patterns holding across organizational settings rather than only within one. Moving beyond static, outdated datasets that miss the nuances of modern threats is essential for this kind of progress. Whether the task is detecting unusual access to sensitive files or flagging erratic work schedules, Chimera’s simulations provide a foundation for detection mechanisms that can adapt as threats change. If the trend continues, AI-powered tools may help defenses keep pace with the evolving tactics of insiders.

Chimera’s approach also encourages a more proactive stance across sectors. Because its simulations cover environments ranging from technology firms to healthcare providers, they offer a versatile platform for testing and refining detection strategies against industry-specific challenges. Plans to extend Chimera to additional organization types, such as research institutions, would broaden its reach further. With community-driven enhancements and more advanced protocols, the tool could become a collaborative resource for the wider cybersecurity community. For organizations trying to stay ahead of internal risks, adopting AI-driven solutions like this could mark the difference between vulnerability and resilience, enabling defenses that anticipate threats rather than merely respond to them.

Safeguarding Data While Advancing Capabilities

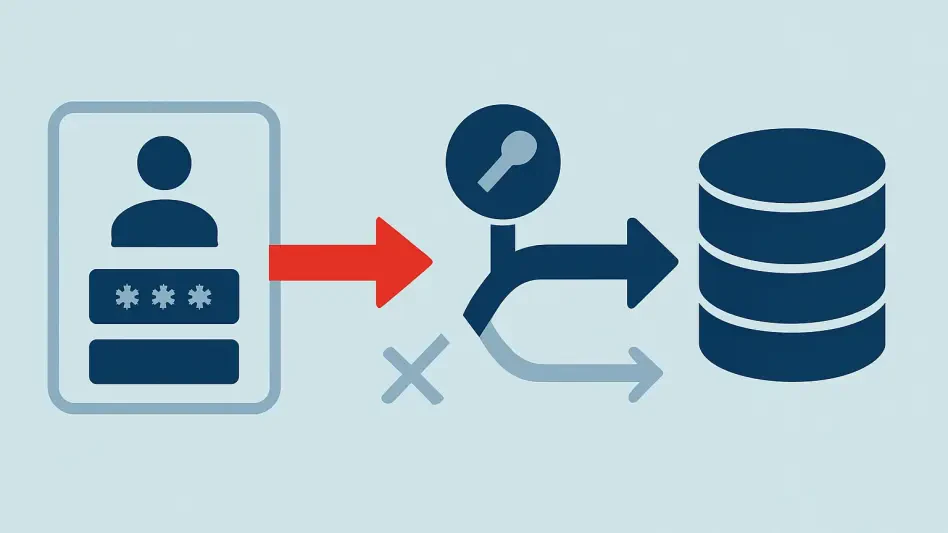

A standout feature of Chimera is its commitment to privacy, in line with the industry’s growing emphasis on secure data handling. Because simulations run locally, training and testing can take place without exposing sensitive organizational information. This matters in an era when data breaches can have severe financial and reputational consequences. Combined with the stronger cross-scenario generalization of models trained on ChimeraLog, local generation shows that realistic, diverse data can improve detection without compromising confidentiality. That balance makes Chimera a model for advancing cybersecurity within the boundaries of data protection.

Chimera’s design reflects the dual need for innovation and caution in cybersecurity. Local deployment and customized datasets address long-standing privacy concerns, while the ability to simulate intricate attack scenarios extends what detection systems can be trained and tested against. The performance improvements of models trained on ChimeraLog underscore the value of investing in realistic training environments. Going forward, the cybersecurity community should prioritize integrating such tools into standard practice and explore ways to apply them across varied industries. Collaboration to refine and expand these simulations could keep defenses robust as insider threats evolve, offering a sustainable path to stronger, more adaptive security frameworks.