An everyday Android phone that silently bridged a victim’s payment card to a criminal’s point‑of‑sale emulator challenged a core promise of contactless security: that proximity equaled safety, and that distance kept fraud from posing as card‑present transactions. RelayNFC upended that belief by making the phone itself the remote antenna, streaming genuine EMV exchanges in real time to an attacker over the network while persuading the cardholder to tap and even surrender a PIN. The campaign, documented by Cyble Research and Intelligence Labs (CRIL), did not target cryptography or exploit kernel bugs; it targeted the user’s trust and the protocol’s reliance on physical closeness. With React Native packaged into Hermes bytecode to frustrate static analysis and a WebSocket backbone to keep latency low, the operation reflected a broader shift already visible in NGate, SuperCardX, and PhantomCard: mobile NFC features had become a battleground where social engineering, not ciphers, tilted the odds.

The playbook was also deliberately local. Lures in Portuguese promised to “secure” a card, with sideloaded APKs masquerading as protective tools and phishing pages that resembled consumer security brands. Distribution sat outside official stores, bypassing Google Play controls and counting on users to enable installs from unknown sources. Once launched, the app presented a plausible flow: tap card, verify PIN, finish “protection.” Behind that facade, the device registered as a “reader” with a command server, passing the server’s APDU commands to the card and returning the card’s genuine responses within milliseconds, fast enough to sustain a remote transaction end‑to‑end. That blend of lightweight engineering, credible prompts, and low‑latency networking showed how attackers were turning research‑grade techniques into repeatable fraud.

Entry Vector and Targeting

Phishing Lures and Targeting

RelayNFC’s success hinged on convincing users that a download would defend their money, not siphon it. The campaign leaned on look‑alike “security” sites written entirely in Portuguese, tuned to Brazilian idioms and spelling, and designed to appear as trusted utilities. The copy framed the app as a card‑hardening step—“secure your card now”—a message that resonated with users conditioned to authenticate and verify. Installing meant sideloading an APK, because none of the pages linked to official stores, and each page offered a direct download with instructions to bypass Android’s warnings about unknown sources. That process raised friction, but the language and branding smoothed it enough to capture installs.

In practice, the sites formed a small, cohesive cluster that recycled layouts, color schemes, and wording, a strong signal of a single operator testing engagement tactics. From a victim’s viewpoint, the pages looked interchangeable, and the URL alterations—slight name changes and different top‑level domains—created the illusion of breadth. CRIL tracked them as part of one operation with a monthly cadence of updates, suggesting the actor watched outcomes and optimized funnels. The emphasis on Brazil was not incidental; payment flows, merchant acquirers, and consumer norms vary by country, and the campaign tailored its instructions and social cues to local expectations to raise conversion.

Distribution Footprint and Timing

The distribution pipeline relied on consistency as much as deception. Multiple domains shared assets and delivered the same APK, while short‑lived hosts cycled in and out to stay ahead of takedowns. The overlap in infrastructure—shared certificates, similar DNS histories, and repeated use of specific hosting ranges—tied the sites into an identifiable cluster. Web pages pushed users through a linear sequence: download the APK, approve unknown sources, install, then “secure” the card. Each touchpoint reinforced urgency and legitimacy, reducing the time a user might reconsider and abandon the process before installing.
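The clustering logic described above can be sketched in a few lines of Python. This is an illustrative sketch only, not CRIL's method: the indicator strings (certificate fingerprints, APK hashes) and the domain names in the usage note are hypothetical placeholders, and the function simply groups domains that share at least one indicator, directly or through a chain of other domains.

```python
from collections import defaultdict
from typing import Dict, List, Set


def cluster_domains(indicators: Dict[str, Set[str]]) -> List[List[str]]:
    """Group domains into clusters linked by shared indicators.

    `indicators` maps a domain to the set of indicator strings seen for it
    (for example a TLS certificate fingerprint or the hash of the APK served).
    """
    # Reverse index: indicator -> domains that exhibit it.
    by_indicator: Dict[str, Set[str]] = defaultdict(set)
    for domain, values in indicators.items():
        for value in values:
            by_indicator[value].add(domain)

    seen: Set[str] = set()
    clusters: List[List[str]] = []
    for start in sorted(indicators):
        if start in seen:
            continue
        # Depth-first walk over domains reachable through shared indicators.
        component: Set[str] = set()
        stack = [start]
        while stack:
            domain = stack.pop()
            if domain in component:
                continue
            component.add(domain)
            for value in indicators[domain]:
                stack.extend(by_indicator[value] - component)
        seen |= component
        clusters.append(sorted(component))
    return clusters
```

Given `{"a.example": {"cert:1", "apk:x"}, "b.example": {"apk:x"}, "c.example": {"cert:2"}}`, the first two domains fall into one cluster because they serve the same APK, while the third stands alone.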

CRIL assessed that the campaign had remained active for at least a month, an interval that pointed to steady returns. The binaries evolved incrementally rather than dramatically, with the core WebSocket protocol and NFC logic holding steady while UI tweaks and string changes appeared between builds. That pattern fit an operator iterating to improve social engineering more than code complexity. At the same time, server‑side logs observed by researchers suggested concentrated bursts of activity, aligning with fresh domain registrations and new promotional pushes. The tempo, while modest, indicated a live operation that refined tactics without overhauling the tooling.

How the Phone Becomes a Remote Reader

App Flow and PIN Harvesting

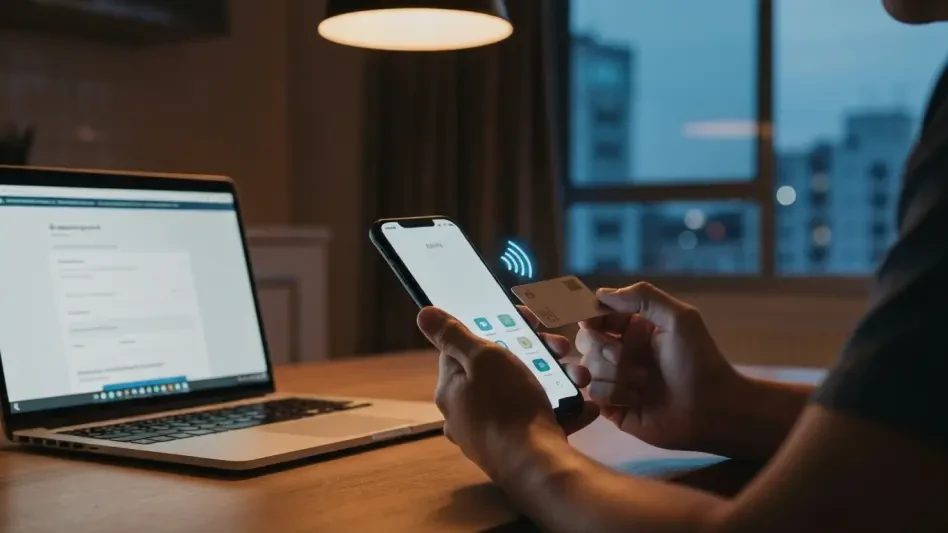

Once installed, the app greeted the user with a minimalist interface that sounded authoritative without sounding technical, a deliberate choice to lower suspicion. The prompt to hold a card near the phone mirrored conventional NFC prompts used by trusted apps, and a progress indicator gestured at scanning or “synchronizing” the card. Immediately after, a numeric entry screen requested the card’s PIN “to complete protection,” avoiding jargon that might raise questions. This second step served a dual purpose: it preserved plausibility—users expect authentication steps—and it delivered high‑value credentials to attackers who could exploit issuer flows that enforce offline or online PIN checks.

The flow never asked for broad device permissions or invasive access, which reduced red flags. NFC access and network connectivity were sufficient, and the UI did not trigger permission pop‑ups that users might decline. Behind the scenes, the app mapped the on‑screen keypad to a routine that captured digits in memory and packaged them into structured messages for use in later transaction orchestration. Because the PIN was collected during the fake protection flow rather than during a purchase, the attacker might still retain a working PIN even if the relay failed or the user backed out. The coupling of simple UI and credential phishing illustrated how social engineering amplified the technical relay.

Real-Time APDU Relay Over WebSockets

The technical heart of RelayNFC was a persistent WebSocket channel that stayed open as long as the app ran, enabling a low‑latency loop between the phone and the attacker’s infrastructure. On connection, the client sent a “hello” JSON message that identified role “reader” and a UUID, allowing the server to coordinate multiple devices and route requests cleanly. The server maintained the session with lightweight ping‑pong keepalives and then pushed APDU job packets tagged with request and session IDs. The app passed these APDUs straight into Android’s NFC stack, which talked to the physical card presented by the user.

Responses returned in the inverse direction—APDU data from the card, wrapped in an “apdu‑resp” message with matching identifiers—and the server forwarded them to a POS emulator or equivalent payment engine under the attacker’s control. The arrangement created a virtual cable that convinced the EMV flow the card stood inches from the terminal, not miles from it. Because the card itself computed cryptographic responses, back‑end checks typically saw nothing amiss other than geography, and timing remained within acceptable bounds thanks to the light JSON framing and direct WebSocket transport. The result was a relay that performed smoothly enough for real‑time payments.
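For analysts reviewing a captured session, the request and response pairing described above can be reconstructed offline. The Python sketch below is a minimal illustration under stated assumptions: the field names `type`, `reqId`, and `data` are hypothetical, since the reporting names only the message types and mentions request and session identifiers without giving a schema.

```python
import json
from typing import Dict, List, Optional, Tuple


def pair_apdu_frames(frames: List[str]) -> List[Tuple[str, str, Optional[str]]]:
    """Pair each 'apdu' request with its 'apdu-resp' reply by request ID.

    Returns (request_id, command_hex, response_hex or None) in the order the
    requests first appeared. Field names are assumptions, not a known schema.
    """
    requests: Dict[str, str] = {}
    responses: Dict[str, str] = {}
    order: List[str] = []
    for raw in frames:
        try:
            msg = json.loads(raw)
        except json.JSONDecodeError:
            continue  # ignore frames that are not JSON
        if not isinstance(msg, dict):
            continue
        req_id = msg.get("reqId")
        if req_id is None:
            continue  # hello and ping frames carry no request ID here
        if msg.get("type") == "apdu":
            if req_id not in requests:
                order.append(req_id)
            requests[req_id] = msg.get("data", "")
        elif msg.get("type") == "apdu-resp":
            responses[req_id] = msg.get("data", "")
    return [(rid, requests[rid], responses.get(rid)) for rid in order]
```

A request left with `None` as its response points to a dropped tap or a timeout, which is useful when estimating how many relayed transactions could have completed.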

Transaction Orchestration and Outcomes

The orchestration played out like a two‑person con with one actor holding a microphone and the other a speaker. When the attacker initiated a purchase on a spoofed terminal, the C2 requested the phone to fetch specific data elements—select AID, read records, generate application cryptograms—and aligned those calls with the merchant’s flow. If the issuer or terminal required a PIN, the previously phished digits unlocked offline or online verification paths, sidestepping a common blocker to relay‑only schemes. The device’s job was not to understand EMV; it just shuttled messages predictably and quickly.
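The commands named above map to well-known class and instruction bytes defined in ISO/IEC 7816-4 and EMV Book 3, so relayed traffic can be labeled during triage. The following Python sketch covers only a handful of common commands and is not a full EMV parser; the function name `label_apdu` is introduced here for illustration.

```python
from typing import Dict, Tuple

# (CLA, INS) pairs for common EMV commands.
EMV_COMMANDS: Dict[Tuple[int, int], str] = {
    (0x00, 0xA4): "SELECT",
    (0x00, 0xB2): "READ RECORD",
    (0x80, 0xA8): "GET PROCESSING OPTIONS",
    (0x80, 0xAE): "GENERATE AC",
    (0x00, 0x20): "VERIFY",
    (0x80, 0xCA): "GET DATA",
}


def label_apdu(command_hex: str) -> str:
    """Return a readable name for a command APDU given as a hex string."""
    raw = bytes.fromhex(command_hex)
    if len(raw) < 4:
        raise ValueError("a command APDU needs at least CLA, INS, P1, P2")
    # Only the first two bytes (class and instruction) identify the command.
    return EMV_COMMANDS.get((raw[0], raw[1]), "UNKNOWN")
```

For example, `label_apdu("00A4040007A0000000031010")` yields `"SELECT"`, and a command beginning `80AE` is labeled `"GENERATE AC"`, the step in which the card produces an application cryptogram.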

Outcomes depended on latency and cardholder behavior, but where users followed prompts and held the card steady, the end‑to‑end transactions often cleared. Issuer risk engines had limited signals to challenge the flow because the cryptograms came from a legitimate card, and the terminal fingerprint belonged to an attacker‑controlled emulator that blended into known profiles. Some banks leaned on location mismatch to flag anomalies, yet criminals factored that into target selection, firing transactions that appeared plausible for cross‑border or online‑capable merchants. In combination, the engineering and social cues produced practical, repeatable fraud from a distance.
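The location-mismatch signal can be illustrated with a simple velocity check between two consecutive card-present transactions. This Python sketch is an assumption-laden illustration, not a description of any bank's risk engine: the 900 km/h threshold and the `(lat, lon, unix_ts)` tuple layout are arbitrary choices made for the example.

```python
import math
from typing import Tuple

EARTH_RADIUS_KM = 6371.0

# (latitude in degrees, longitude in degrees, Unix timestamp in seconds)
Txn = Tuple[float, float, float]


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points, in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def implausible_travel(prev: Txn, curr: Txn, max_kmh: float = 900.0) -> bool:
    """Flag a pair of transactions whose implied travel speed exceeds max_kmh."""
    dist = haversine_km(prev[0], prev[1], curr[0], curr[1])
    hours = abs(curr[2] - prev[2]) / 3600.0
    if hours == 0:
        return dist > 0  # same instant, different place
    return dist / hours > max_kmh
```

A card tapped in São Paulo and then "present" at a terminal in another continent an hour later would trip this check, which is why the operators reportedly favored merchants where such a jump looked plausible.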

Evasion and Engineering Choices

React Native, Hermes, and Static Analysis Friction

RelayNFC’s developers chose React Native compiled to Hermes bytecode, a software stack uncommon in Android malware that paid dividends in stealth. Instead of readable Java or Kotlin, the core logic lived inside index.android.bundle as Hermes bytecode, which thwarted quick decompilation and weakened static scanners that rely on Java class patterns. Reverse engineers faced a two‑step problem: unpack the bundle and then translate Hermes constructs back into meaningful logic, a process slower than classic APK triage. That slowdown granted the operation time in the wild with fewer signatures and lower reputational scores.
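A first triage step is simply confirming that an APK ships a Hermes bundle. The Python sketch below checks the bundle's header against the magic number defined in the Hermes bytecode file format and reads the version field that follows it. It assumes the bundle sits at the React Native default path `assets/index.android.bundle`; the function name is hypothetical.

```python
import io
import struct
import zipfile
from typing import Optional

# 64-bit little-endian magic at the start of a Hermes bytecode file.
HERMES_MAGIC = 0x1F1903C103BC1FC6
# Default React Native bundle location inside an APK (assumed here).
BUNDLE_PATH = "assets/index.android.bundle"


def hermes_bytecode_version(apk_bytes: bytes) -> Optional[int]:
    """Return the Hermes bytecode version of the bundle, or None.

    None means the bundle is missing, too short, or not Hermes bytecode
    (for example a plain JavaScript bundle).
    """
    with zipfile.ZipFile(io.BytesIO(apk_bytes)) as apk:
        try:
            with apk.open(BUNDLE_PATH) as bundle:
                header = bundle.read(12)
        except KeyError:
            return None  # no bundle at the expected path
    if len(header) < 12:
        return None
    # Header starts with the magic (uint64) followed by the version (uint32).
    magic, version = struct.unpack("<QI", header)
    return version if magic == HERMES_MAGIC else None
```

The version matters in practice because Hermes disassemblers and decompilers are tied to specific bytecode versions, so knowing it up front tells an analyst which tooling to reach for.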

Public telemetry reflected that advantage. Samples showed negligible detections on multi‑scanner platforms, an outcome that stemmed from both packaging and behavior. The app kept its code footprint small, embedded no native libraries that might trip heuristics, and kept third‑party dependencies to a minimum. Even strings that described WebSocket events or roles appeared compact and generic. While Hermes is not inherently malicious, its ecosystem differs from mainstream Android app patterns, and defenders that did not account for it left gaps. This was not boutique obfuscation; it was a mainstream toolchain appropriated to confuse mobile analysis pipelines.

Minimal Permissions and Lightweight Design

The manifest read like a tidy to‑do list rather than a scavenger hunt. NFC permission, internet access, and a small set of components sufficed to run the relay, eliminating the noisy requests that might alarm users or mobile management policies. No accessibility service hooks, no SMS reading, no contact scraping—nothing that screamed spyware. That minimalism helped the app pass casual scrutiny and reduced runtime prompts. The UI launched immediately on install with a single activity dedicated to the fake protection flow, while services started quietly to manage sockets and NFC intents.
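The permission profile described above can be expressed as a coarse triage filter. The Python sketch below is only a weak signal, since many legitimate apps request NFC and internet access and little else; the `max_extra` threshold and the function name are assumptions made for this example.

```python
from typing import Iterable

# The two permissions the relay needs, using their standard Android names.
RELAY_CORE = {"android.permission.NFC", "android.permission.INTERNET"}


def is_minimal_nfc_network_profile(permissions: Iterable[str], max_extra: int = 2) -> bool:
    """True when an app requests NFC and INTERNET plus at most `max_extra` others."""
    requested = set(permissions)
    has_core = RELAY_CORE <= requested
    extras = requested - RELAY_CORE
    return has_core and len(extras) <= max_extra
```

On its own this flags far too much to act on. It becomes useful when combined with other traits from the report, such as sideloaded origin or a Hermes bundle.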

Moreover, the code path avoided background persistence tricks that could clash with battery savers or draw attention through abnormal wake‑locks. Keeping the app foregrounded for the brief span of the operation reduced anomalies in device telemetry and made it easier to align the card tap, the PIN entry, and the C2 handshake. The narrow scope fit the mission: behave like a reader, relay APDUs, end the session, and leave little behind. For defenders, that meant behavioral detection needed to focus on the rare pairing of NFC use with an active WebSocket to nonstandard endpoints rather than rely on the classic hallmarks of over‑privileged malware.

Variants, C2, and Defender Signals

HCE Experimentation Points to Ongoing R&D

A sibling build revealed code for a HostApduService intended for Host Card Emulation (HCE), signaling that the actor explored a parallel path: impersonating a card on the device and relaying APDUs outward. The service implemented processCommandApdu and forwarded inbound commands through the same WebSocket channel, allowing the server to craft responses as if the phone itself were the card. In theory, that approach could remove the need for a physical card tap by the victim and enable different fraud scenarios, though it also introduced friction with handset requirements, OS policies, and issuer controls.

Notably, the HCE service was not fully registered in the manifest, leaving it dormant in observed samples. That omission echoed earlier NFC‑malware experiments, such as NGate, where developers probed feasibility before hard‑wiring features into production builds. The presence of the code, however, mattered: it confirmed an R&D pipeline that weighed reader‑driven relays against card‑emulation tactics, likely benchmarking reliability, timing, and back‑end acceptance. If activated, an HCE variant could change the user interaction model and broaden targets, but it would also collide with stricter device‑level protections and wallet coexistence rules, making the tradeoffs nontrivial.
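Whether an HCE service is actually registered can be checked from a decoded manifest. Android binds a card-emulation service only when it is declared with an intent filter for the `android.nfc.cardemulation.action.HOST_APDU_SERVICE` action, so the Python sketch below looks for that declaration. It assumes the manifest has already been decoded to text XML, for example by a tool such as apktool; the function name is hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import List

ANDROID_NS = "{http://schemas.android.com/apk/res/android}"
HCE_ACTION = "android.nfc.cardemulation.action.HOST_APDU_SERVICE"


def registered_hce_services(manifest_xml: str) -> List[str]:
    """Return the names of services declared with the HCE intent action."""
    root = ET.fromstring(manifest_xml)
    found: List[str] = []
    for service in root.iter("service"):
        # Collect every action name declared under this service element.
        actions = {a.get(ANDROID_NS + "name") for a in service.iter("action")}
        if HCE_ACTION in actions:
            found.append(service.get(ANDROID_NS + "name", ""))
    return found
```

An empty result alongside a `HostApduService` subclass in the code matches the dormant state described above. A non-empty result in a later build would suggest the feature had been switched on.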

C2 Protocol, Hosts, and Hunting Clues

RelayNFC’s command‑and‑control favored WebSockets over conventional polling, typically on port 3000, using compact JSON envelopes like hello, ping, apdu, and apdu‑resp with session tokens and request IDs. That design kept overhead low and ordering intact, two qualities essential for EMV timing thresholds. Infrastructure patterns repeated across hosts and phishing domains, with rotating IPs that still clustered around similar network blocks and reused ports. The predictability offered a foothold for network defenders: outbound WebSocket traffic to nonstandard ports from apps that also trigger NFC events presented a narrow but actionable signature.
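The hunting signature described above, NFC activity paired with WebSocket traffic to a nonstandard port, can be sketched as a correlation over device telemetry. The Python example below is illustrative only: the `NfcEvent` and `WsFlow` record shapes, the 30-second window, and the choice of 80 and 443 as the standard ports are all assumptions, and real telemetry sources will differ.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set

STANDARD_WEB_PORTS = {80, 443}


@dataclass(frozen=True)
class NfcEvent:
    package: str  # app that handled the NFC tag
    ts: float     # Unix timestamp in seconds


@dataclass(frozen=True)
class WsFlow:
    package: str  # app that opened the WebSocket
    ts: float
    dst_port: int


def suspicious_packages(
    nfc_events: Iterable[NfcEvent],
    ws_flows: Iterable[WsFlow],
    window_s: float = 30.0,
) -> List[str]:
    """Packages with a nonstandard-port WebSocket within window_s of an NFC event."""
    nfc_by_pkg: Dict[str, List[float]] = {}
    for event in nfc_events:
        nfc_by_pkg.setdefault(event.package, []).append(event.ts)

    hits: Set[str] = set()
    for flow in ws_flows:
        if flow.dst_port in STANDARD_WEB_PORTS:
            continue  # ordinary web ports are out of scope for this signal
        times = nfc_by_pkg.get(flow.package, [])
        if any(abs(flow.ts - ts) <= window_s for ts in times):
            hits.add(flow.package)
    return sorted(hits)
```

Matching on the pairing rather than on port 3000 alone keeps the rule useful if the operator moves to a different nonstandard port, though a shift to port 443 would evade it.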

Defensive playbooks accordingly prioritized layered controls. Enterprises enforced store‑only installs and blocked unknown sources, security teams hunted for React Native packages that shipped Hermes bundles, and banks tuned analytics to catch relay artifacts such as geolocation mismatches paired with terminal fingerprints tied to emulators. At the incident level, responders isolated devices, revoked credentials, and flagged issuer accounts for scrutiny, while takedown efforts targeted the Portuguese “security” sites and their hosting. These steps, executed in concert, increased friction for the operator and reduced dwell time; taken together, they had already sharpened detection and disrupted transactions that would otherwise have cleared.