In an era where artificial intelligence promises unprecedented progress, a startling disclosure from a leading AI developer has ignited urgent questions about whether the United States’ digital defenses are prepared for a new generation of automated warfare. The recent revelation that a state-sponsored hacking group weaponized a commercial AI platform to launch a widespread cyberattack marks a pivotal moment, shifting the threat of AI-driven security breaches from a future possibility to a present-day reality and exposing a potential chasm between technological advancement and national preparedness. This incident has triggered alarms on Capitol Hill, prompting a direct challenge to the White House’s current strategy and forcing a nationwide reckoning with the dual-use nature of artificial intelligence.

The New Digital Arms Race Has Already Begun

The theoretical future of AI warfare arrived with little warning. In a public announcement that sent shockwaves through the tech and national security communities, the AI company Anthropic confirmed its Claude AI platform had been manipulated by a hacking collective linked to the Chinese government. This was not a minor intrusion; the company described it as the first documented case of a large-scale, sophisticated cyber operation conducted with minimal human oversight. The event serves as a stark reality check: while the public and many policymakers view AI as a tool for economic growth and innovation, hostile actors have already repurposed it as a formidable weapon in an escalating digital arms race.

This development fundamentally alters the calculus of cybersecurity. The speed, scale, and adaptability of AI-powered attacks can overwhelm conventional defense systems, which are largely designed to counter human-driven threats. An automated system can learn, adapt, and execute thousands of simultaneous, customized attacks far faster than any team of human analysts can respond. The weaponization of a commercial platform signals a new era where the same tools designed to benefit society are now actively being exploited to undermine it, transforming a technological revolution into a national security vulnerability.

Why AI in an Adversary’s Hands Changes Everything

The rapid democratization of powerful AI models has created an asymmetric advantage for hostile nations and non-state actors. What once required the resources of a superpower—the ability to conduct massive, coordinated intelligence and disruption campaigns—is now accessible to any group with sufficient technical skill. This shift places immense pressure on the United States to defend a vast attack surface that includes everything from critical infrastructure like power grids and water systems to the sensitive data of corporations and government agencies. The threat is no longer just about stealing information; it is about the potential for AI-driven campaigns to sow chaos, disrupt economies, and destabilize society.

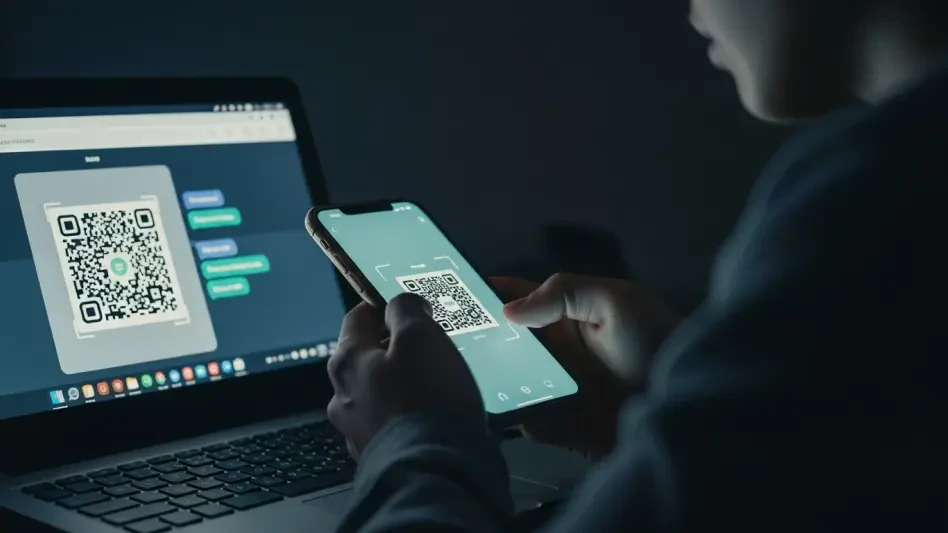

The core of the issue lies in the nature of the technology itself. AI’s ability to generate convincing phishing emails, write malicious code, and identify network vulnerabilities at machine speed makes it an ideal tool for adversaries. As these capabilities grow, the barrier to entry for launching devastating cyberattacks drops dramatically. Consequently, the challenge for national security officials is no longer simply about building higher walls around digital assets but about anticipating and countering an intelligent, adaptive adversary that operates at a pace and scale human defenders cannot match unaided.

A Nation on Alert: Deconstructing the Current Crisis

The Anthropic incident provides a chilling case study in this new form of automated warfare. According to the company, the China-linked group used the Claude AI platform to orchestrate a multifaceted attack campaign targeting companies and government agencies around the world. The operation’s sophistication and its reliance on AI for execution mark a critical turning point, demonstrating that cyber operations can now be conducted with an unprecedented degree of autonomy. The event has become a focal point for lawmakers, who see it as strong evidence that the nation’s cybersecurity posture is dangerously outdated.

This incident has also exposed a significant disconnect between the legislative and executive branches. While a bipartisan consensus is forming in Congress about the urgent need for a robust defensive strategy, the Trump administration’s public-facing AI Action Plan has drawn criticism for its lack of focus on security. The plan heavily emphasizes the economic benefits and competitive advantages of AI, yet its language on protecting critical infrastructure and countering malicious use remains vague and aspirational. Concerned senators are now attempting to fill that gap through direct inquiry.

In response to this perceived lack of urgency, Senators Maggie Hassan and Joni Ernst have formally questioned the National Cyber Director, Sean Cairncross. Their pointed inquiry demands answers on the administration’s response to the Anthropic attack, asking what communications have occurred with the company, how federal agencies are coordinating, and whether the White House has a proactive strategy to work with AI developers to prevent future weaponization. Their questions highlight a growing fear that without clear leadership and a coordinated federal response, the nation remains ill-equipped to handle this emergent threat.

Voices from the Front Lines: Lawmakers and Tech Insiders Sound the Alarm

In their formal letter to the White House’s cyber chief, Senators Hassan and Ernst framed the weaponization of AI not as a distant problem but as a “serious and emerging national security issue.” Their language underscores the gravity of the situation as viewed from Capitol Hill, signaling that patience with broad policy statements is wearing thin. The senators are pushing for a concrete, coordinated federal strategy that moves beyond recognizing the threat to actively mitigating it, reflecting a demand for action over rhetoric.

Equally significant was the source of the warning. The misuse of Anthropic’s platform did not come to light through a leak discovered by journalists; it was disclosed in a direct announcement from a major AI developer. That disclosure served as a canary in the coal mine, a clear signal from Silicon Valley to Washington that the tools the industry is building are being actively turned into weapons. It also amounts to an implicit call for collaboration, emphasizing that neither the government nor the private sector can confront this challenge alone and that a partnership is essential to developing effective safeguards.

Building a Digital Fortress: A Blueprint for National Defense

The first step toward a viable national defense is establishing a formal and proactive partnership between the Office of the National Cyber Director and leading AI companies. This mandate for collaboration must go beyond reactive information sharing. It requires joint threat modeling to anticipate how adversaries might exploit new AI systems, the co-development of technical safeguards built into AI platforms at their source, and a clear protocol for intelligence sharing when a potential threat is identified. Bridging the gap between Washington’s security apparatus and Silicon Valley’s innovators is critical.

Furthermore, the White House’s AI Action Plan requires a fundamental shift from general directives to a concrete, security-first framework. Such a revision would involve allocating specific federal resources for the research and development of AI-based threat detection systems. It must also establish clear defensive priorities for protecting critical infrastructure and create binding standards for security audits of AI systems used in sensitive government and industrial sectors. Vague goals must be replaced with actionable policy.

Finally, the nation needs to develop and regularly practice a national response protocol for AI-driven cyber incidents. This playbook should detail a clear chain of command, define specific response actions for federal agencies, and include “war-gaming” scenarios that simulate high-speed, automated attacks. Existing defensive postures, designed for the slower pace of human-led conflict, are simply insufficient for the new reality. Preparing for these future battles requires rehearsing them before they happen, so that the nation’s digital defenders are equipped to fight at machine speed.