In today’s fast-paced digital landscape, where technology intertwines with nearly every aspect of life, cybercriminals are increasingly relying on social engineering to exploit human trust rather than technical vulnerabilities. A global incident response report from a leading cybersecurity firm, based on an analysis of more than 700 cases worldwide, finds that over one-third of cyber incidents now stem from these deceptive tactics. The statistic points to a marked shift in the threat landscape, in which attackers manipulate psychology to bypass even robust defenses. Understanding the nuances of human-centered attacks has therefore become a necessity, not just a priority, for organizations aiming to protect their assets in an era of digital connectivity and ever-evolving risks.

Social engineering stands as a stark reminder that technology alone cannot safeguard against cyber threats. Unlike conventional attacks that exploit software glitches or hardware flaws, these strategies target individuals through cunning deception, impersonation, or coercion. From seemingly harmless phishing emails to sophisticated fake system alerts, attackers continuously devise new methods to extract sensitive data or gain unauthorized access. This pivot from purely technical exploits to manipulating human behavior marks a profound transformation in how cyber threats manifest, positioning social engineering as a dominant entry point for breaches and highlighting the urgent need for adaptive, people-focused defenses.

Understanding Social Engineering in 2025

The Core of Human-Centered Attacks

Social engineering, at its essence, is a calculated tactic that preys on human psychology, leveraging trust and emotional responses to deceive individuals into compromising security. Distinct from traditional cyberattacks that probe for weaknesses in code or infrastructure, this approach directly targets people, often exploiting their willingness to help or their fear of consequences. Attackers might pose as a trusted colleague requesting urgent access or craft alarming messages that prompt rash decisions. By exploiting inherent social behaviors, such tactics bypass even the most fortified systems, making them a uniquely dangerous threat in the cybersecurity realm. The focus on human error rather than technical flaws reveals why organizations must rethink their defensive posture to address this deeply personal vector of attack.

This human-centric nature of social engineering also means that no amount of software updates or firewalls can fully mitigate the risk without addressing behavioral factors. Attackers capitalize on everyday interactions, whether through a phone call impersonating a help desk or an email imitating a superior’s tone, to extract credentials or install malware. The subtlety of these interactions often renders them undetectable until significant damage is done. As a result, the emphasis must shift toward educating employees to recognize suspicious patterns and fostering a culture of skepticism about unsolicited requests. Only by understanding the psychological underpinnings of these attacks can defenses evolve to counter the manipulation of trust that lies at their core.

Prevalence and Impact

A staggering 36% of cyber incidents analyzed in a recent global report trace their origins to social engineering, drawing from a pool of over 700 cases across diverse regions and sectors. This figure illustrates the pervasive nature of these attacks, which have become a cornerstone of cybercriminal strategy due to their effectiveness and ease of execution. The consequences are far-reaching, with attackers gaining access to critical systems or sensitive information through seemingly innocuous interactions. This prevalence signals a broader trend where human manipulation often outpaces technical exploits as the preferred method for breaching organizational defenses, demanding a reevaluation of security priorities.

Beyond sheer numbers, the impact of social engineering attacks is profoundly disruptive, with over 50% of incidents resulting in the exposure of sensitive data, often leading to reputational and financial losses. Additionally, many cases cause operational interruptions, halting business processes or services and compounding the damage. The ripple effects can destabilize entire organizations, as stolen data fuels further attacks or extortion schemes. This dual threat of data loss and operational chaos underscores why social engineering is not a peripheral issue but a central challenge that requires immediate and comprehensive action to mitigate its devastating potential.

Evolving Tactics and Techniques

Diverse Methods Beyond Phishing

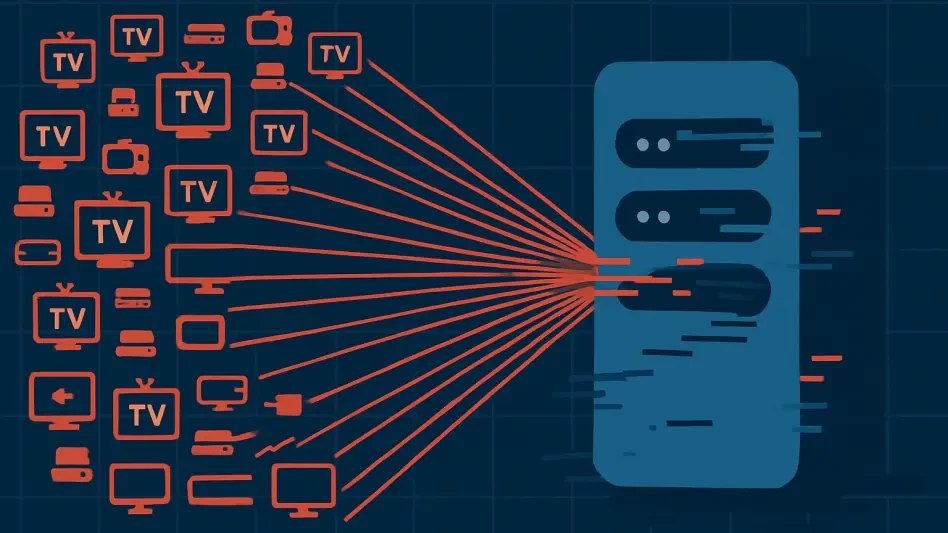

While phishing remains a well-known tactic, over one-third of social engineering incidents now employ a broader array of methods that exploit user behavior across multiple platforms. Techniques such as search engine optimization (SEO) poisoning manipulate search results to direct users to malicious sites, while fake system prompts trick individuals into downloading harmful software under the guise of urgent updates. Help desk manipulation, where attackers impersonate employees to gain insider access, further illustrates the creativity of these schemes. This diversity in approach highlights how cybercriminals adapt to evolving user habits and technological environments, making it harder for traditional defenses to keep pace with the shifting threat landscape.

The expansion of tactics beyond email-based phishing to include browser-based deceptions and social media scams reveals the multi-channel nature of modern social engineering. Attackers exploit the trust users place in familiar interfaces, crafting alerts or messages that appear legitimate at first glance. These methods often target moments of distraction or urgency, increasing the likelihood of success. As platforms for interaction multiply, so do the opportunities for deception, emphasizing the need for vigilance across all digital touchpoints. Organizations must broaden their monitoring and training efforts to cover these varied attack vectors, ensuring that users are equipped to spot and resist manipulation in any form.

Targeted vs. At-Scale Deceptions

Social engineering tactics often fall into two distinct categories: highly targeted, high-touch compromises and broad, at-scale deceptions designed to ensnare large numbers of victims. High-touch attacks involve personalized efforts, such as impersonating internal staff or using stolen identity data to manipulate help desks for real-time privilege escalation. These bespoke strategies rely on detailed reconnaissance to craft convincing narratives that deceive specific individuals, often those with access to critical systems. The precision of such attacks makes them particularly dangerous, as they exploit tailored trust relationships to achieve deep penetration into organizational networks.

Conversely, at-scale deceptions aim to cast a wide net, using tactics like ClickFix scams or fake browser prompts to trick masses of users into compromising their devices. These methods prioritize volume over specificity, banking on the statistical likelihood that a small percentage of targets will fall for the ruse. By leveraging common user behaviors, such as clicking on urgent alerts, attackers maximize their reach with minimal effort. This dual approach, balancing personalized strikes with widespread traps, demonstrates the versatility of social engineering, as cybercriminals tailor their strategies to exploit both individual vulnerabilities and collective tendencies for maximum impact.

Role of Technology and AI

AI as an Amplifier of Threats

Emerging technologies, particularly artificial intelligence (AI), are dramatically enhancing the potency of social engineering attacks, with 23% of incidents incorporating voice-based or callback techniques that sound alarmingly authentic. Generative AI enables cybercriminals to create highly tailored lures, crafting messages or audio that mimic trusted contacts with eerie precision. This technological leap allows for deception at an unprecedented scale, as attackers can automate the production of convincing content that exploits personal trust. The result is a growing difficulty in distinguishing legitimate communications from malicious ones, posing a significant challenge to even the most vigilant users.

The scalability afforded by AI means that what once required significant manual effort can now be executed en masse with minimal resources. Voice spoofing, for instance, can replicate a CEO’s tone to coerce an employee into transferring funds, while AI-generated emails can bypass spam filters through nuanced language. Detection systems struggle to keep up with these sophisticated forgeries, often failing to flag subtle anomalies. As AI continues to evolve, the potential for more intricate and widespread social engineering schemes grows, necessitating advanced countermeasures that can analyze behavioral patterns and flag deviations in real time to prevent breaches.

Financial Motivations

A striking 93% of social engineering attacks are driven by financial gain, underscoring the profit motive behind these deceptive practices. Whether through ransomware demands following a breach or direct theft of funds via fraudulent transfers, the monetary incentive fuels the persistence and innovation of cybercriminals. This overwhelming focus on financial returns explains why sectors handling valuable data or transactions are frequent targets, as the potential payoff justifies the effort. The lure of quick, substantial gains keeps social engineering at the forefront of cybercrime strategies, posing a relentless threat to global economies.

What makes these attacks particularly appealing to threat actors is the low cost and minimal infrastructure required to execute them. Unlike complex technical exploits that demand advanced coding skills or expensive tools, social engineering often relies on simple tools like email or phone calls, paired with psychological manipulation. This accessibility lowers the barrier to entry for would-be attackers, enabling even novices to achieve significant results. As long as financial rewards remain high and risks low, social engineering will continue to attract a wide range of cybercriminals, highlighting the need for defenses that disrupt the economic incentives driving these attacks.

Industry Impacts and Vulnerabilities

Most Affected Sectors

Certain industries bear a disproportionate burden from social engineering attacks, with manufacturing leading at 15% of incidents, followed by professional and legal services at 11%, and financial services alongside wholesale and retail at 10% each. These sectors are prime targets due to their extensive digital interactions and the high value of the data they manage, making them lucrative for attackers seeking substantial returns. Manufacturing, for instance, often involves complex supply chains with multiple access points ripe for exploitation, while financial services hold sensitive client information that can be monetized through fraud or extortion. This distribution reveals a calculated focus by cybercriminals on industries where human error can yield outsized impacts.

The targeting of these sectors also reflects broader trends in digital dependency, where increased connectivity creates more opportunities for deception. Employees in high-pressure environments, such as legal services, may be more susceptible to urgent-sounding scams that exploit time-sensitive decisions. Similarly, retail’s vast customer base offers a large pool for at-scale deceptions like fake promotions. The concentrated impact on these industries signals a need for tailored defenses that account for sector-specific workflows and risks. Without targeted interventions, these fields will remain vulnerable to the relentless ingenuity of social engineering tactics.

Detection Challenges

One of the most pressing obstacles in combating social engineering is the persistent issue of detection, with 13% of critical alerts either going unnoticed or being misclassified as benign. This gap creates exploitable windows for attackers to manipulate identity recovery workflows or move laterally within networks, often undetected for extended periods. Alert fatigue among security teams, overwhelmed by a deluge of notifications, exacerbates the problem, as does the subtlety of social engineering tactics that mimic legitimate activity. These missed signals allow breaches to escalate, turning minor incursions into major crises before a response can be mounted.

Addressing these detection challenges requires a fundamental shift in how alerts are prioritized and monitored. Current systems often fail to contextualize human behavior, missing anomalies in login patterns or unusual access requests that could indicate impersonation. Enhancing visibility into identity-based threats and lateral movement within networks is critical to closing these gaps. Additionally, reducing alert fatigue through automation and refined filtering can ensure that security personnel focus on genuine threats. Until detection mechanisms evolve to match the sophistication of social engineering, organizations will struggle to contain the damage inflicted by these stealthy attacks.
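One way to picture the refined filtering described above is a small triage step that collapses duplicate alerts and ranks identity-related ones higher. The following is a minimal sketch, not a description of any particular product: the `Alert` fields, the `triage` function, and the `identity_boost` weight are all hypothetical names chosen for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Alert:
    """Hypothetical alert record; field names are assumptions."""
    user: str
    rule: str
    severity: int            # 1 (low) to 5 (critical)
    identity_related: bool   # e.g. MFA reset, account recovery, new device


def triage(alerts, identity_boost=2):
    """Collapse duplicate (user, rule) alerts and rank the groups.

    The score is the highest severity in the group, plus a fixed boost
    when any alert in the group touches identity workflows.
    """
    groups = defaultdict(list)
    for alert in alerts:
        groups[(alert.user, alert.rule)].append(alert)

    ranked = []
    for (user, rule), items in groups.items():
        score = max(a.severity for a in items)
        if any(a.identity_related for a in items):
            score += identity_boost
        ranked.append(
            {"user": user, "rule": rule, "count": len(items), "score": score}
        )

    # Highest score first; ties broken by repeat count, then by name.
    ranked.sort(key=lambda r: (-r["score"], -r["count"], r["user"], r["rule"]))
    return ranked
```

Grouping before ranking means an analyst sees one line per user and rule instead of a stream of repeats, which is one plausible way to reduce fatigue without discarding signals. The boost value would need tuning against real alert data.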

Defensive Strategies for the Future

Shifting to Systemic Resilience

To counter the pervasive threat of social engineering, a paradigm shift from mere awareness to systemic resilience is imperative, emphasizing robust identity security as a cornerstone of defense. Implementing analytics to detect abnormal logins and credential misuse early can prevent unauthorized access before it escalates into a full breach. Adopting a Zero Trust security model, which enforces least privilege and conditional access policies, further limits the potential damage by restricting attacker movement within systems. This proactive approach moves beyond reactive training to embed security into the fabric of organizational processes, ensuring that human vulnerabilities are addressed at a structural level.
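To make the combination of login analytics and conditional access more concrete, the sketch below compares a login against a per-user baseline and maps the resulting signals to a decision. It is illustrative only: the `LoginBaseline` fields, the signal names, and the decision thresholds are assumptions, and a real deployment would rely on the identity provider's own policy engine.

```python
from dataclasses import dataclass, field


@dataclass
class LoginBaseline:
    """Hypothetical per-user baseline of normal login behavior."""
    known_countries: set = field(default_factory=set)
    known_devices: set = field(default_factory=set)
    start_hour: int = 7    # usual working hours, local time
    end_hour: int = 20


def login_signals(baseline, country, device_id, hour):
    """Return the list of ways this login deviates from the baseline."""
    signals = []
    if country not in baseline.known_countries:
        signals.append("new_country")
    if device_id not in baseline.known_devices:
        signals.append("new_device")
    if not (baseline.start_hour <= hour < baseline.end_hour):
        signals.append("unusual_hour")
    return signals


def access_decision(signals, sensitive_resource):
    """Map deviation signals to a conditional access outcome.

    Assumed policy: any deviation blocks access to sensitive resources,
    two or more deviations block everything, and a single deviation on
    an ordinary resource triggers step-up authentication.
    """
    if len(signals) >= 2 or (signals and sensitive_resource):
        return "deny"
    if signals:
        return "step_up_mfa"
    return "allow"
```

Keeping the signal extraction separate from the policy makes it easier to tighten or relax the thresholds per resource, which is in the spirit of least privilege: the more sensitive the target, the less deviation is tolerated.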

Building systemic resilience also involves integrating advanced technologies like Identity Threat Detection and Response (ITDR) capabilities to monitor and respond to suspicious activities in real time. These tools can identify deviations from normal user behavior, flagging potential impersonation attempts or compromised accounts swiftly. Complementing this with regular audits of access controls ensures that outdated permissions do not become entry points for attackers. By focusing on early intervention and continuous verification, organizations can create a security posture that withstands the psychological manipulation at the heart of social engineering, safeguarding both data and operations against evolving threats.
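The access control audit mentioned above can be as simple as listing permissions that have not been used for some time. The sketch below assumes each grant is a dictionary with `user`, `role`, and `last_used` keys and uses a 90-day idle threshold; both the record shape and the threshold are hypothetical choices, not values from the report.

```python
from datetime import date, timedelta


def stale_grants(grants, today, max_idle_days=90):
    """Return grants never used or unused for more than max_idle_days.

    Each grant is assumed to be a dict with 'user', 'role', and
    'last_used' (a datetime.date, or None if never used).
    """
    cutoff = today - timedelta(days=max_idle_days)
    return [
        g for g in grants
        if g["last_used"] is None or g["last_used"] < cutoff
    ]
```

A short usage example:

```python
grants = [
    {"user": "alice", "role": "admin", "last_used": date(2025, 1, 10)},
    {"user": "bob", "role": "viewer", "last_used": date(2025, 5, 30)},
    {"user": "carol", "role": "billing", "last_used": None},
]
for g in stale_grants(grants, today=date(2025, 6, 1)):
    print(g["user"], g["role"])
```

Grants flagged this way are candidates for review rather than automatic removal, since some roles are legitimately used only rarely.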

Protecting Human Workflows

Securing human workflows is another critical pillar in defending against social engineering, starting with fortifying help desks through stringent verification processes to thwart impersonation attempts. Attackers often target support staff to gain insider access, exploiting lax protocols or social pressure to bypass security checks. Equipping these teams with clear guidelines and multi-factor authentication for validating identities can significantly reduce risks. Additionally, comprehensive staff training to recognize voice-based scams and other deceptive tactics ensures that employees at all levels act as a first line of defense, capable of spotting and reporting suspicious interactions before they escalate.
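A stringent help desk verification process can be expressed as a checklist that must pass before any credential reset proceeds. The following sketch is one possible shape for such a gate; the `ResetRequest` fields and the specific checks (callback to a number on file, MFA confirmation, manager approval for privileged accounts) are assumptions for illustration, not a prescribed standard.

```python
from dataclasses import dataclass


@dataclass
class ResetRequest:
    """Hypothetical record an agent fills in during a reset call."""
    employee_id: str
    callback_verified: bool    # agent called back the number on file
    mfa_confirmed: bool        # caller approved a prompt on an enrolled device
    manager_approved: bool
    privileged_account: bool


def may_reset(req):
    """Return (allowed, missing_checks) for a credential reset request."""
    missing = []
    if not req.callback_verified:
        missing.append("callback to number on file")
    if not req.mfa_confirmed:
        missing.append("MFA confirmation")
    if req.privileged_account and not req.manager_approved:
        missing.append("manager approval for privileged account")
    return (not missing, missing)
```

Returning the list of missing checks, rather than a bare yes or no, gives the agent a script to follow when a caller applies social pressure: the reset cannot proceed until each named step is complete.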

Expanding visibility beyond traditional email monitoring to include browsers, DNS activity, and collaboration platforms is equally vital in preventing the spread of malicious links or fake prompts. Many social engineering attacks now exploit these less-guarded channels, embedding threats in seemingly benign notifications or shared documents. Deploying tools that track and analyze activity across these mediums can intercept deceptions early, while educating users about safe browsing habits reinforces caution. By prioritizing the protection of human interactions and the digital spaces where they occur, organizations can build a multi-layered defense that counters the diverse and adaptive nature of social engineering threats, ensuring a safer operational environment.
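As one illustration of what DNS visibility can catch, the sketch below flags queried domains that are within a small edit distance of a trusted domain, a common trait of lookalike sites used in fake prompts. The function names, the trusted list, and the distance threshold of 2 are assumptions; production tools typically combine this with other signals such as domain age and reputation.

```python
def edit_distance(a, b):
    """Levenshtein distance between two strings (dynamic programming)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(
                prev[j] + 1,               # deletion
                cur[j - 1] + 1,            # insertion
                prev[j - 1] + (ca != cb),  # substitution (free if equal)
            ))
        prev = cur
    return prev[-1]


def flag_lookalikes(queried_domains, trusted_domains, max_distance=2):
    """Return (queried, trusted) pairs where queried closely imitates trusted."""
    trusted = sorted({t.lower().rstrip(".") for t in trusted_domains})
    flagged = []
    for domain in queried_domains:
        name = domain.lower().rstrip(".")
        if name in trusted:
            continue  # exact match is the real domain
        for t in trusted:
            if edit_distance(name, t) <= max_distance:
                flagged.append((name, t))
                break
    return flagged
```

For instance, with `example.com` as the only trusted domain, a query for `examp1e.com` would be flagged while `example.com` itself and unrelated domains would pass. Short trusted names can produce false positives at this threshold, so the cutoff is a tuning decision.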