Imagine a digital battlefield where attackers wield weapons that learn, adapt, and rewrite themselves in real time to outsmart even advanced defenses. This isn’t science fiction; it’s an emerging reality in today’s cybersecurity landscape, where cybercriminals are harnessing artificial intelligence to create malware that is increasingly difficult to detect and stop. A recent threat intelligence report from Google has revealed a troubling trend: AI is no longer just a tool for crafting deceptive emails or phishing lures but is becoming a core component of malware itself. This shift marks a new phase in cybercrime, one where malicious software can change its behavior during an attack and slip past traditional security measures. As this technology becomes more accessible to threat actors, the implications for digital safety are profound, raising urgent questions about how defenders can keep pace.

The Evolution of AI in Cybercrime

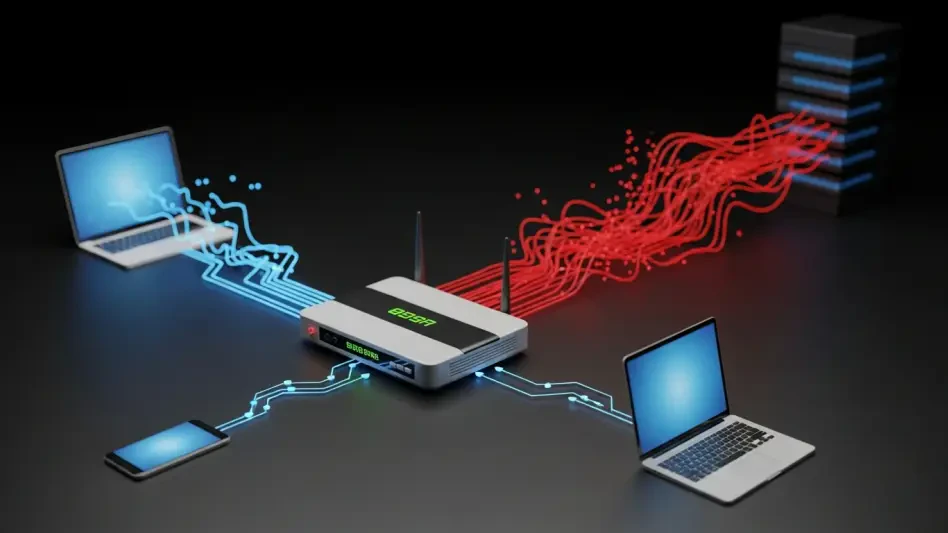

The use of AI by cybercriminals has moved well beyond its earlier, simpler applications. Initially, AI was mostly used to generate convincing phishing messages or to automate basic scripting tasks, a practice sometimes described as “vibe coding.” The landscape has since shifted, with threat actors now embedding AI directly into malware to create programs that adapt on the fly. This progression is a dangerous step forward: in principle, AI-powered malware can analyze its environment, alter its own code, and exploit vulnerabilities with little or no human intervention. Such autonomy makes these threats harder to predict and helps them stay ahead of static defense mechanisms. The sophistication of these tools, even in their early stages, hints at a future where attacks could become far harder to anticipate, pushing the cybersecurity community into uncharted territory.

Moreover, this evolution isn’t merely theoretical; it’s already appearing in real-world samples that challenge conventional security approaches. Reports describe AI-driven malware that can rewrite its own source code hourly to hide from antivirus software and that copies itself into locations such as startup folders for persistence. This is more than a minor upgrade. It changes how malware operates, because the code a defender captured an hour ago may no longer match what is running now. These techniques are still developing, and some components are inactive or experimental, but their disruptive potential is clear. Defenders are being pushed to rethink their strategies, moving from signature-based detection toward systems that can spot unusual behavior across a broader range of activity. The race between attackers and protectors is intensifying, and AI is giving those with malicious intent new ways to tilt the balance.
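To make the signature problem concrete, here is a minimal sketch, assuming a detector that only compares SHA-256 hashes against a list of known samples. The two snippets are harmless stand-ins for "the same program before and after a rewrite," and the one-entry signature set is hypothetical; real antivirus engines use richer signatures than a single file hash.

```python
import hashlib

# Two harmless, functionally equivalent snippets standing in for one program
# before and after it rewrites its own text. Both compute the sum 0+1+2+3+4.
VARIANT_A = "total = 0\nfor n in range(5):\n    total += n\nprint(total)\n"
VARIANT_B = "print(sum(range(5)))\n"


def sha256_hex(text: str) -> str:
    """Return the SHA-256 digest of the text as a hex string."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# Hypothetical signature database: it only knows the first variant.
KNOWN_SIGNATURES = {sha256_hex(VARIANT_A)}


def signature_match(text: str) -> bool:
    """Static check: flag the text only if its exact hash is already known."""
    return sha256_hex(text) in KNOWN_SIGNATURES


if __name__ == "__main__":
    print("variant A flagged:", signature_match(VARIANT_A))
    print("variant B flagged:", signature_match(VARIANT_B))
```

Any change to the text, however small, produces a different hash, so the rewritten variant passes this check even though it does the same thing. That is the gap behavior-focused detection tries to close.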

Emerging AI-Powered Malware Families

Several newly identified malware families show what AI can do in the hands of cybercriminals. Strains such as PROMPTFLUX and PROMPTSTEAL use tactics that set them apart from traditional threats. PROMPTFLUX, for instance, calls on AI models to regenerate its code at regular intervals, obscuring its footprint so that conventional tools are less likely to recognize it. By dynamically creating scripts and placing them in critical system locations, it maintains a persistent hold on infected devices. Some of its features are still under development, and its interactions with AI APIs are limited, but the very concept of self-evolving malware is a wake-up call. These early implementations look like prototypes of what could become widespread, forcing security experts to grapple with threats that don’t follow predictable patterns.

Another strain, PROMPTSTEAL, which has been linked to sophisticated state-sponsored groups, queries large language models to generate malicious commands under the guise of a harmless application. Masquerading as an image-generation tool, it quietly carries out data theft on Windows systems, using generated scripts that are hard for existing tools to recognize. This ability to produce new commands on demand exposes a critical weakness in current defenses: the reliance on static signatures. When malware can disguise itself this way, traditional methods falter and systems are left exposed. The diversity of these AI-powered families illustrates not just the ingenuity of attackers but also the need for a shift in how threats are identified and neutralized. As these tools proliferate, the gap between attacker innovation and defender readiness risks widening.
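One defensive angle this suggests is watching which programs talk to language-model services at all. The following is a minimal sketch, assuming a defender already collects records of which process contacted which host. The host name, the process names, and the `ConnectionEvent` record are all hypothetical placeholders, not details taken from the report.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical values for illustration only; a real deployment would maintain
# its own list of LLM API hosts and of programs approved to call them.
LLM_API_HOSTS = {"llm-api.example.com"}
APPROVED_LLM_CLIENTS = {"approved-assistant.exe"}


@dataclass(frozen=True)
class ConnectionEvent:
    """One observed outbound connection (hypothetical telemetry record)."""
    process_name: str
    destination_host: str


def unexpected_llm_callers(events: Iterable[ConnectionEvent]) -> List[ConnectionEvent]:
    """Return connections to LLM API hosts made by programs not on the approved list."""
    return [
        event
        for event in events
        if event.destination_host in LLM_API_HOSTS
        and event.process_name not in APPROVED_LLM_CLIENTS
    ]


if __name__ == "__main__":
    sample = [
        ConnectionEvent("approved-assistant.exe", "llm-api.example.com"),
        ConnectionEvent("image-tool.exe", "llm-api.example.com"),
        ConnectionEvent("image-tool.exe", "cdn.example.com"),
    ]
    for event in unexpected_llm_callers(sample):
        print("review:", event.process_name, "->", event.destination_host)
```

A check like this does not inspect what the program sends or receives. It only surfaces an unexpected relationship, such as a supposed image tool calling a text-generation service, for an analyst to review.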

Broader Applications and Varied Success of AI Exploits

Beyond malware creation, threat actors are finding inventive ways to exploit AI across their operations, with outcomes that vary widely. Some groups have bypassed the safety mechanisms of AI platforms by framing malicious requests as part of benign activities, such as educational exercises or games. For example, a state-linked entity used an AI model to craft deceptive content, build infrastructure, and even develop data theft tools, all while dodging built-in restrictions. This shows how AI’s accessibility can be weaponized, turning a technology meant for progress into a conduit for harm. Such cases reveal the dual nature of AI: a powerful ally when used ethically, but a dangerous weapon when wielded with malicious intent. The adaptability of these approaches keeps security teams on edge, as each exploit uncovers new weaknesses to address.

However, not all attempts at exploiting AI yield success, offering glimmers of hope amidst the chaos. Certain threat groups have stumbled in their efforts, inadvertently exposing operational details while interacting with AI systems for custom malware development. These missteps have allowed defenders to disrupt activities before significant damage occurred, proving that attackers aren’t infallible. Nevertheless, even these failures serve as learning opportunities for cybercriminals, who refine their tactics with each attempt. The broader trend points to an escalating reliance on AI for everything from lure creation to infrastructure building, pushing the boundaries of what’s possible in cyber warfare. As these diverse applications grow, so too does the complexity of defending against them, demanding that security measures evolve from rigid protocols to flexible, behavior-focused strategies capable of countering unpredictable threats.

Shaping the Future of Cybersecurity Defenses

Looking ahead, the rise of AI-driven malware demands a serious rethink of how cybersecurity defenses are designed and deployed. Traditional tools that rely on known code signatures are increasingly ineffective against threats that morph in real time. Instead, the focus must shift toward solutions that detect anomalous behavior across systems, identifying patterns that deviate from the norm rather than matching predefined threats. This transition won’t be easy. Behavior-based detection requires large-scale data analysis and machine learning capabilities of its own, creating a new arms race in which AI is both the problem and the potential solution. Security professionals must adapt quickly, integrating advanced technologies to predict and counter attacks before they fully unfold, a daunting but necessary endeavor in this rapidly changing landscape.
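As a rough illustration of "deviating from the norm," here is a minimal sketch that scores a new observation against a baseline using a simple z-score. The metric (scripts written to a startup folder per hour), the sample numbers, and the threshold of 3.0 are all assumptions chosen for the example; production systems typically combine many signals and far more sophisticated models.

```python
from statistics import mean, pstdev
from typing import Sequence


def anomaly_score(baseline: Sequence[float], observed: float) -> float:
    """Return how many standard deviations `observed` lies from the baseline mean."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any departure at all is maximally unusual.
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


def is_anomalous(baseline: Sequence[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag the observation if its score exceeds the (assumed) threshold."""
    return anomaly_score(baseline, observed) > threshold


if __name__ == "__main__":
    # Hypothetical hourly counts of script files written to a startup folder.
    baseline_writes = [0, 1, 0, 0, 1, 0, 0, 1]
    for count in (1, 6):
        print(count, "writes this hour, anomalous:", is_anomalous(baseline_writes, count))
```

The point of the design is that nothing here depends on what the written files contain. A program that rewrites itself every hour changes its signature each time, but the burst of activity it causes still stands out against the baseline.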

Furthermore, proactive measures taken in response to emerging threats like PROMPTFLUX, such as disabling associated assets, show that preemptive action can mitigate risks, even if only temporarily. Yet no single malware strain operates in isolation; additional exploitation methods are often needed to compromise systems fully. This interconnected nature of modern attacks means that defenders must adopt a holistic approach, addressing not just individual threats but the entire ecosystem of tools and tactics employed by cybercriminals. Collaboration across industries, including sharing intelligence on AI exploits, becomes vital to staying ahead. By fostering innovation in defensive AI and emphasizing adaptability, the cybersecurity community can build resilience against these evolving dangers and help keep digital environments secure even as attackers wield ever more sophisticated weapons.

Reflecting on a Critical Turning Point

The integration of AI into malware creation marks a pivotal moment in the landscape of cyber threats. Strains like PROMPTFLUX and PROMPTSTEAL, with their ability to adapt dynamically, show a level of sophistication that has caught many defenders off guard. Their emergence signals a departure from static threats and challenges the foundation of traditional security tools. Google’s efforts to disrupt associated assets have provided temporary relief, but the broader trend points to a persistent and growing challenge. The dual role of AI, as both a weapon for attackers and a shield for protectors, defines the balance of this era. As the cybersecurity community navigates this period, the need for innovative, behavior-based defenses has become undeniable, setting the stage for a future where adaptability is no longer optional but essential.