Beneath the surface of the internet lies a hidden realm known as the dark web, accessible only through specialized software and long associated with anonymity and illicit dealings. This shadowy digital space, originally a refuge for political dissenters and privacy advocates, has transformed dramatically into a sophisticated hub for criminal enterprises. Artificial intelligence (AI) has emerged as a powerful catalyst in this evolution, amplifying the scale, efficiency, and stealth of cybercrime. From self-evolving malware to hyper-realistic deepfakes, AI equips malicious actors with tools that challenge even the most advanced cybersecurity defenses. Meanwhile, law enforcement struggles to keep pace in a technological arms race where innovation often benefits the wrong side. This article examines how AI is reshaping the dark web into a more dangerous and complex landscape for global security. The stakes are high, because the balance between technological progress and its exploitation remains precarious.

Unleashing New Tools for Digital Crime

The integration of AI into cybercrime on the dark web marks a significant leap in the sophistication of illicit activities. Techniques such as generative adversarial networks and reinforcement learning enable malware and ransomware to adapt dynamically, evading traditional antivirus solutions with alarming ease. These AI-driven threats can analyze systems in real time, identifying vulnerabilities and deploying tailored attacks that maximize damage. Ransomware, in particular, has become a precise weapon, with AI pinpointing high-value targets and the data whose loss would hurt them most. Even the negotiation process has been automated, as AI chatbots pressure victims into paying ransoms while keeping the perpetrators anonymous. This technological edge transforms once-static threats into fluid, intelligent attacks that continuously outmaneuver conventional defenses, posing a dire challenge to individuals, businesses, and governments trying to safeguard sensitive information in an increasingly hostile digital environment.

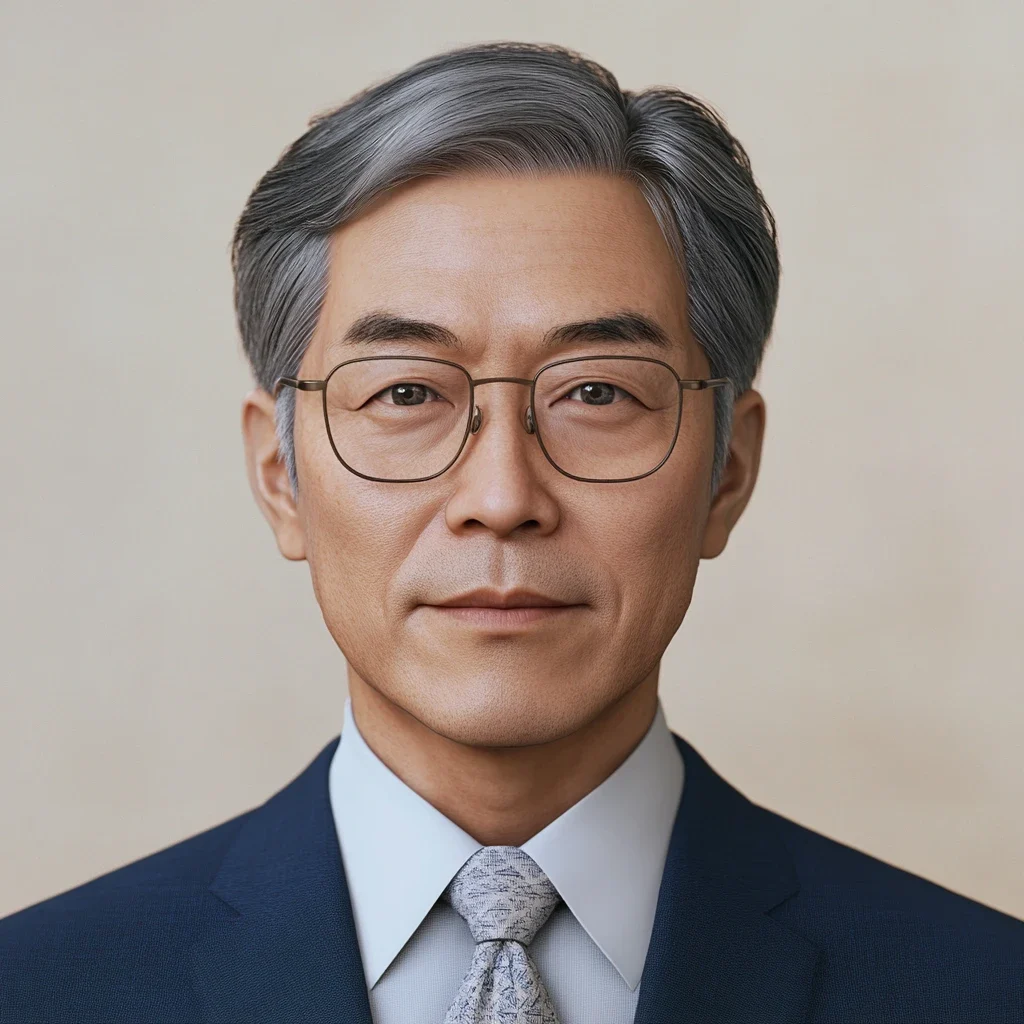

Another chilling innovation fueled by AI on the dark web is the proliferation of deepfake technology, which generates startlingly realistic audio and video forgeries. Malicious actors exploit these tools to orchestrate fraud, often impersonating corporate leaders in business email compromise schemes to trick employees into transferring funds or sharing confidential data. Deepfakes also undermine biometric security by creating synthetic identities capable of bypassing facial recognition systems. The implications extend beyond financial loss, as fabricated content can spread misinformation or defame individuals on a massive scale. This capability to manipulate reality with such precision heightens the risks of identity theft and erodes trust in digital interactions. As deepfake tools become more accessible through dark web marketplaces, the potential for widespread deception grows, leaving both public and private sectors scrambling to develop countermeasures against a threat that blurs the line between fact and fiction in unprecedented ways.

Tailored Attacks and the Business of Crime

Phishing attacks, a long-standing tactic in the cybercriminal arsenal, have reached new levels of deception thanks to AI enhancements on the dark web. By harvesting data from social media platforms and previous breaches, AI crafts highly personalized messages that exploit specific vulnerabilities of targeted individuals. These schemes often appear as legitimate communications, tricking even tech-savvy users into divulging passwords or financial details. Adding to the deception, AI-powered conversational bots simulate human interaction, engaging victims in seemingly authentic dialogues to extract sensitive information. The dark web serves as a fertile ground for distributing these advanced phishing kits, enabling attackers to scale their operations with terrifying efficiency. As a result, the success rate of such scams has surged, amplifying the financial and personal toll on victims while highlighting the urgent need for improved digital literacy and stronger protective measures across all sectors of society.

Beyond individual attack methods, the dark web has evolved into a thriving business ecosystem through Cybercrime-as-a-Service (CaaS) platforms. These subscription-based services offer a range of illicit tools, from botnets and DDoS attack capabilities to AI-driven software like FraudGPT, designed specifically for creating scams and malware. This model drastically lowers the barrier to entry, allowing even those with minimal technical skills—often referred to as script kiddies—to execute complex cyberattacks. The professionalization of this underground economy is striking, with platforms mimicking legitimate businesses by providing customer support, user reviews, and tiered pricing plans. Such accessibility democratizes cybercrime, enabling a broader pool of malicious actors to participate in high-impact schemes. This commercialization not only increases the volume of attacks but also complicates efforts to dismantle criminal networks, as the infrastructure supporting these services becomes more resilient and adaptive to law enforcement interventions over time.

Battling Shadows with Technology

Law enforcement agencies, including global entities like Interpol and Europol, are harnessing AI to counter the rising tide of dark web crime. Advanced algorithms assist in monitoring hidden forums, tracking cryptocurrency transactions often used for illicit payments, and analyzing vast datasets to identify emerging threats before they escalate. Predictive analytics play a crucial role, helping authorities anticipate criminal patterns and disrupt operations proactively. However, the effectiveness of these efforts is constantly undermined by the rapid adaptability of cybercriminals, who employ counter-strategies to evade detection. The dark web remains a murky battleground where technology serves as both a shield for defenders and a sword for attackers, creating a dynamic where neither side can claim a definitive upper hand. This ongoing struggle underscores the complexity of securing a digital space that thrives on anonymity and operates beyond conventional jurisdictional boundaries, testing the limits of international cooperation.
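To make the idea of tracking cryptocurrency transactions a little more concrete, the following is a minimal sketch of one common building block: following funds outward from a flagged address through a transaction graph. It is an illustration under stated assumptions, not a description of any agency's actual tooling. The `trace_funds` function, the wallet names, and the ledger entries are all hypothetical.

```python
from collections import deque


def trace_funds(transactions, flagged_address, max_hops=3):
    """Return {address: hops} for every address reachable from
    flagged_address within max_hops outgoing transfers.

    transactions is a list of (sender, receiver, amount) tuples.
    This is a plain breadth-first search, used here only as an illustration.
    """
    # Build an adjacency list: sender -> list of receivers.
    outgoing = {}
    for sender, receiver, _amount in transactions:
        outgoing.setdefault(sender, []).append(receiver)

    hops = {flagged_address: 0}
    queue = deque([flagged_address])
    while queue:
        current = queue.popleft()
        if hops[current] == max_hops:
            continue  # do not expand beyond the hop limit
        for nxt in outgoing.get(current, []):
            if nxt not in hops:
                hops[nxt] = hops[current] + 1
                queue.append(nxt)
    return hops


# Hypothetical ledger, invented for this example.
ledger = [
    ("wallet_a", "wallet_b", 2.0),
    ("wallet_b", "wallet_c", 1.5),
    ("wallet_c", "exchange_x", 1.4),
    ("wallet_d", "wallet_e", 0.3),
]

reachable = trace_funds(ledger, "wallet_a", max_hops=2)
print(reachable)
```

With a limit of two hops the search stops at `wallet_c`; raising `max_hops` to three also reaches `exchange_x`. Real investigations face far larger graphs and deliberate obfuscation, so a sketch like this only conveys the basic shape of the analysis.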

Cybercriminals on the dark web are not standing still; they actively develop anti-AI tactics to frustrate law enforcement’s technological advances. Techniques such as generating decoy data or flooding systems with misinformation are deployed to obscure genuine criminal activity, making it harder for AI-driven monitoring tools to separate signal from noise. These evasion strategies often exploit the very strengths of AI, turning its analytical capabilities into a liability by overwhelming systems with irrelevant or misleading inputs. Additionally, encryption methods and decentralized networks further shield illicit operations from prying eyes, ensuring that even sophisticated tracking efforts hit dead ends. This cat-and-mouse game reveals the dual nature of AI as a tool that can either fortify security or erode it, depending on who wields it. As both sides refine their approaches, the dark web continues to be a proving ground for technological innovation, albeit one where the stakes involve global safety and economic stability rather than mere progress.
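The signal-versus-noise problem described above can be illustrated with a small statistical sketch, seen from the defender's side. It assumes a monitoring tool that scores daily activity counts, and it compares a mean-based z-score with a median-based score (the median absolute deviation, or MAD). The numbers, thresholds, and function names are hypothetical and chosen only for illustration.

```python
import statistics


def zscore_flags(values, threshold=3.0):
    """Flag values whose distance from the mean exceeds threshold
    population standard deviations."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []
    return [v for v in values if abs(v - mean) / stdev > threshold]


def mad_flags(values, threshold=3.5):
    """Flag values using the median and the median absolute deviation,
    which are far less sensitive to a few extreme inputs."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []
    # 0.6745 rescales the MAD so scores are comparable to z-scores.
    return [v for v in values if 0.6745 * abs(v - median) / mad > threshold]


# Hypothetical daily activity counts for one monitored account.
baseline = [10, 11, 9, 10, 12, 10, 11, 9, 10, 11]
genuine_spike = 60              # the activity an analyst cares about
decoys = [200, 220, 210]        # injected noise meant to distort the picture

clean = baseline + [genuine_spike]
flooded = clean + decoys

print(zscore_flags(clean))      # the spike stands out without decoys
print(zscore_flags(flooded))    # decoys inflate mean and deviation
print(mad_flags(flooded))       # median-based score still sees the spike
```

In this toy data the mean-based score flags the genuine spike only while the decoys are absent, whereas the median-based score still flags it after flooding, alongside the decoys themselves. That robustness holds only while decoys remain a minority of the data, which is exactly the condition attackers try to break.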

Navigating Ethical and Legal Quagmires

The rise of AI in dark web cybercrime introduces profound ethical dilemmas that defy easy resolution. When an algorithm autonomously executes a crime—such as deploying ransomware or generating fraudulent content—who bears accountability? Is it the developer who created the AI, the operator who unleashed it, or an intangible system beyond human control? These questions challenge existing notions of responsibility and justice in the digital age. Furthermore, the use of AI for surveillance by governments raises significant privacy concerns, particularly for legitimate dark web users like activists and journalists who rely on anonymity for safety. Striking a balance between thwarting crime and protecting personal freedoms remains an elusive goal, as every step toward tighter security risks encroaching on individual rights. This tension fuels debates among policymakers, technologists, and civil society about the moral boundaries of leveraging AI in such a contentious and opaque digital environment.

Compounding these ethical challenges are the legal hurdles surrounding AI-driven crime on the dark web. International frameworks for cybercrime lag behind technological advancements, struggling to address the borderless nature of digital offenses. Regulatory inconsistencies between nations hinder coordinated responses, allowing criminals to exploit jurisdictional gaps by operating from regions with lax enforcement. Prosecution becomes a logistical nightmare when evidence is obscured by encryption or when perpetrators remain anonymous through sophisticated AI tools. Efforts to establish global standards for accountability and data sharing face resistance due to differing priorities on privacy and security among countries. As AI continues to redefine the scope of cybercrime, the dark web exposes critical weaknesses in legal systems, pressing the need for innovative governance models that can adapt to a threat landscape where traditional rules and boundaries no longer apply with clarity or effectiveness.

Forging a Path Forward Against Digital Threats

Taken together, the integration of AI into the dark web’s criminal ecosystem has reshaped the landscape of global cybersecurity in ways that demand urgent attention. The sophistication of self-evolving malware, deepfake fraud, and personalized phishing schemes has grown rapidly, driven by algorithms that outpace many defensive measures. Cybercrime-as-a-Service platforms have lowered the threshold for malicious actors, while law enforcement grapples with adaptive adversaries and ethical quandaries that blur the lines of responsibility. Legal systems have faltered in addressing the transnational scope of these threats, leaving gaps that criminals exploit with impunity. The dual-edged nature of AI has turned the dark web into a crucible for technological conflict, where innovation often favors the shadows over the light, challenging the very foundations of digital trust and safety across interconnected societies.

Moving ahead, addressing AI-fueled cybercrime on the dark web requires a multifaceted strategy rooted in collaboration and foresight. Governments, tech companies, and civil societies must unite to develop robust defenses, such as AI-driven threat detection systems that evolve alongside criminal tactics. International agreements should prioritize harmonized legal standards to close jurisdictional loopholes, ensuring swift prosecution regardless of where perpetrators hide. Investment in public education on digital hygiene can empower individuals to recognize and resist personalized attacks. Additionally, ethical guidelines for AI development and deployment must be established to prevent misuse while safeguarding privacy for legitimate users. By fostering innovation in cybersecurity and promoting global cooperation, the fight against dark web crime can shift from a reactive stance to a proactive one, aiming to reclaim a digital space where safety, rather than exploitation, defines the future trajectory of technological progress.