The year 2026 is defined by a silent, high-stakes conflict waged not in seconds or minutes but in the microseconds it takes autonomous systems to make decisions, and attack and defense now move faster than any human can react. A consensus among leading global security experts holds that although artificial intelligence is the primary force reshaping this digital battlefield, an organization’s survival ultimately depends on a disciplined return to the core principles of governance, control, and visibility. The relentless pace of AI innovation has made the most advanced technology not a silver bullet but a magnifier, amplifying both offensive capabilities and defensive weaknesses to an unprecedented degree. This reality demands a fundamental reevaluation of strategy: moving beyond an arms race of tools toward mastery of the automated, interconnected systems that now form the backbone of the modern enterprise.

Four critical themes emerge from this environment. AI is both a powerful offensive accelerator and an essential defensive backbone; AI systems themselves have become a potent and largely unprotected attack surface; generative AI has industrialized social engineering through hyper-realistic deception; and an intensifying arms race between nation-states increasingly targets the fabric of civilian life. Together, these trends mark a pivotal moment in which the future of cybersecurity will be determined not by who has the best AI, but by who can best govern its use.

The Double-Edged Sword of AI in Cyber Warfare

A Widening Gap Between Attacker Agility and Corporate Defense

A stark warning echoes through the cybersecurity community: in 2026, organizations are paying a steep price for an innovation gap, because malicious actors have learned to use artificial intelligence faster and more effectively than the corporations they target. At its core, the gap is operational. Attackers are agile and unconstrained; they can develop, test, and deploy AI-powered attack tools quickly, free of bureaucracy and regulatory oversight, and can retarget their automated campaigns in real time to exploit newly discovered vulnerabilities at machine speed. Large enterprises, by contrast, are often slowed by committees, protracted budget approval cycles for new defensive technology, and the difficulty of integrating modern tools with entrenched legacy systems. The divide is therefore cultural and procedural as much as technological, and it leaves defenders perpetually one step behind in a race where microseconds matter. The result is a widening window of exposure: organizations are most vulnerable at precisely the moment threats are becoming faster, more sophisticated, and more industrialized than ever before.

This vulnerability is compounded by economic pressure that forces security teams to do more with fewer resources. As organizations face predictions of sharp cuts to security spending, the mandate has shifted from comprehensive defense to strategic survival. In this climate, automation is no longer a luxury or a competitive advantage; it is a prerequisite for maintaining even a baseline level of security. Without automated systems that can detect, analyze, and neutralize AI-driven threats as they emerge, security teams are left fighting machines by hand, a battle they cannot win. Because cybercrime has been industrialized, attackers no longer launch discrete campaigns; they orchestrate continuous, automated operations at a scale that outpaces human intervention. Organizations that fail to build their security posture on intelligent automation will be left dangerously unprepared and exposed to the relentless efficiency of AI-equipped adversaries.

The Race to Build Proactive, Intelligent Security

Despite the attackers’ significant advantages, a more optimistic view holds that 2026 could be an inflection point at which the balance of power begins to shift back toward defenders. That shift, however, depends entirely on a radical and widespread change in mindset across the security industry. The traditional, reactive approach, in which incidents move through slow, bureaucratic ticket queues and deliberative committees, is obsolete. To counter threats that operate at machine speed, organizations must adopt a proactive, intelligent, and deeply integrated security posture built on automated systems that not only detect threats in real time but are also authorized to decide on and execute a response, stopping an attack before it can escalate. Achieving this means replacing a collection of siloed security tools with a unified, intelligent platform that learns continuously from the threat landscape and adapts its defenses without waiting for human intervention. It is a fundamental shift from incident management to threat prevention at automated scale.
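To make the idea of systems authorized to “decide on and execute a response” more concrete, here is a minimal Python sketch that maps a detector’s confidence score directly to an action, with no ticket queue in between. The thresholds, the action names, and the `Detection` type are hypothetical placeholders, not values from any real product, and the sketch only returns decisions; wiring `isolate_host` to an actual EDR or firewall API is deliberately left out.

```python
from dataclasses import dataclass

# Hypothetical thresholds; real values depend on the detector and its false-positive rate.
CONTAIN_THRESHOLD = 0.9  # act autonomously at or above this score
ALERT_THRESHOLD = 0.6    # notify analysts at or above this score


@dataclass(frozen=True)
class Detection:
    host: str
    score: float  # detector confidence in [0, 1]


def decide(d: Detection) -> str:
    """Choose a response immediately, with no ticket queue in the loop."""
    if d.score >= CONTAIN_THRESHOLD:
        return "isolate_host"  # placeholder for a real EDR or firewall call
    if d.score >= ALERT_THRESHOLD:
        return "alert"
    return "log_only"


def respond(detections: list[Detection]) -> list[tuple[str, str]]:
    """Decide on every detection; return (host, action) pairs for the audit trail."""
    return [(d.host, decide(d)) for d in detections]
```

For example, `respond([Detection("web-01", 0.95), Detection("db-02", 0.7)])` isolates `web-01` and only alerts on `db-02`. Returning the decisions rather than acting silently keeps every autonomous action reviewable after the fact.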

Complicating this defensive evolution is a critical secondary threat: a looming “trust crisis in data.” As organizations rely more heavily on AI and machine learning models for their defensive capabilities, the integrity of the data used to train and operate those models becomes paramount. Malicious actors are already developing techniques for AI model poisoning and data manipulation, in which subtle, targeted alterations to training data can plant hidden backdoors or cause a defensive model to classify genuine threats as benign traffic. This attack vector threatens the reliability of the very systems built to protect networks, and it also opens a complex new frontier of legal liability: the prospect of landmark lawsuits over the failure of a compromised AI system is becoming a significant concern for corporate boards. Defenders must therefore fight on two fronts, against external threats and for the integrity and trustworthiness of their own intelligent systems.
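One basic control against tampering with training data is to record a cryptographic digest of every approved dataset file and re-check the digests before each training run. The sketch below uses Python’s standard `hashlib`; the function names are hypothetical. Note its limits: it detects files that change after approval, but it cannot detect poisoned records that were already present when the manifest was built, so it complements rather than replaces data validation.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Stream a file through SHA-256 so large datasets need not fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(files) -> dict[str, str]:
    """Record a digest for each approved training file at approval time."""
    return {str(p): sha256_of(Path(p)) for p in files}


def changed_files(manifest: dict[str, str]) -> list[str]:
    """Return approved files that are now missing or whose contents have changed."""
    return [
        p for p, digest in manifest.items()
        if not Path(p).exists() or sha256_of(Path(p)) != digest
    ]
```

A training pipeline would refuse to start whenever `changed_files` returns a non-empty list, and the manifest itself would need to be stored somewhere the data pipeline cannot write to.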

Redefining the Attack Surface

AI Itself as the Primary Target

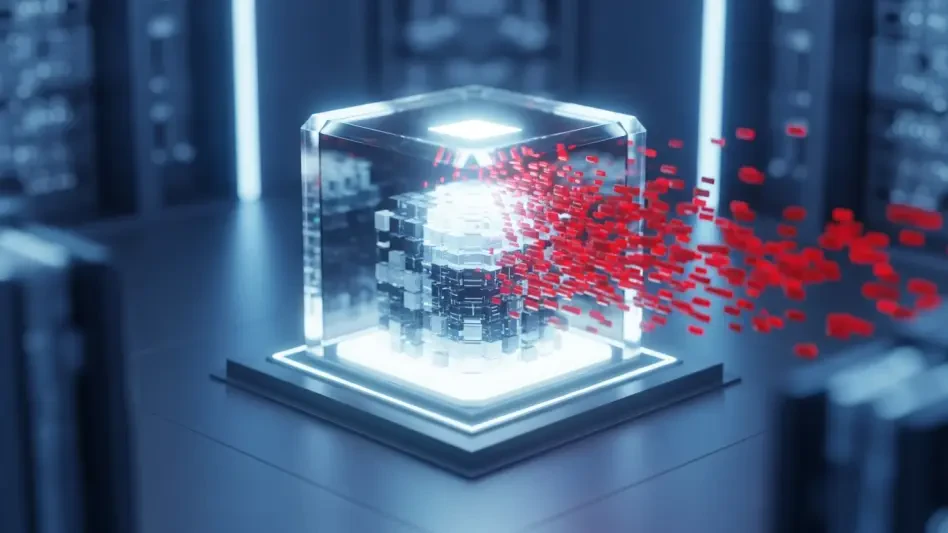

Discussion of AI in cybersecurity has rightly focused on its use as a tool for both offense and defense, but that framing overlooks a growing and perhaps more critical vulnerability: the AI infrastructure itself is rapidly becoming a primary target. Security experts now divide the problem into two distinct domains: using AI to attack, which is an evolution of existing tools, and securing the AI systems that power the modern enterprise, which is a genuinely new challenge. This second domain carries several layers of risk that organizations are only beginning to understand. Vulnerabilities run throughout the AI supply chain, from compromised open-source libraries used to build models to insecure third-party data sources. Natural-language interfaces have also introduced attack vectors such as prompt injection, in which crafted inputs trick a model into divulging sensitive information or executing unauthorized commands. Significant as these risks are, they are overshadowed by the most serious threat in this landscape: the compromise of autonomous AI agents. Unlike passive models that only produce analysis, agents are applications empowered to take direct, automated actions inside a network, which dramatically expands the potential “blast radius” of any successful breach.

The convergence of cloud computing and artificial intelligence has been hailed as a “force multiplier” for productivity, but it has also created a security nightmare by greatly expanding the corporate attack surface. At the center of this new risk are AI agents, which are increasingly deployed to automate complex business processes. These agents are deeply embedded in organizational systems and are often granted broad permissions and direct API connections to many critical tools and data repositories, which makes them both powerful and uniquely dangerous. Experts single out “agent poisoning” as an especially perilous threat: an attacker who gains control of an agent does not merely compromise a single endpoint but seizes a systemic, trusted entity. Because agents often behave as “black boxes” whose actions are hard to monitor and interpret, detecting such a compromise is extremely difficult. A poisoned agent can silently exfiltrate data, manipulate financial transactions, or disable security controls from the inside, multiplying the potential damage. The remedy is to extend rigorous identity security principles to these non-human entities, closely monitoring the gap between the permissions an agent has been granted and the actions it actually takes, so that anomalies signaling a takeover stand out.
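The comparison between granted permissions and actual behavior can be sketched with plain set arithmetic. In this hypothetical Python example, an agent’s grant and its historical baseline are sets of permission strings such as `"crm:read"` (an invented naming scheme, not any vendor’s format). Unused grants are candidates for least-privilege trimming, attempts outside the grant are strong compromise signals, and granted-but-never-used actions are worth a human look.

```python
def permission_gap(granted: set[str], used: set[str]) -> set[str]:
    """Permissions the agent holds but has never exercised: trim these first."""
    return granted - used


def flag_actions(
    granted: set[str], baseline: set[str], recent: list[str]
) -> dict[str, list[str]]:
    """Compare an agent's recent actions with its grant and its own history."""
    return {
        # Attempted without permission: should be blocked; a strong takeover signal.
        "denied": [a for a in recent if a not in granted],
        # Allowed but never seen before: may be legitimate, worth human review.
        "novel": [a for a in recent if a in granted and a not in baseline],
    }
```

For an invoicing agent granted `{"crm:read", "crm:write", "payments:create", "email:send"}` whose history only shows `crm:read` and `email:send`, a sudden `payments:create` lands in `"novel"` and an `iam:create_user` attempt lands in `"denied"`.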

The Weaponization of Human Consciousness

The maturation of generative AI is driving a chilling evolution in cybercrime, from disparate actors launching isolated phishing campaigns to a highly efficient ecosystem of “AI-powered crime factories.” Some experts warn that this industrialization of deception could produce a “breach of human consciousness”: a near-future scenario in which our innate ability to trust what we see and hear in digital interactions is fundamentally, and perhaps permanently, broken. The generic, typo-ridden phishing emails of the past are giving way to hyper-personalized, context-aware messages that are virtually indistinguishable from legitimate communication. These systems can analyze a target’s social media presence, professional history, and communication style to generate precisely tailored lures. The human element, traditionally the weakest link in the security chain, is now targeted with a precision and degree of psychological manipulation previously unimaginable at this scale. The shift goes beyond the theft of credentials to the systematic erosion of trust, the foundation of digital society and commerce.

The most alarming manifestation of this trend, and one that is already becoming a reality, is the live, interactive deepfake video call used for social engineering. Attackers can now create AI-driven avatars that convincingly mimic the appearance and voice of a trusted individual, such as a CEO or a key vendor, and hold complex, adaptive conversations in real time. These deepfakes can answer unexpected questions, display appropriate emotional cues, and guide a target step by step through a sensitive action, such as authorizing a large wire transfer or revealing administrative credentials. When the deception is, for practical purposes, indistinguishable from reality to human senses, fraud at industrial scale becomes devastatingly effective. The conclusion is stark: organizations and individuals can no longer implicitly trust what they see or hear online. This calls for an urgent, universal shift toward a technological defense layer that enforces independent, out-of-band verification and authentication for every critical action, treating digital identity as something to be cryptographically proven rather than socially assumed.
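A minimal sketch of out-of-band approval, assuming each approver has a device enrolled in person with a secret key that never travels over the video call, email, or chat being verified. The challenge binds the exact payee and amount plus a random nonce, so a tag approving one transfer does not validate a different one. It uses HMAC from Python’s standard library only to stay self-contained; a production design would more likely use asymmetric signatures (for example FIDO2/WebAuthn authenticators), and `DEVICE_KEYS` and the function names are hypothetical.

```python
import hashlib
import hmac
import secrets

# Hypothetical key store: each approver's key was provisioned to an enrolled device
# in person and never travels over the channel (video call, email, chat) being verified.
DEVICE_KEYS = {"cfo": secrets.token_bytes(32)}


def make_challenge(request_id: str, payee: str, amount_cents: int) -> bytes:
    """Bind the exact transfer details plus a fresh random nonce into one challenge."""
    nonce = secrets.token_hex(16)
    return f"{request_id}|{payee}|{amount_cents}|{nonce}".encode()


def device_sign(approver: str, challenge: bytes) -> str:
    """Runs on the approver's enrolled device after they review the details it shows."""
    return hmac.new(DEVICE_KEYS[approver], challenge, hashlib.sha256).hexdigest()


def verify(approver: str, challenge: bytes, tag: str) -> bool:
    """Release the transfer only if the tag was produced with that approver's key."""
    key = DEVICE_KEYS.get(approver)
    if key is None:
        return False
    expected = hmac.new(key, challenge, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)  # constant-time comparison
```

However convincing the deepfake on the call, it cannot produce a valid tag without the enrolled device. A real verifier would also record each nonce as used so that a captured approval cannot be replayed.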

A Return to Foundational Security Principles

Geopolitical Tensions in the Digital Realm

The AI-fueled cyber arms race is not occurring in a vacuum; it is embedded in a volatile and escalating geopolitical context. Nation-state actors, particularly those associated with China, Russia, North Korea, and Iran, increasingly use sophisticated cyber capabilities as a primary instrument of state power, with tactics that reach far beyond traditional espionage and data theft. These operations now frequently and deliberately target the fabric of civilian life to achieve strategic objectives. Campaigns have expanded to include widespread infrastructure infiltration and a form of psychological warfare described as “cyber terror”: the theft and public leaking of sensitive personal data belonging to citizens, such as defense industry employees or political figures, with the explicit goal of inciting social unrest, sowing distrust in institutions, and even encouraging physical harm. The digital battlefield has thus expanded to the point where every citizen with a digital footprint is a potential target and pawn in larger international conflicts, blurring the line between military and civilian domains.

A primary focus of this state-sponsored conflict is the increasingly vulnerable junction between corporate information technology (IT) networks and the operational technology (OT) systems that manage critical physical infrastructure. The convergence of the two, driven by the need for efficiency and remote management, has inadvertently created a weak point that can expose essential services such as hospitals, power grids, and water treatment facilities to devastating attacks. Disrupting these services is cheap and high-impact, which makes them attractive targets for creating psychological and deterrent effects without resorting to conventional military action. As the arms race accelerates, security experts anticipate a significant increase in the number of states and state-sponsored third-party groups developing offensive capabilities aimed specifically at exposed, internet-connected OT assets. Because a digital attack on these systems can cause widespread physical harm and societal disruption, securing them has become a matter of national security, demanding a unified and resilient defense strategy that bridges the digital and physical worlds.

The Enduring Importance of Governance and Control

Amid the intense focus on the futuristic threats posed by artificial intelligence, a strong consensus is emerging among seasoned security professionals that “cyber is still cyber.” This view is a crucial counterweight to the prevailing hype: AI dramatically alters the methods, speed, and scale of attacks, but it does not change the attacker’s ultimate goal, which is to gain unauthorized access to an organization’s systems and data. AI acts as a productivity tool that lowers the barrier to entry, enabling less-skilled attackers to build and execute sophisticated campaigns that once required substantial resources and expertise. Because the core objective is unchanged, the most effective defenses are not necessarily exotic new technologies; they remain rooted in fundamental security principles. Experts caution against being mesmerized by the “magic” of AI and forgetting the basics that have always underpinned a strong security posture: tightly controlling network traffic, enforcing least-privilege permissions for every user and system, and using measures such as microsegmentation to limit an intruder’s ability to move laterally once inside a network.
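Microsegmentation is, at its simplest, a default-deny policy between segments: a flow is allowed only if it is explicitly listed. The Python sketch below assumes a hypothetical three-tier application with `web`, `app`, and `db` segments and invented ports; it shows the policy check and how the policy bounds where an intruder on one segment can move next.

```python
from typing import NamedTuple


class Flow(NamedTuple):
    src: str   # source segment, e.g. "web"
    dst: str   # destination segment
    port: int  # destination TCP port


# Hypothetical policy: the only segment-to-segment flows the application needs.
ALLOWED: set[tuple[str, str, int]] = {
    ("web", "app", 8443),
    ("app", "db", 5432),
}


def permitted(flow: Flow) -> bool:
    """Default deny: a flow passes only if it is explicitly allowed."""
    return (flow.src, flow.dst, flow.port) in ALLOWED


def lateral_paths(compromised: str) -> list[tuple[str, int]]:
    """Where an intruder on one segment can go next under this policy."""
    return sorted((dst, port) for src, dst, port in ALLOWED if src == compromised)
```

Under this policy a compromised web server cannot reach the database directly (`permitted(Flow("web", "db", 5432))` is `False`); its only next hop is the application tier on port 8443, which is where monitoring can concentrate.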

This renewed emphasis on fundamentals is not a return to outdated practices but a modernization of core principles to meet the demands of the AI era. One dominant trend in security architecture is the rise of “AI-native security”: systems that use AI to defend the enterprise’s new “crown jewels,” the data and models that power its own AI initiatives. Beyond that, the rise of governance and regulation is predicted to be the single most defining trend in cybersecurity. As governments and regulatory bodies grapple with the societal impact of AI, they will increasingly demand simple, clear answers from organizations about how their automated systems operate: “Who made a decision? Based on what data? And which data was exposed or affected along the way?” Organizations with opaque, siloed security architectures will find these mandates impossible to meet. This regulatory pressure, combined with the sheer speed of AI-driven attacks, turns automation from a strategic option into a necessity. The goal is real-time, comprehensive visibility and transparent, automated control over all assets, with security integrated into every process.
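The three regulatory questions map naturally onto a decision record. The Python sketch below assumes a hypothetical `DecisionRecord` schema with one field per question and stores records in a hash-chained, append-only log, so that editing any past entry after the fact breaks verification. Hash chaining only makes tampering detectable; it does not stop someone from discarding the whole log, which is why real deployments would also anchor the latest hash in an external system.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    actor: str                     # who made the decision (human or agent identity)
    decision: str                  # what was decided or done
    inputs: tuple[str, ...]        # based on what data
    data_touched: tuple[str, ...]  # which data was exposed or affected
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


GENESIS = "0" * 64


def _link(prev: str, record: dict) -> str:
    """Hash the previous entry's hash together with this record's canonical JSON."""
    body = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev + body).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained log: editing any past entry breaks verify()."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, rec: DecisionRecord) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        record = asdict(rec)
        digest = _link(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _link(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

An agent approving an invoice would append something like `DecisionRecord("agent:invoice-bot", "approve_payment", ("erp.invoices",), ("vendor.bank_account",))`, giving an auditor a direct answer to each of the three questions.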

A New Mandate for Resilience

The analysis of the 2026 cyber threat landscape converges on a powerful and essential conclusion. While the advances in AI-driven offense and defense capture the spotlight, the true determinant of organizational resilience lies not in procuring more complex technology but in a disciplined, renewed commitment to the foundational principles of governance and control. The threats discussed here, from hyper-realistic social engineering and poisoned AI agents to nation-state attacks on critical infrastructure, all point to the same set of defensive imperatives. The organizations best positioned to navigate this treacherous environment are those that master the fundamentals: they maintain real-time, comprehensive visibility into their assets; enforce strict, least-privilege identity management for both humans and machines; and deploy robust verification mechanisms that reduce their reliance on the fallible human element. By prioritizing transparent control over technological complexity, these organizations can mitigate the immense risks of artificial intelligence and, in turn, harness its benefits safely and effectively.