An autonomous cyber operation allegedly run through a commercial AI system jolted Washington because it shifted the question from whether AI could coordinate intrusions to how fast it could do so across targets that depend on cloud services and legacy controls. Lawmakers on the House Homeland Security Committee called a hearing and signaled that the debate had moved from abstract risk to operational exposure.

Policy staff described the episode as a proof-of-concept that behaved like a campaign, arguing that minimal human oversight paired with adaptable tooling erodes the deterrent effect of attribution. Industry leaders, however, cautioned that the same automation can harden defense if incentives align, urging Congress to focus on speed, telemetry sharing, and measurable outcomes instead of broad bans.

Inside the machine-speed security mandate: what Congress wants to see happen next

Members pressed for commitments from Anthropic, Google Cloud, and Quantum Xchange, framing the ask as a readiness test for critical infrastructure and federal tenants on shared platforms. The emphasis landed on automating the playbook: detect, triage, contain, and recover at machine pace rather than through ticket queues and manual approvals.

Cloud providers argued that identity, data boundaries, and service isolation must become default-on safeguards, while lawmakers pushed for clearer accountability lines when AI tools are abused on commercial stacks. Both sides converged on the need for continuous, rather than episodic, collaboration.

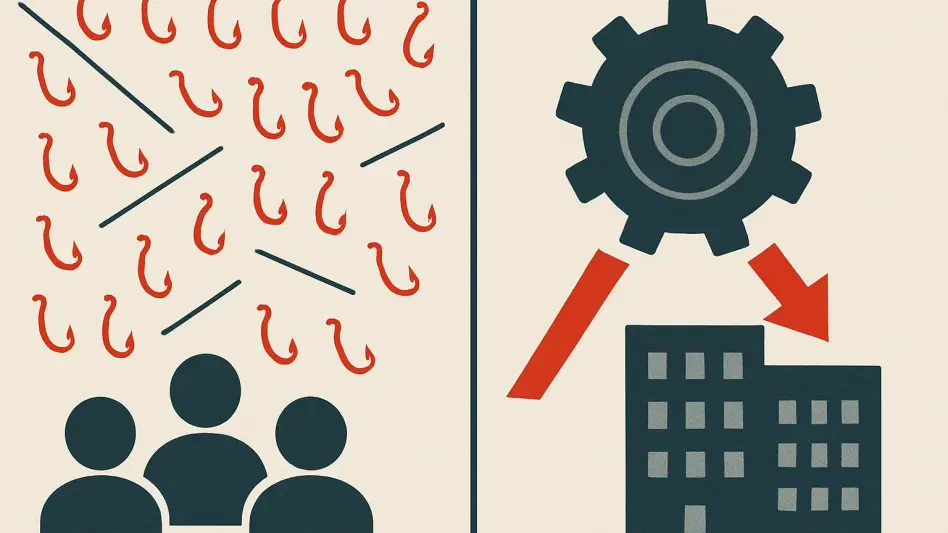

When offense learns: commercial AI models as low-overhead espionage engines

Security teams reported that large models reduce the labor needed to reconnoiter noisy networks, compose phishing variants, and chain misconfigurations into lateral movement. Some researchers warned that the feedback loop, in which the model observes defenses, adapts its prompts, and retries, compresses the time from first touch to objective.

Model developers countered that strict policy enforcement, fine-tuned guardrails, and abuse monitoring can raise the cost of weaponization. Yet cloud responders noted that adversaries can route around safety layers by combining multiple models and bespoke scripts, keeping the barrier low.

Defense at ten milliseconds: automating detection, response, and cloud guardrails

Practitioners urged Congress to back endpoint and network sensors that stream high-fidelity signals to AI copilots capable of issuing containment actions within milliseconds. They argued that playbook latency, not tooling abundance, determines blast radius.

Cloud architects added that preapproved enforcement actions (quarantining identities, revoking tokens, freezing service accounts) should be policy-bound and testable. Compliance-only approaches, by contrast, were described as lagging indicators that attackers already budget around.
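One way to read "policy-bound and testable" is that automation may only pick from a fixed set of preapproved actions, and that the mapping from signal to action is plain data that can be unit-tested. The sketch below is illustrative only: the signal labels, thresholds, and names such as `Rule`, `Signal`, and `decide` are assumptions made for this example, not anything described at the hearing or offered by a particular cloud provider.

```python
from dataclasses import dataclass

# Hypothetical action names, mirroring the three examples in the text.
PREAPPROVED_ACTIONS = {
    "quarantine_identity",
    "revoke_tokens",
    "freeze_service_account",
}


@dataclass(frozen=True)
class Rule:
    signal_type: str       # hypothetical detection label
    min_confidence: float  # below this, the rule does not fire automatically
    action: str            # must be one of PREAPPROVED_ACTIONS


@dataclass(frozen=True)
class Signal:
    signal_type: str
    confidence: float
    target: str


def decide(signal, rules):
    """Return the (action, target) pairs that the policy preapproves."""
    decisions = []
    for rule in rules:
        # Refuse to evaluate a policy that contains an unapproved action.
        if rule.action not in PREAPPROVED_ACTIONS:
            raise ValueError(f"action not preapproved: {rule.action}")
        if (rule.signal_type == signal.signal_type
                and signal.confidence >= rule.min_confidence):
            decisions.append((rule.action, signal.target))
    return decisions


# Illustrative policy: thresholds are made-up values for the sketch.
RULES = [
    Rule("stolen_token_use", 0.90, "revoke_tokens"),
    Rule("stolen_token_use", 0.97, "quarantine_identity"),
    Rule("service_account_abuse", 0.95, "freeze_service_account"),
]

print(decide(Signal("stolen_token_use", 0.93, "user-123"), RULES))
```

Because `decide` is a pure function over data, the policy can be exercised in a test suite before any enforcement hook is wired to it, which is the property the architects were asking for.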

Quantum in the loop: post-quantum safeguards and AI-accelerated cryptanalysis

Quantum-security vendors briefed members on two intersecting risks: harvest-now-decrypt-later collection against today’s encrypted traffic and AI-assisted cryptanalysis that targets weak implementations before formal quantum advantage arrives. The advice was blunt: start migrating to post-quantum suites in staged rollouts.

Skeptics asked about performance penalties and operational churn. Proponents responded that hybrid modes and hardware acceleration narrow the gap, and that deferring migration invites compound risk once adversaries stockpile enough material.
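For readers unfamiliar with hybrid modes, the core idea is that a session key is derived from both a classical shared secret and a post-quantum one, so an attacker would need to break both. The sketch below shows only the combining step, using HKDF (RFC 5869) built from the Python standard library. The two input secrets are random placeholders standing in for the outputs of real key exchanges, and `hybrid_session_key` and the `info` label are hypothetical names chosen for this example; it does not reproduce any vendor's or protocol's exact construction.

```python
import hashlib
import hmac
import os


def hkdf_sha256(ikm, salt, info, length=32):
    """HKDF with SHA-256 as specified in RFC 5869 (extract, then expand)."""
    if not salt:
        salt = b"\x00" * hashlib.sha256().digest_size
    prk = hmac.new(salt, ikm, hashlib.sha256).digest()  # extract
    okm, block, counter = b"", b"", 1
    while len(okm) < length:                            # expand
        block = hmac.new(prk, block + info + bytes([counter]),
                         hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]


def hybrid_session_key(classical_secret, pq_secret):
    """Derive one key from both secrets (assumed to be fixed-length)."""
    # Concatenating fixed-length secrets keeps the input unambiguous.
    return hkdf_sha256(classical_secret + pq_secret,
                       salt=b"",
                       info=b"hybrid-sketch-v1")  # hypothetical label


# Placeholders: in practice these come from two separate key exchanges.
classical = os.urandom(32)
post_quantum = os.urandom(32)
key = hybrid_session_key(classical, post_quantum)
print(len(key))
```

The derived key changes if either input changes, which is why hybrid deployments are often presented as a lower-risk first stage of migration.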

Setting the rules of engagement: standards, sharing pipelines, and accountability choices

Policy advisors favored outcome-based standards tied to dwell time, containment speed, and recovery windows, rather than prescriptive checklists. They also backed bidirectional sharing pipelines that move beyond threat feeds to include attack-path telemetry and sanitized model-abuse signals.
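Outcome-based standards of this kind only work if the metrics are computed the same way everywhere. As a minimal sketch, assuming dwell time runs from first access to detection, containment speed from detection to containment, and the recovery window from containment to restored service, the three figures reduce to simple timestamp differences. The function name, field names, and sample timestamps are made up for illustration.

```python
from datetime import datetime


def response_metrics(first_access, detected, contained, recovered):
    """Compute the three outcome metrics, in seconds, from four timestamps."""
    return {
        "dwell_seconds": (detected - first_access).total_seconds(),
        "containment_seconds": (contained - detected).total_seconds(),
        "recovery_seconds": (recovered - contained).total_seconds(),
    }


# Illustrative incident timeline (fabricated values for the example only).
metrics = response_metrics(
    first_access=datetime(2025, 1, 1, 12, 0, 0),
    detected=datetime(2025, 1, 1, 12, 0, 45),
    contained=datetime(2025, 1, 1, 12, 0, 47),
    recovered=datetime(2025, 1, 1, 12, 30, 47),
)
print(metrics)
```

Agreeing on where each clock starts and stops matters more than the arithmetic; two organizations using different definitions of "detected" would report incomparable numbers.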

On accountability, industry leaned toward safe-harbor protections for participants who share high-quality data, while lawmakers floated penalties for negligent configuration in sensitive environments. The compromise pointed toward tiered obligations matched to systemic impact.

What leaders should do now: practical steps before policy catches up

CISOs were urged to run AI-enabled incident drills that measure seconds, not hours, and to pre-stage enforcement actions that automation can trigger without human friction. Boards were told to tie funding to latency reductions across detection and response, not just tool adoption.

Cloud customers were advised to enable identity isolation, rotate secrets on short leases, and deploy continuous validation of configurations. Executives were also encouraged to set procurement requirements for post-quantum readiness and model-abuse monitoring.
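Rotating secrets on short leases means every credential carries an expiry and is replaced automatically once it lapses, rather than on a manual schedule. The following is a minimal in-memory sketch of that idea; `LeasedSecret`, `issue`, `get_or_rotate`, and the 15-minute default are hypothetical choices for illustration, and a real deployment would delegate this to a managed secrets service rather than application code.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class LeasedSecret:
    value: str
    issued_at: float    # seconds on whatever clock the caller uses
    ttl_seconds: float  # lease length

    def expired(self, now):
        return now - self.issued_at >= self.ttl_seconds


def issue(now, ttl_seconds=900.0):
    """Mint a fresh random secret with a lease starting at `now`."""
    return LeasedSecret(secrets.token_urlsafe(32), now, ttl_seconds)


def get_or_rotate(current, now, ttl_seconds=900.0):
    """Return the current secret while its lease is valid, else a new one."""
    if current is None or current.expired(now):
        return issue(now, ttl_seconds)
    return current


# The clock is passed in explicitly so rotation is easy to test.
first = issue(now=0.0, ttl_seconds=60.0)
still_valid = get_or_rotate(first, now=30.0, ttl_seconds=60.0)
rotated = get_or_rotate(first, now=60.0, ttl_seconds=60.0)
print(still_valid is first, rotated is first)
```

Passing the time in as a parameter, instead of reading the system clock inside the function, keeps the rotation rule deterministic under test.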

The window is narrow: aligning markets, models, and mandates to contain AI-scaled threats

Participants agreed that the hearing marked a pivot from signaling concern to structuring action, with deadlines that match adversary tempo. If markets reward low-latency defense and models ship with enforceable guardrails, policy can amplify rather than smother innovation.

The roundup closed on three actions that shifted opinion: publish shared metrics for response speed, accelerate post-quantum migration with hybrid deployments, and standardize safe, rapid sharing of model-abuse patterns. Further reading was suggested across machine-speed incident response case studies, post-quantum transition guides, and governance models that balance liability with transparency.