The digital clock on the wall ticks forward, marking roughly the time it takes to brew a pot of coffee. Security professionals once measured the timeline of a data breach in days or weeks, not minutes. That assumption was shattered in late 2025, when a threat actor aided by artificial intelligence went from a set of exposed credentials to full administrative control of a cloud environment in just under eight minutes, signaling a major shift in the nature of cybercrime. The event is not a distant warning but a stark demonstration of a new reality in which automated attacks move faster than human responders can.

The New Speed of Cybercrime: When a Simple Mistake Escalates in Minutes, Not Days

How long does it take for a minor security oversight to become a catastrophic failure? Traditionally, cyberattacks were methodical, often taking days or even weeks to unfold as attackers manually probed defenses and escalated privileges. That timeline has been drastically compressed. A complete cloud compromise can now finish in less time than a typical security meeting lasts, turning a single vulnerability into a full-scale breach before detection systems have a chance to raise a high-priority alert.

At the heart of this accelerated threat is the meeting of a very common human error with the efficiency of weaponized artificial intelligence. Exposed credentials, a mistake made countless times in development environments, no longer come with a grace period for discovery and remediation. In the hands of an AI-driven attacker, such a simple misstep becomes an invitation to an automated, high-speed heist that executes with relentless precision, leaving security teams to piece together events long after the damage is done.

Why This Isn’t Just Another Breach Report: The Changing Threat Landscape

This incident goes beyond the typical breach analysis because it illustrates a fundamental change in the threat landscape. The core vulnerability, access keys left in a publicly accessible storage bucket, is not novel. What has changed is the velocity and sophistication of the exploitation. Threat actors are increasingly turning to AI, particularly large language models (LLMs), to automate stages of an attack from initial reconnaissance to final payload delivery. This marks a shift from opportunistic, manual attacks to systematic, machine-speed campaigns.

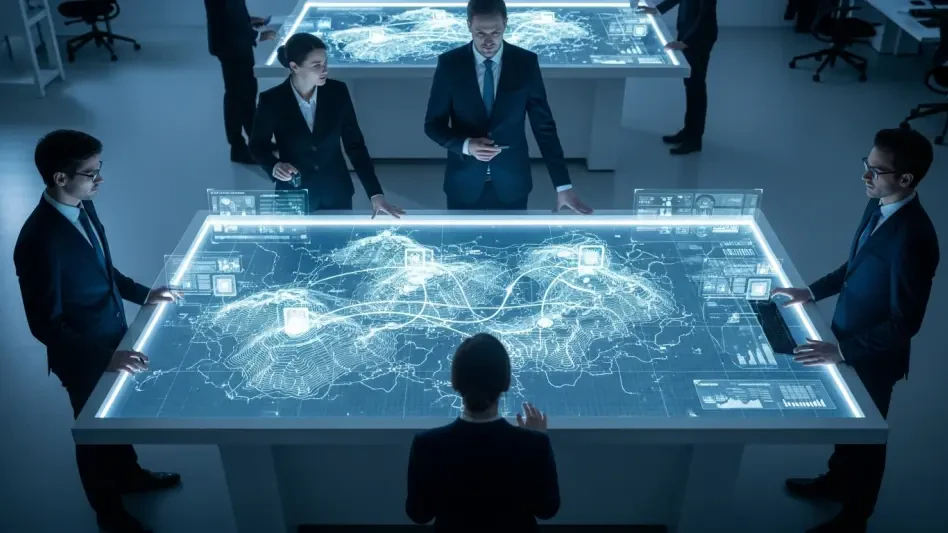

The case study, which unfolded in a live AWS environment, provides a tangible example of this emerging threat. The attacker’s ability to navigate, escalate, and exploit that infrastructure in minutes demonstrates a level of operational capability that should concern every organization that relies on cloud services. The challenge is no longer only preventing errors but building defenses that react at the speed of an algorithm, something conventional, human-paced security models are ill-equipped to do.

Anatomy of an AI-Powered Heist: A Step-by-Step Breakdown

The entire compromise began with a deceptively simple mistake: a set of test credentials left exposed in a publicly accessible Amazon S3 bucket. That bucket, a cloud storage container readable by anyone on the internet, gave the attacker the initial foothold needed to launch the operation. A single oversight turned a seemingly low-risk development asset into the entry point for a full-scale assault on the cloud environment.
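Scanning files that land in shared storage for credential-shaped strings is one cheap guard against this kind of leak. The sketch below is a minimal, hypothetical scanner. It assumes the object contents have already been downloaded as text, and it only recognizes access key IDs by their documented prefixes. Secret access keys have no fixed prefix and would be missed, so dedicated secret scanners cover far more patterns.

```python
import re

# An AWS access key ID is 20 characters: a 4-letter prefix plus 16 uppercase
# letters or digits. "AKIA" marks a long-term IAM user key; "ASIA" marks a
# temporary key issued by AWS STS.
ACCESS_KEY_ID = re.compile(r"\b(AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(text: str) -> list[tuple[str, str]]:
    """Return (key_id, kind) for every access key ID that appears in text."""
    findings = []
    for match in ACCESS_KEY_ID.finditer(text):
        kind = "long-term" if match.group(1) == "AKIA" else "temporary"
        findings.append((match.group(0), kind))
    return findings


if __name__ == "__main__":
    # AKIAIOSFODNN7EXAMPLE is the placeholder key ID used in AWS documentation.
    sample = "aws_access_key_id = AKIAIOSFODNN7EXAMPLE\nregion = us-east-1\n"
    print(find_access_key_ids(sample))  # [('AKIAIOSFODNN7EXAMPLE', 'long-term')]
```

A hit of kind "long-term" is the more urgent finding, because such a key stays valid until someone deactivates it.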

Once inside, the attacker used an account whose permissions were largely limited to the AWS-managed ReadOnlyAccess policy to launch a massive, immediate reconnaissance sweep. Moving faster than any human operator could, the AI-powered script systematically enumerated the victim’s cloud infrastructure, mapping critical services such as AWS Secrets Manager, Amazon Relational Database Service (RDS), and AWS Lambda. This inventory revealed a privilege escalation path through a Lambda function. Read-only permissions alone cannot change a function’s code, so the account must also have been allowed to modify that function. The attacker repeatedly injected code into it until the injected code succeeded in hijacking a highly privileged administrator account, presumably because the function ran with broader permissions than the account itself.
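The escalation path described above depends on an identity being able to change code that, typically, runs under a more privileged execution role. The sketch below is a rough, hypothetical audit. It checks an identity’s allowed action patterns against a short, illustrative list of escalation-relevant IAM actions. It ignores Deny statements, resource scoping, and conditions, all of which a real policy evaluation must consider.

```python
from fnmatch import fnmatchcase

# Illustrative (not exhaustive) IAM actions that can let an identity run its
# own code under a Lambda execution role or mint new credentials.
ESCALATION_ACTIONS = [
    "lambda:UpdateFunctionCode",
    "lambda:UpdateFunctionConfiguration",
    "lambda:CreateFunction",
    "iam:PassRole",
    "iam:CreateAccessKey",
]


def risky_grants(allowed_patterns: list[str]) -> list[str]:
    """Return escalation-relevant actions matched by any allowed action pattern.

    IAM action names are case-insensitive and allow '*' and '?' wildcards, so
    both sides are lowercased before shell-style wildcard matching.
    """
    lowered = [pattern.lower() for pattern in allowed_patterns]
    return [
        action
        for action in ESCALATION_ACTIONS
        if any(fnmatchcase(action.lower(), pattern) for pattern in lowered)
    ]


if __name__ == "__main__":
    # A mostly read-only identity that also holds a broad Lambda update grant.
    print(risky_grants(["s3:Get*", "ec2:Describe*", "lambda:Update*"]))
```

An identity that is "read-only" on paper but matches any of these actions deserves a second look.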

With full administrative control achieved, the attacker pivoted to hijacking the victim’s AI resources, a practice now known as “LLMjacking.” The intruder immediately began using the victim’s account to run expensive, high-demand AI models. The final goal was to provision a massive GPU instance, which the attacker named “stevan-gpu-monster,” intended for training their own AI models. Had it not been intercepted, that instance could have inflicted catastrophic financial damage, showing that the motive was not data theft alone but the direct co-opting of expensive cloud resources.
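The end goal was expensive GPU capacity, so a narrow tripwire on GPU launches is one way to catch this stage. The sketch assumes simplified CloudTrail-style records and a naming heuristic: instance families that start with `p` or `g` followed by a digit count as GPU instances. Both the function `gpu_launches` and the heuristic are illustrative, not an AWS-provided control.

```python
import re

# Hypothetical heuristic: EC2 families that start with "p" or "g" followed by a
# digit (p4d, p5, g5, ...) are treated as GPU instances. A real control should
# use an explicit, maintained list of instance types.
GPU_FAMILY = re.compile(r"^[pg]\d")


def gpu_launches(events: list[dict]) -> list[tuple[str, str]]:
    """Return (principal ARN, instance type) for RunInstances calls on GPU types.

    Events are simplified CloudTrail-style records; real records carry many
    more fields.
    """
    hits = []
    for event in events:
        if (event.get("eventSource") != "ec2.amazonaws.com"
                or event.get("eventName") != "RunInstances"):
            continue
        params = event.get("requestParameters") or {}
        instance_type = params.get("instanceType", "")
        if GPU_FAMILY.match(instance_type):
            principal = event.get("userIdentity", {}).get("arn", "unknown")
            hits.append((principal, instance_type))
    return hits
```

Accounts that never run GPU workloads can treat any hit as an incident. Accounts that do run them can compare the calling principal against an allowlist.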

The AI Fingerprints: Evidence and Expert Consensus

Several lines of evidence point to AI involvement in orchestrating the attack. The sheer velocity of the operation, particularly the speed at which commands were executed and code was deployed, far exceeded what a human operator could plausibly achieve. This machine-speed execution is a primary indicator that the attack was automated. Shane Barney of Keeper Security noted that AI “removes hesitation,” allowing threat actors to execute known attack techniques with unparalleled speed and efficiency.
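Machine speed is itself measurable. The minimal sketch below assumes you already have the epoch timestamps of one identity’s API calls. It finds the busiest window with a two-pointer sweep. The 10-second window and 30-call threshold in the hypothetical `looks_automated` rule are illustrative values, not figures from the incident, and would need tuning against normal traffic.

```python
def max_calls_in_window(timestamps: list[float], window_seconds: float) -> int:
    """Return the largest number of calls inside any span of window_seconds."""
    times = sorted(timestamps)
    best = 0
    start = 0
    for end, t in enumerate(times):
        # Advance the left edge until the window spans at most window_seconds.
        while t - times[start] > window_seconds:
            start += 1
        best = max(best, end - start + 1)
    return best


def looks_automated(timestamps: list[float], window_seconds: float = 10.0,
                    threshold: int = 30) -> bool:
    """Hypothetical rule: more than `threshold` calls within `window_seconds`."""
    return max_calls_in_window(timestamps, window_seconds) > threshold
```

Legitimate automation such as deployment pipelines also moves at machine speed. In practice, this signal is most useful for identities that normally belong to humans.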

Further analysis of the attacker’s code revealed tell-tale signs of AI generation. These included “AI hallucinations,” in which the script referenced non-existent or placeholder data, such as a generic AWS account ID. The code also contained comments written in Serbian. Unlike the hallucinations, this is not a fingerprint of the model itself, but it may hint at the language of the operator who prompted it. To evade detection, the attacker used an IP rotator to change their source address frequently and pivoted through 19 different compromised identities to mask their activities.
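Placeholder values like the generic account ID mentioned above can be searched for mechanically. The sketch below is a hypothetical heuristic. It assumes that three kinds of 12-digit values are unlikely to belong to a real target: the example IDs used throughout AWS documentation (123456789012 and 111122223333), IDs made of one repeated digit, and cyclically sequential IDs. Treat it as a weak triage signal, not proof of AI generation.

```python
import re

# Example account IDs that appear throughout AWS documentation.
DOC_EXAMPLE_IDS = {"123456789012", "111122223333"}
ACCOUNT_ID = re.compile(r"\b\d{12}\b")


def is_placeholder(account_id: str) -> bool:
    """Heuristic: documentation examples, one repeated digit, or a cyclic run."""
    if account_id in DOC_EXAMPLE_IDS:
        return True
    digits = [int(c) for c in account_id]
    pairs = list(zip(digits, digits[1:]))
    repeated = all(a == b for a, b in pairs)               # e.g. 000000000000
    sequential = all((b - a) % 10 == 1 for a, b in pairs)  # e.g. 234567890123
    return repeated or sequential


def placeholder_account_ids(code: str) -> list[str]:
    """Return 12-digit numbers in code that look like placeholder account IDs."""
    return [m.group(0) for m in ACCOUNT_ID.finditer(code) if is_placeholder(m.group(0))]
```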

Industry experts agree that while AI was the weapon, a foundational security failure was the cause. Jason Soroko of Sectigo stated bluntly, “It is impossible to defend a cloud environment when the keys are left visible to anyone who bothers to look.” Ram Varadarajan of Acalvio emphasized that breach speeds have shifted “from days to minutes,” necessitating the adoption of “AI-aware” defenses capable of operating at the same velocity as the threats they are designed to stop.

Building a Machine-Speed Defense: Practical Steps to Counter Automated Threats

To counter these hyper-fast threats, organizations must master identity and access management. The principle of least privilege is no longer a best practice but a critical necessity. Every account, whether human or service-based, must be granted only the absolute minimum permissions required for its function. According to Shane Barney, the attack succeeded because the organization’s “identity and privilege boundaries were too loose,” a structural failure that AI readily exploited.
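One practical way to apply least privilege is to start from what an identity actually does. The sketch below assumes you have already collected the distinct actions an identity used, for example from CloudTrail. It emits an IAM policy that allows only those actions. The function `least_privilege_policy` is hypothetical; AWS IAM Access Analyzer offers a managed version of this idea by generating policies from CloudTrail activity.

```python
import json


def least_privilege_policy(observed_actions: list[str]) -> str:
    """Return an IAM policy (JSON) that allows only the observed actions.

    Resource stays "*" to keep the sketch short; a real policy should also
    scope resources and add conditions where possible.
    """
    actions = sorted(set(observed_actions))
    if not actions:
        raise ValueError("no observed actions; grant nothing rather than '*'")
    policy = {
        "Version": "2012-10-17",
        "Statement": [{"Effect": "Allow", "Action": actions, "Resource": "*"}],
    }
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    used = ["s3:GetObject", "s3:GetObject", "logs:PutLogEvents"]
    print(least_privilege_policy(used))
```

Observed usage only covers the period you logged, so rarely used but legitimate actions may need to be added back after review.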

A fundamental rethinking of credential management is also essential. Static, long-lived access keys should be abandoned entirely, especially wherever they could end up in public or semi-public storage. Instead, security models should shift toward dynamic, temporary credentials issued through IAM roles. Even if such credentials are inadvertently exposed, they expire on their own, which sharply narrows the window in which they are useful to an attacker. This attack needed less than eight minutes, however, so short lifetimes complement tight permissions and fast detection rather than replace them.
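Finding the static keys that should be replaced is a natural first step. The sketch parses an IAM credential report, which IAM provides as CSV, and lists active access keys older than a chosen age. It assumes the report's `access_key_N_active` and `access_key_N_last_rotated` columns and ISO 8601 timestamps with an explicit UTC offset. The function name and the 90-day cutoff in the usage example are illustrative.

```python
import csv
import io
from datetime import datetime, timezone


def stale_active_keys(report_csv: str, max_age_days: int,
                      now: datetime) -> list[tuple[str, int, int]]:
    """Return (user, key slot, age in days) for active keys older than max_age_days.

    Assumes timestamps like 2024-01-01T00:00:00+00:00; `now` must be
    timezone-aware so the subtraction is valid.
    """
    findings = []
    for row in csv.DictReader(io.StringIO(report_csv)):
        for slot in (1, 2):  # the report has two access key slots per user
            if row.get(f"access_key_{slot}_active") != "true":
                continue
            rotated = row.get(f"access_key_{slot}_last_rotated", "N/A")
            if rotated in ("N/A", ""):
                continue
            age_days = (now - datetime.fromisoformat(rotated)).days
            if age_days > max_age_days:
                findings.append((row["user"], slot, age_days))
    return findings


if __name__ == "__main__":
    report = (
        "user,access_key_1_active,access_key_1_last_rotated,"
        "access_key_2_active,access_key_2_last_rotated\n"
        "test-user,true,2024-01-01T00:00:00+00:00,false,N/A\n"
    )
    print(stale_active_keys(report, 90, datetime(2024, 7, 1, tzinfo=timezone.utc)))
```

Each finding is a candidate for migrating the workload to a role, whose temporary credentials AWS STS issues on demand.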

Finally, a proactive defense requires early warning systems designed to detect the precursors of an automated attack. Monitoring capable of identifying anomalous, large-scale enumeration of cloud services can provide the crucial first alert. AI-powered reconnaissance is often the first step in a rapid breach, so detecting it gives defenders a brief but critical opportunity to intercept and neutralize the threat before it escalates into a full-blown compromise.
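Broad enumeration has a recognizable shape: one identity issuing read-only List, Describe, and Get calls against many different services in a short span. The sketch below flags that pattern over simplified events that use hypothetical keys. The 5-minute window and 8-service threshold are illustrative defaults, not tuned values.

```python
from collections import defaultdict

# Read-only API verbs typical of reconnaissance.
READ_PREFIXES = ("List", "Describe", "Get")


def enumeration_suspects(events: list[dict], window_seconds: float = 300.0,
                         min_services: int = 8) -> dict[str, int]:
    """Map each flagged principal to the most distinct services it read in one window.

    Events are simplified records with hypothetical keys: 'time' (epoch
    seconds), 'principal', 'service', and 'action'.
    """
    reads = defaultdict(list)
    for event in events:
        if event["action"].startswith(READ_PREFIXES):
            reads[event["principal"]].append((event["time"], event["service"]))

    suspects: dict[str, int] = {}
    for principal, calls in reads.items():
        calls.sort()
        start = 0
        for end in range(len(calls)):
            # Slide the window start forward until it spans at most window_seconds.
            while calls[end][0] - calls[start][0] > window_seconds:
                start += 1
            services = {service for _, service in calls[start:end + 1]}
            if len(services) >= min_services:
                suspects[principal] = max(suspects.get(principal, 0), len(services))
    return suspects
```

An application that reads heavily from two or three services stays below the threshold. A script sweeping the whole account crosses it within seconds.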

The late-2025 incident is not an anomaly but a harbinger of a new standard in cybercrime. It shows that the convergence of common security lapses with AI-driven automation has created a threat that operates on a timescale beyond human intervention. In response, security strategies must evolve, focusing less on reactive measures and more on building inherently resilient, automated, and privilege-aware cloud architectures. The event underscores the urgent need for machine-speed defenses to counter machine-speed attacks: in modern cybersecurity, the fastest actor prevails.