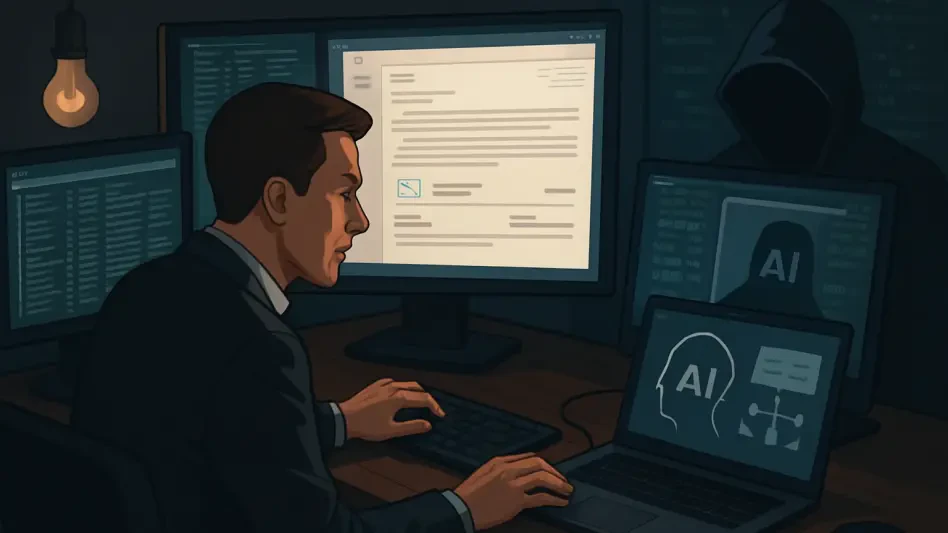

SLH Hackers Recruit Women to Enhance Vishing Operations

The sophistication of voice-phishing has reached a critical threshold as organized cybercriminal syndicates move away from brute-force methods toward refined psychological manipulation. The hacking collective known as Scattered Lapsus$ Hunters,…
